Mistral AI suddenly announces new large-scale language model '8x22B MOE', with a context length of 65k and a parameter size of up to 176 billion

Mistral AI, an AI startup founded by researchers from Google and Meta, has released an open source large-scale language model, '8x22B MOE'. Although details are unknown, it may have more than three times the number of parameters of the model '

magnet:?xt=urn:btih:9238b09245d0d8cd915be09927769d5f7584c1c9&dn=mixtral-8x22b&tr=udp%3A%2F%2Fopen.demonii.com%3A1337%2Fannounce&tr=http%3A%2F%2Ftracker.opentrackr.org%3A1337%2Fannounce

— Mistral AI (@MistralAI) April 10, 2024

RELEASE 0535902c85ddbb04d4bebbf4371c6341 lol

— Mistral AI (@MistralAI) April 10, 2024

Breaking: New AI Model Alert

Mistral AI just dropped a brand new 8x22B MoE model on X.

65k context length, more details to follow soon. Stay tuned! https://t.co/PpHhwkC7nq pic.twitter.com/XsIYMtbyLy

— Shubham Saboo (@Saboo_Shubham_) April 10, 2024

Judging from its name, 8x22B MOE appears to be a larger version of 'Mixtral 8x7B', the open source model Mistral AI released in 2023.

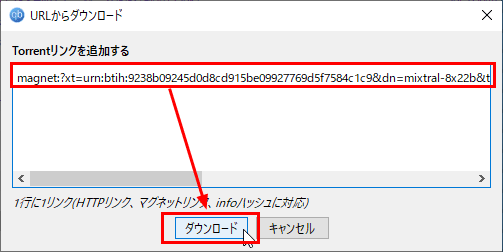

Mistral AI shared 8x22B MOE as a BitTorrent magnet link. Anyone with a BitTorrent client can use the link to download the model data.

magnet:?xt=urn:btih:9238b09245d0d8cd915be09927769d5f7584c1c9&dn=mixtral-8x22b&tr=udp%3A%2F%2Fopen.demonii.com%3A1337%2Fannounce&tr=http%3A%2F%2Ftracker.opentrackr.org%3A1337%2Fannounce
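A magnet link is an ordinary URI whose query string carries the torrent's info hash (`xt`), a display name (`dn`) and percent-encoded tracker URLs (`tr`). As a minimal sketch, Python's standard library can split the link into those fields. The helper name `parse_magnet` is made up for illustration, and the link is written here in its percent-encoded form.

```python
from urllib.parse import urlparse, parse_qs

# The magnet link shared by Mistral AI, in percent-encoded form.
MAGNET = (
    "magnet:?xt=urn:btih:9238b09245d0d8cd915be09927769d5f7584c1c9"
    "&dn=mixtral-8x22b"
    "&tr=udp%3A%2F%2Fopen.demonii.com%3A1337%2Fannounce"
    "&tr=http%3A%2F%2Ftracker.opentrackr.org%3A1337%2Fannounce"
)


def parse_magnet(uri):
    """Hypothetical helper: return the info hash, name and trackers of a magnet URI."""
    parsed = urlparse(uri)
    if parsed.scheme != "magnet":
        raise ValueError("not a magnet URI")

    # parse_qs decodes percent-encoding and collects repeated keys into lists.
    params = parse_qs(parsed.query)

    xt = params["xt"][0]
    prefix = "urn:btih:"
    if not xt.startswith(prefix):
        raise ValueError("unsupported exact-topic value: " + xt)

    return {
        "info_hash": xt[len(prefix):],
        "name": params.get("dn", [None])[0],
        "trackers": params.get("tr", []),
    }


info = parse_magnet(MAGNET)
print(info["info_hash"])
print(info["name"])
for tracker in info["trackers"]:
    print(tracker)
```

A BitTorrent client does the same decoding internally before contacting the trackers, so the link can be pasted into the client as-is.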

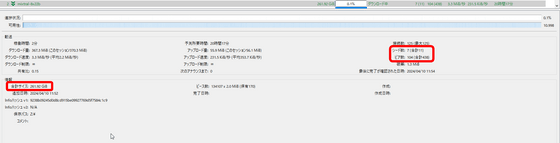

If you use 'qBittorrent', click 'File' and then 'Add Torrent Link'.

Paste the magnet link shared by Mistral AI into the dialog and click 'Download'.

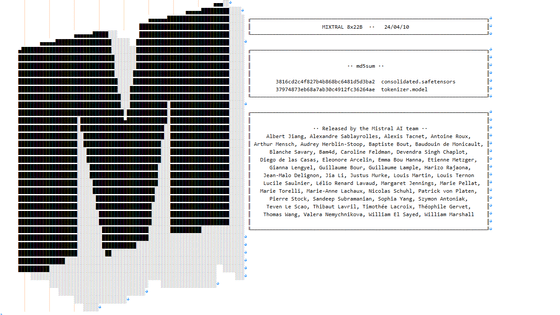

The file size is about 261GB. At the time of writing, 11 people were seeding it and 438 people were downloading it.

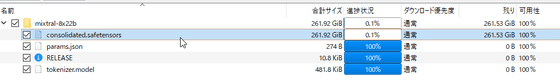

The contents of the file are as follows:

Details of 8x22B MOE are unknown, but the name suggests 8 experts of 22 billion parameters each, so the total number of parameters may be up to 176 billion. The supported context length is said to be 65k. Mistral AI models have often surfaced as 'leaks', but this one was released through the company's official account.

Mistral AI's 2023 release, Mixtral 8x7B, had a total of 46.7 billion parameters and achieved benchmark scores equal to or higher than Llama 2 70B and GPT-3.5.
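The 176 billion figure comes from reading the model name as arithmetic. The sketch below works through that naive upper bound and compares it with Mixtral 8x7B. It assumes that '8x22B' means eight experts of 22 billion parameters each, which Mistral AI had not confirmed at the time of the release. Mixture-of-experts models normally share some layers between experts, which is why Mixtral 8x7B's reported total of 46.7 billion is below its own naive figure of 56 billion.

```python
def naive_moe_params(num_experts, billions_per_expert):
    """Upper bound in billions, assuming no parameters are shared between experts."""
    return num_experts * billions_per_expert


# Assumption: the model name encodes "experts x parameters per expert".
upper_8x22b = naive_moe_params(8, 22)
naive_8x7b = naive_moe_params(8, 7)

# Reported total for Mixtral 8x7B, in billions (from the article).
actual_8x7b = 46.7

# How much of the naive 8x7B figure disappears because experts share layers.
shared_saving = naive_8x7b - actual_8x7b

# Naive 8x22B upper bound relative to Mixtral 8x7B's reported total.
ratio = upper_8x22b / actual_8x7b

print(f"8x22B naive upper bound: {upper_8x22b}B")
print(f"8x7B naive figure: {naive_8x7b}B, reported: {actual_8x7b}B")
print(f"8x7B saving from shared layers: {shared_saving:.1f}B")
print(f"8x22B upper bound vs 8x7B reported: {ratio:.2f}x")
```

If 8x22B shares layers in the same way, its real total would land below 176 billion, which is consistent with the "up to" wording.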

Introducing the free and commercially available large-scale language model 'Mixtral 8x7B', which can perform at the same level or higher than GPT-3.5 with low inference cost - GIGAZINE

Mistral AI is a startup founded in France that has released both open source models such as 'Mixtral 8x7B' and commercial models such as 'Mistral Small', 'Mistral Embed' and 'Mistral Large'. It has already raised more than 385 million euros (about 63 billion yen) from investors including venture capital firms Andreessen Horowitz and Lightspeed Venture Partners, and its valuation has reached about 1.8 billion euros (about 297 billion yen). In February 2024, it was reported that Mistral AI had signed a multi-year partnership agreement with Microsoft and received an investment of 15 million euros (about 2.5 billion yen). The deal was then investigated by EU regulatory authorities concerned about an AI monopoly.

In addition to releasing the aforementioned models, Mistral AI also offers an AI chat service called 'Le Chat'. Mistral AI's open source models can also be used with NVIDIA's free chatbot AI 'Chat With RTX'.

・Added on April 11, 2024 at 10:50

8x22B MOE has been made available on Hugging Face. This means that it can now be used with applications such as LM Studio, which lets you test the performance of large-scale language models even on a laptop.

・Continued

Introducing the free and commercially available open model 'Mixtral 8x22B', with high coding and mathematical abilities - GIGAZINE

in Software, Posted by log1p_kr