Mistral CEO confirms that the large language model leaked on the Internet was made by the company

A mysterious large language model (LLM) file suddenly appeared on Hugging Face, which hosts a community for machine learning and AI. Based on the characteristics of its prompt format, it was rumored to be an LLM from the AI company Mistral, and Mistral's CEO has now confirmed the rumor.

An over-enthusiastic employee of one of our early access customers leaked a quantised (and watermarked) version of an old model we trained and distributed quite openly.

— Arthur Mensch (@arthurmensch) January 31, 2024

To quickly start working with a few selected customers, we retrained this model from Llama 2 the minute we got…

Mistral CEO confirms 'leak' of new open source AI model nearing GPT-4 performance | VentureBeat

https://venturebeat.com/ai/mistral-ceo-confirms-leak-of-new-open-source-ai-model-nearing-gpt-4-performance/

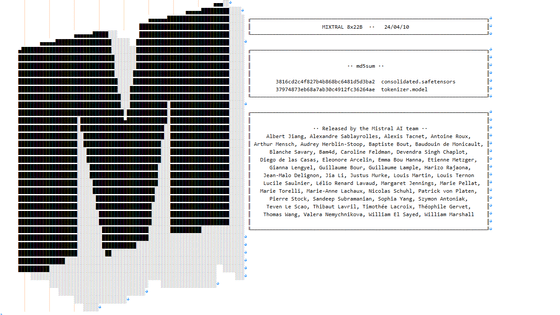

On January 28, 2024, a set of files named 'miqu-1-70b' was uploaded to Hugging Face by a user named 'Miqu Dev.'

miqudev/miqu-1-70b · Hugging Face

The files appeared to be an LLM, and when volunteers investigated, they found that its prompt format was similar to that of 'Mixtral 8x7b,' developed by the Paris-based AI startup Mistral. Mixtral 8x7b has been described as 'the highest performing open source LLM available as of January 2024.'

Some observers have suggested that 'miqu-1-70b' may exceed GPT-4, and machine learning researchers have speculated that the name is an abbreviation of 'Mistral Quantized.'

As speculation about the identity of 'miqu-1-70b' grew, Mistral CEO Arthur Mensch confirmed that the LLM in question belongs to Mistral.

According to Mensch, an over-enthusiastic employee at one of Mistral's early access customers leaked a quantized (and watermarked) version of an old model.

Mistral apparently retrained the model from Meta's LLM, Llama 2, and completed pre-training on the day Mistral 7B was released.

Mensch said that the company has made good progress since then, adding, 'Please look forward to it.'

in Software, Posted by logc_nt