"LLaMA2 Chatbot" lets anyone try Meta's large language model "Llama 2" in a browser for free

'LLaMA2 Chatbot', which lets anyone try Meta's large language model (LLM) 'Llama 2' in a browser for free, has been released.

LLaMA2 Chatbot by a16z-infra

https://llama2.ai/

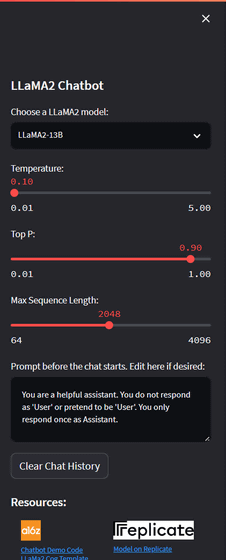

The menu on the left side of the screen lets you change the model and parameters and delete the chat history.

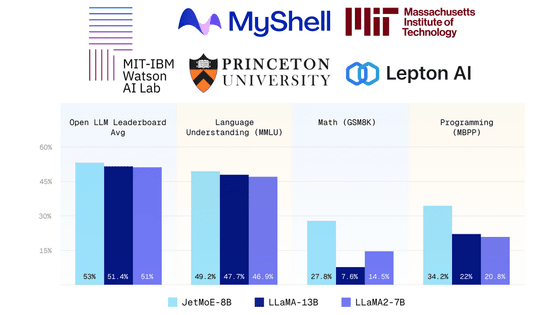

Three models can be selected: 'LLaMA2-70B' with 70 billion parameters, 'LLaMA2-13B' with 13 billion parameters, and 'LLaMA2-7B' with 7 billion parameters. In general, a model with more parameters performs better.

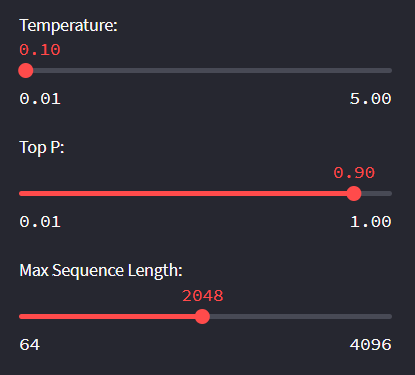

Below the model selection area, you can freely adjust 'Temperature' (higher values increase the randomness of the output), 'Top P' (higher values increase the diversity of word choices), and 'Max Sequence Length' (the maximum length of the text). The defaults are '0.01' for Temperature, '0.90' for Top P, and '2048' for Max Sequence Length.
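To make the effect of Temperature and Top P more concrete, here is a minimal sketch of how these two settings are commonly applied when a language model picks its next token. It is not taken from the LLaMA2 Chatbot source; the function name `sample_next_token` and the toy scores are hypothetical and only illustrate the general technique (temperature scaling followed by top-p, or nucleus, sampling).

```python
import math
import random


def sample_next_token(logits, temperature=0.01, top_p=0.90, rng=None):
    """Pick a token index from raw scores (hypothetical helper, for illustration only)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    rng = rng or random.Random()

    # Temperature: dividing by a small value sharpens the distribution,
    # dividing by a large value flattens it (more randomness).
    scaled = [score / temperature for score in logits]

    # Softmax, shifted by the maximum for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Top P: keep the most probable tokens until their cumulative
    # probability reaches top_p, then sample only among those.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    weights = [probs[i] for i in kept]
    return rng.choices(kept, weights=weights, k=1)[0]


if __name__ == "__main__":
    toy_scores = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
    print(sample_next_token(toy_scores, temperature=0.01, top_p=0.90))
    print(sample_next_token(toy_scores, temperature=1.0, top_p=1.0))
```

With a Temperature as low as the default 0.01, the highest-scoring token is chosen almost every time, so answers are close to deterministic. Raising Temperature or Top P lets lower-scoring tokens through, which is why the output becomes more varied.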

You can also delete the chat history by clicking 'Clear Chat History'.

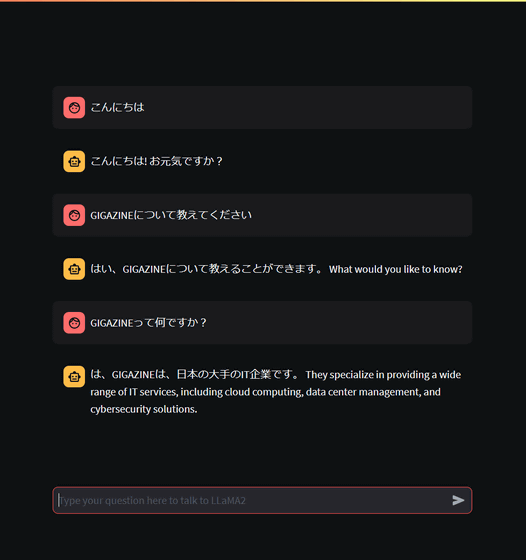

To chat, type a message in the text box at the bottom of the screen.

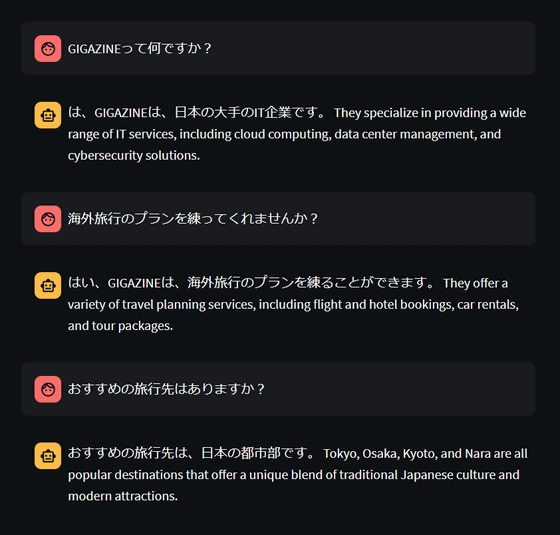

Japanese input is also supported, but the accuracy is underwhelming compared to ChatGPT and Bard. Also, when I chatted in Japanese, it sometimes switched to answering in English partway through.

Continuing the conversation goes like this.

'LLaMA2 Chatbot' was developed by Rajko Radovanović, who researches AI and LLMs at the venture capital firm Andreessen Horowitz.

Of 'Llama 2', Radovanović wrote, 'Especially for creative tasks and interactions, we have found that its performance is similar to GPT-3.5, with far fewer parameters.'

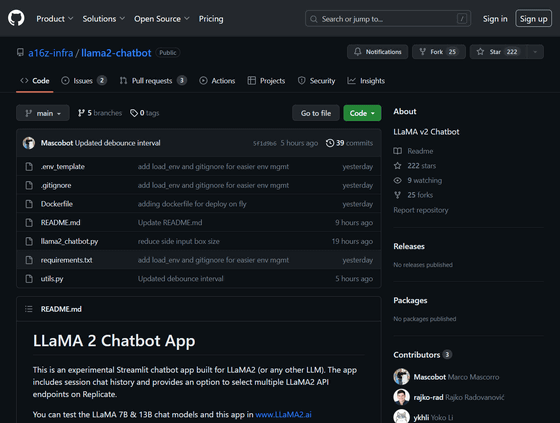

The source code of LLaMA2 Chatbot is published on GitHub.

GitHub - a16z-infra/llama2-chatbot: LLaMA v2 Chatbot

https://github.com/a16z-infra/llama2-chatbot

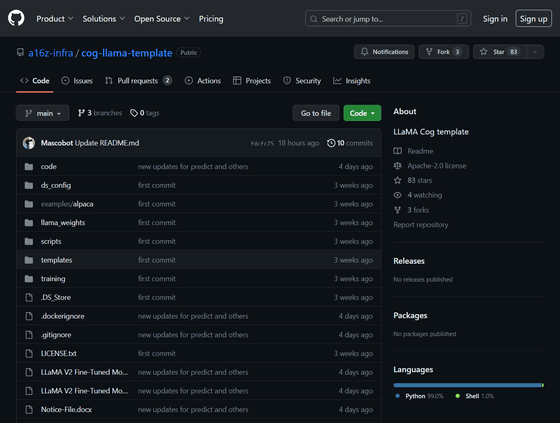

You can also deploy your own Llama 2 using the source code below and Cog.

GitHub - a16z-infra/cog-llama-template: LLaMA Cog template

https://github.com/a16z-infra/cog-llama-template

A Llama 2 API token created by Replicate, a service that makes it easy to deploy AI models, has also been released.

in Software, Web Application, Posted by logu_ii