'JetMoE-8B', a large-scale language model that outperforms Llama 2-7B at less than 1/10,000 of Llama 2-7B's training cost, has appeared

A large-scale language model called 'JetMoE-8B' has been released, with performance that exceeds Meta's 'Llama 2-7B'.

JetMoE

https://research.myshell.ai/jetmoe

GitHub - myshell-ai/JetMoE: Reaching LLaMA2 Performance with 0.1M Dollars

https://github.com/myshell-ai/JetMoE

jetmoe/jetmoe-8b · Hugging Face

https://huggingface.co/jetmoe/jetmoe-8b

JetMoE-8B, released by AI development company MyShell, costs far less to train than existing models, and it can even be fine-tuned on consumer-grade GPUs. In addition, it was trained only on public datasets and its code is open source, so no proprietary resources are required.

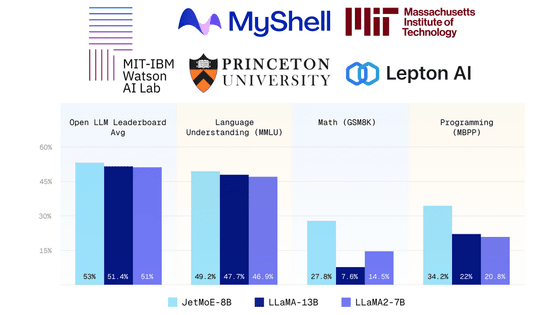

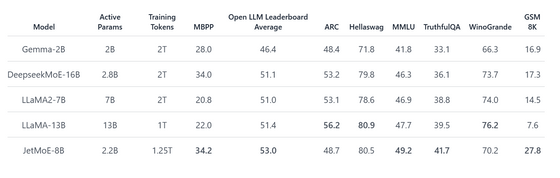

The figures compare JetMoE-8B with Llama 2-7B, DeepseekMoE-16B, Gemma-2B, and other models. JetMoE-8B activates only 2.2B parameters during inference, so its computational cost is lower than that of Llama 2-7B and similar models. In benchmarks on datasets such as MBPP and MMLU, JetMoE-8B outperformed Llama 2-7B and the other models.
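The gap between the 8B in the name and the 2.2B active parameters comes from the mixture-of-experts (MoE) design: a router sends each token to only a few "expert" sub-networks, so most of the weights sit idle for any given token. The sketch below is a generic top-k routing example, not JetMoE's actual implementation; the eight toy experts, k=2, the hand-picked gate logits, and the helper names `make_expert` and `moe_forward` are all illustrative assumptions.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a short list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(weight):
    # Hypothetical toy expert: just scales the input vector.
    # A real expert would be a small feed-forward network.
    return lambda x: [weight * v for v in x]


def moe_forward(x, gate_logits, experts, k=2):
    """Route one token to its top-k experts and mix their outputs.

    gate_logits stands in for the router's scores; in a real model they
    would be computed from x by a learned linear layer (assumption).
    Returns the combined output and the indices of the experts that ran.
    """
    # Pick the k experts with the highest router scores.
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # One common variant: renormalize the weights over the selected experts only.
    weights = softmax([gate_logits[i] for i in top])
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + w * v for o, v in zip(out, y)]
    return out, top


experts = [make_expert(float(i)) for i in range(8)]
gate_logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]
out, used = moe_forward([1.0, 2.0], gate_logits, experts, k=2)
print(used)  # [4, 1]: only 2 of the 8 experts did any work for this token

# Rough share of weights active per token, using the figures in the article.
active_fraction = 2.2 / 8.0
print(f"{active_fraction:.1%}")  # 27.5%
```

Because each token touches only the selected experts, compute per token scales with the active parameters rather than the total, which is why a model with 8B parameters can run at roughly the cost of a much smaller dense model.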

You can try out JetMoE-8B's capabilities on the demo site of 'Lepton AI,' a service for building with large-scale language models.

JetMoE | Lepton AI Playground

First, you need to log in, so click 'Login to chat'.

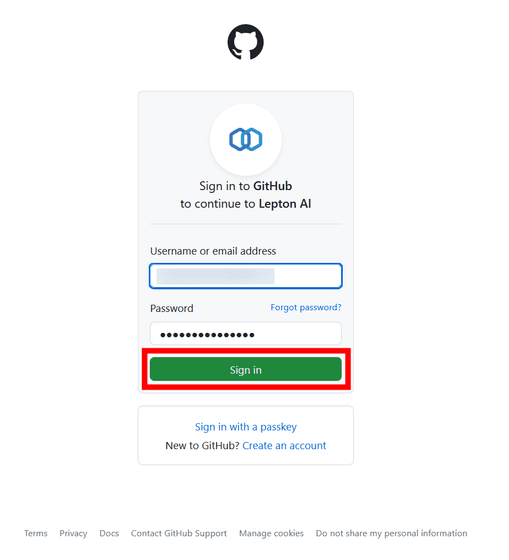

You can log in with a Google, GitHub, or LinkedIn account. Here, select 'GitHub.'

Click 'Sign in.'

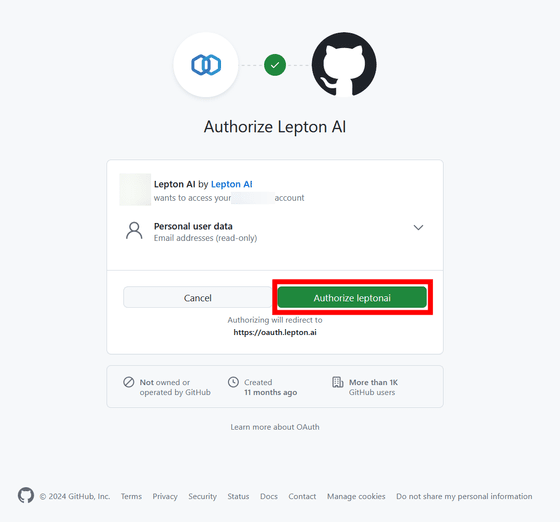

You will be asked to link your account with Lepton AI, so click 'Authorize leptonai'.

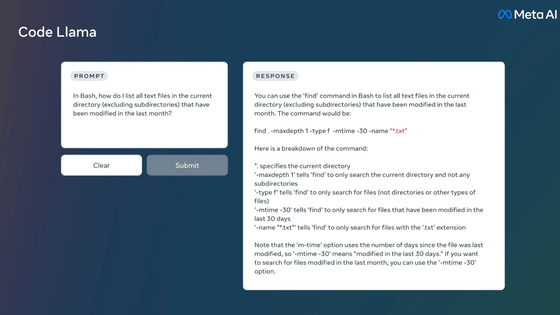

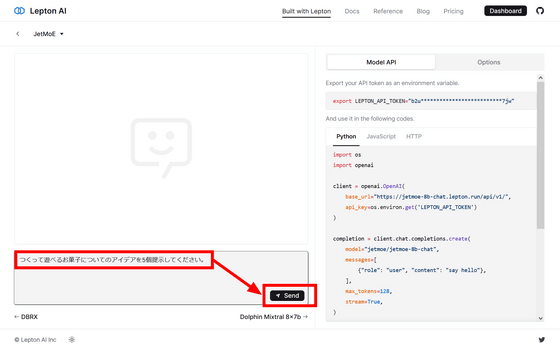

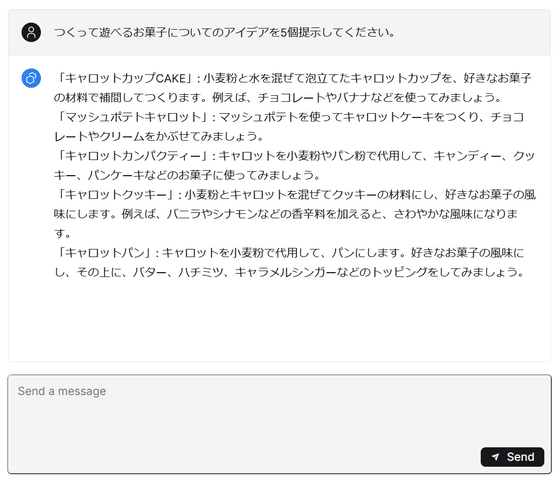

Now that it's ready to use, enter some text into the input box and click 'Send.'

An answer was generated almost instantly.
