NVIDIA and Mistral AI Announce AI Model 'Mistral NeMo,' Adopting New Tokenizer 'Tekken' to Strengthen Multilingual Capabilities Including Japanese

AI development company Mistral AI has announced that it collaborated with NVIDIA to develop the AI model 'Mistral NeMo'. Mistral NeMo outperforms Gemma 2 9B and Llama 3 8B on various benchmarks. It is available on NVIDIA's AI platform, and the model weights have been released as open source.

Mistral NeMo | Mistral AI | Frontier AI in your hands

Mistral AI and NVIDIA Unveil Mistral NeMo 12B, a Cutting-Edge Enterprise AI Model | NVIDIA Blog

https://blogs.nvidia.com/blog/mistral-nvidia-ai-model/?ncid=so-twit-243298&linkId=100000274370636

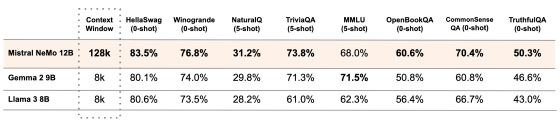

Mistral NeMo is a relatively small AI model with 12B (12 billion) parameters. The table below compares the performance of Mistral NeMo, Gemma 2 9B, and Llama 3 8B. Mistral NeMo has a context window of 128,000 tokens, so it can handle larger prompts than the other two models, and it scores higher than Gemma 2 9B and Llama 3 8B on most benchmarks. Mistral NeMo was also designed with quantization in mind, and according to Mistral AI it can run inference in FP8 with minimal performance degradation.
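To put the 12B parameter count and FP8 support in perspective, the rough arithmetic below estimates how much memory the weights alone occupy at different numeric precisions. This is a back-of-the-envelope sketch that assumes exactly 12 billion parameters and ignores activations, the KV cache needed for long contexts, and runtime overhead, so real memory requirements will be higher.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "fp32": 4,
    "bf16": 2,
    "fp8": 1,
}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Approximate weight storage in gigabytes (10**9 bytes).

    Assumption: weights only; activations, KV cache and framework
    overhead are not included.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


NUM_PARAMS = 12_000_000_000  # assumed round figure for a "12B" model

for precision in ("fp32", "bf16", "fp8"):
    print(f"{precision}: ~{weight_memory_gb(NUM_PARAMS, precision):.0f} GB")
```

Each step down in precision halves the bytes per parameter, so going from BF16 (about 24 GB of weights) to FP8 (about 12 GB) halves the weight footprint. That is why being able to run in FP8 without a large accuracy loss matters for deployment.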

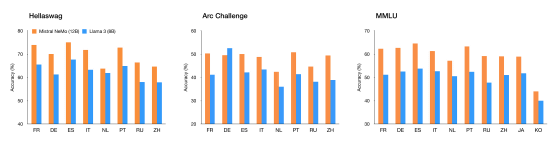

Mistral NeMo was designed as a multilingual model and performs particularly well in Japanese, English, Chinese, Korean, Arabic, Italian, Spanish, German, Hindi, French, and Portuguese. On benchmarks that measure multilingual performance, it scores higher than Llama 3 8B in most tests.

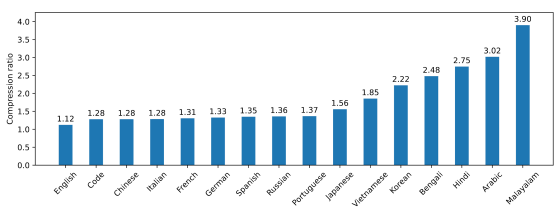

Mistral NeMo also uses a newly developed tokenizer called 'Tekken' to improve tokenization efficiency. Compared with conventional tokenizers, Tekken tokenizes Japanese 1.56 times more efficiently, Arabic 3.02 times more efficiently, and Malay 3.90 times more efficiently.
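A tokenizer is more efficient when it needs fewer tokens to encode the same text, which leaves more of the context window for actual content and reduces compute per sentence. The sketch below assumes the efficiency figures are ratios of token counts (tokens from a baseline tokenizer divided by tokens from the new one) on the same text; the article does not spell out the exact metric. The two toy tokenizers, `byte_tokenizer` and `char_tokenizer`, are hypothetical stand-ins for illustration only and are not how Tekken works; a real measurement would plug in each tokenizer's actual encode function.

```python
from typing import Callable, List

# A tokenizer here is any function mapping text to a list of token IDs.
Tokenizer = Callable[[str], List[int]]


def byte_tokenizer(text: str) -> List[int]:
    """Hypothetical baseline: one token per UTF-8 byte."""
    return list(text.encode("utf-8"))


def char_tokenizer(text: str) -> List[int]:
    """Hypothetical 'improved' tokenizer: one token per Unicode character."""
    return [ord(ch) for ch in text]


def efficiency_ratio(baseline: Tokenizer, candidate: Tokenizer, text: str) -> float:
    """How many times fewer tokens `candidate` needs than `baseline` for `text`."""
    return len(baseline(text)) / len(candidate(text))


sample = "こんにちは世界"  # "Hello, world" in Japanese
print(f"{efficiency_ratio(byte_tokenizer, char_tokenizer, sample):.2f}x")
```

Under this interpretation, a ratio of 1.56 for Japanese would mean the same Japanese text needs roughly 1/1.56, or about 64%, as many tokens as before. With real tokenizers the ratio depends heavily on the script and the training data, which is why the reported gains differ so much between languages.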

Mistral NeMo was trained on NVIDIA's AI platform.

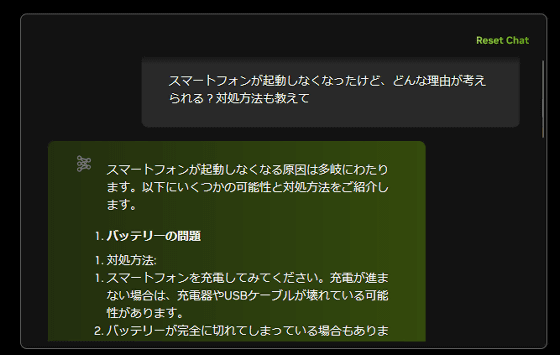

Mistral NeMo has already been packaged as a service that can be used through NVIDIA NIM. When I actually tried 'mistral-nemo-12b-instruct' on NVIDIA NIM, it returned highly accurate answers in Japanese.
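For readers who want to call the hosted model from code rather than the web UI, here is a minimal sketch using only the Python standard library. It assumes that NVIDIA's hosted NIM endpoint accepts OpenAI-style chat completion requests, that the base URL is `https://integrate.api.nvidia.com/v1`, and that the model ID is `mistralai/mistral-nemo-12b-instruct`; verify all three against the NVIDIA API catalog before relying on them. The `NVIDIA_API_KEY` environment variable name is a hypothetical choice.

```python
import json
import os
import urllib.request

# Assumptions: OpenAI-compatible endpoint; base URL and model ID taken from
# the NVIDIA API catalog listing (check both there before use).
BASE_URL = "https://integrate.api.nvidia.com/v1"
MODEL_ID = "mistralai/mistral-nemo-12b-instruct"


def build_request(prompt: str, api_key: str, max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for a single user prompt."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the text of the first returned choice."""
    api_key = os.environ["NVIDIA_API_KEY"]  # hypothetical environment variable name
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With an API key exported in `NVIDIA_API_KEY`, calling `ask("日本の首都はどこですか？")` ("What is the capital of Japan?") sends one request and returns the model's reply. Keeping `build_request` separate from the network call makes the payload easy to inspect without spending API credits.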

Mistral NeMo is also available on Hugging Face as the base model 'Mistral-Nemo-Base-2407' and the instruction-tuned version 'Mistral-Nemo-Instruct-2407'. Both are released under the Apache License 2.0, so they can also be used in commercial applications.

mistralai/Mistral-Nemo-Base-2407 · Hugging Face

https://huggingface.co/mistralai/Mistral-Nemo-Base-2407

mistralai/Mistral-Nemo-Instruct-2407 · Hugging Face

https://huggingface.co/mistralai/Mistral-Nemo-Instruct-2407
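As a rough illustration of running the open weights locally, the sketch below loads 'Mistral-Nemo-Instruct-2407' with the Hugging Face `transformers` library and generates one reply. It assumes a recent `transformers` plus `accelerate` (needed for `device_map="auto"`) and a GPU with enough memory for the weights; the helper names `build_messages` and `generate_reply` are hypothetical. The heavy imports sit inside the function so that merely defining it does not download anything.

```python
from typing import Dict, List

MODEL_ID = "mistralai/Mistral-Nemo-Instruct-2407"  # repository linked above


def build_messages(user_prompt: str) -> List[Dict[str, str]]:
    """Wrap a single user prompt in the chat-message format expected by chat templates."""
    return [{"role": "user", "content": user_prompt}]


def generate_reply(user_prompt: str, max_new_tokens: int = 256) -> str:
    """Load the instruct model and return its reply to one prompt (downloads the weights)."""
    # Imported lazily so this module can be loaded without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )

    # Render the conversation with the model's own chat template, then tokenize it.
    prompt_text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    # The chat template already inserts special tokens, so don't add them twice.
    inputs = tokenizer(prompt_text, return_tensors="pt", add_special_tokens=False).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, skipping the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Swapping `MODEL_ID` for the base model works for loading, but the base model is not tuned for chat, so plain text completion is a better fit for it than a chat template.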
