Introducing 'Mixtral 8x22B', an open model that is free for commercial use and has strong coding and math skills

Mistral AI, an AI startup founded by researchers from Google and Meta, has released an open source large language model called Mixtral 8x22B. The model is available under the Apache 2.0 open source license, which also permits commercial use.

Cheaper, Better, Faster, Stronger | Mistral AI | Frontier AI in your hands

The Mixtral 8x22B model was announced and distributed through the official X account on April 10, but no detailed information was provided at the time. Mistral AI has now published an official release describing the model.

Mistral AI suddenly announces new large-scale language model '8x22B MOE', with a context length of 65k and a parameter size of up to 176 billion - GIGAZINE

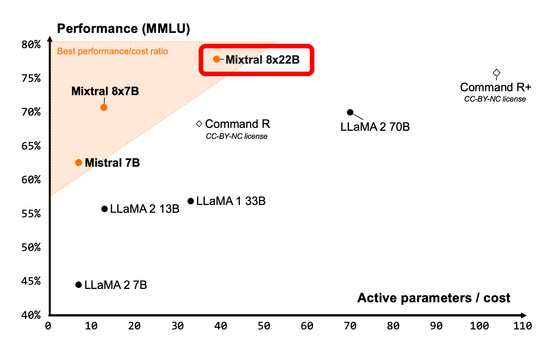

Mixtral 8x22B is a sparse mixture-of-experts (SMoE) model that uses only 39 billion (39B) active parameters out of a total of 141 billion (141B) per inference, which makes it much more cost-effective than its total parameter count suggests.
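To make the idea of "active" parameters concrete, here is a minimal toy sketch of sparse mixture-of-experts routing. It is not Mistral AI's implementation: the choice of 8 experts with the top 2 selected per input, the `top_k_route` and `moe_layer` helpers, and the scalar "experts" are all assumptions made for illustration. Only the 39B and 141B figures come from the announcement.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    # Renormalize the gate weights over the selected experts only
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))

def moe_layer(x, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    routes = top_k_route(router_logits, k)
    return sum(w * experts[i](x) for i, w in routes)

# Eight toy "experts" (hypothetical stand-ins for feed-forward blocks):
# expert i simply multiplies its input by i + 1.
experts = [lambda x, s=s: s * x for s in range(1, 9)]

# Hypothetical router scores for one input
router_logits = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]

routes = top_k_route(router_logits)
output = moe_layer(3.0, experts, router_logits)

# Share of parameters in use per inference, from the figures in the article
active_fraction = 39 / 141

print("selected experts:", [i for i, _ in routes])
print("layer output:", output)
print(f"active parameters: {active_fraction:.1%} of the total")
```

Only the two selected experts are evaluated; the other six are skipped entirely, which is where the savings come from. The active share works out to roughly 28% rather than exactly 2/8, presumably because some parameters outside the expert blocks are used for every input.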

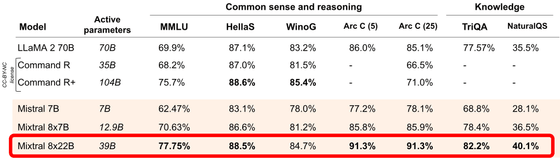

The cost-performance comparison with major open-weight models is shown in the figure below. The newly released Mixtral 8x22B maintains high performance while keeping the number of active parameters, which drives inference cost, low.

The benchmark comparison with major open models is shown in the figure below. On most benchmarks, Mixtral 8x22B outperforms earlier models.

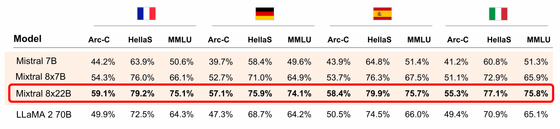

In addition to English, Mixtral 8x22B supports French, Italian, German, and Spanish. According to Mistral AI, in languages other than English it outperforms both the company's earlier models and LLaMA 2 70B.

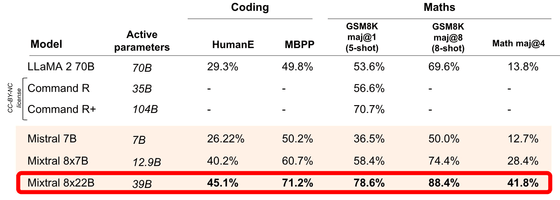

It also has strong math and coding skills.

Mixtral 8x22B natively supports function calling and has a context window of 64,000 (64K) tokens. The model is released under the open source Apache 2.0 license, so it can be used commercially free of charge.
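As a rough illustration of what function calling means in practice, the sketch below shows the application side: the model emits a structured request naming a function and its arguments, and the application runs it. The JSON shape, the `get_weather` tool, and the `dispatch` helper are hypothetical and are not Mistral AI's actual format or API.

```python
import json

def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would query a weather service."""
    return f"Sunny in {city}"

# Registry of functions the application is willing to expose to the model
TOOLS = {"get_weather": get_weather}

def dispatch(tool_call_json: str) -> str:
    """Parse a model-emitted tool call and run the matching function."""
    call = json.loads(tool_call_json)
    func = TOOLS[call["name"]]  # raises KeyError for unknown tools
    return func(**call["arguments"])

# Assumed example of what a model might emit when it decides to call a tool
model_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'

result = dispatch(model_output)
print(result)
```

In a full loop, the result would typically be sent back to the model so it can compose its final answer.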
