Mistral releases its first multimodal AI model, Pixtral 12B, via torrent, GitHub, and Hugging Face, with availability on its chatbot Le Chat and API platform La Plateforme coming soon

French AI startup Mistral has announced its first multimodal model, Pixtral 12B, which can process images as well as text.

mistral-community/pixtral-12b-240910 · Hugging Face

Pixtral 12B is a 12-billion-parameter model that can process text and images together, enabling tasks such as describing images, identifying objects, and answering questions about images.

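It is released under the Apache 2.0 license, which means anyone can download it for free and use, modify, and redistribute it, including for commercial purposes, as long as they follow the license's attribution and notice requirements.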

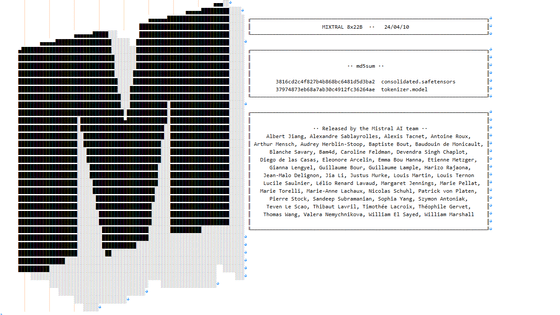

The model can be downloaded using the torrent magnet link shared by Mistral.

magnet:?xt=urn:btih:7278e625de2b1da598b23954c13933047126238a&dn=pixtral-12b-240910&tr=udp%3A%2F% https://t.co/OdtBUsbMKD %3A1337%2Fannounce&tr=udp%3A%2F% //t.co/2UepcMHjvL %3A1337%2Fannounce&tr=http%3A%2F% https://t.co/NsTRgy7h8S %3A80%2Fannounce

— Mistral AI (@MistralAI) September 11, 2024
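A magnet link does not contain the model files themselves. It is a URI that identifies the torrent by its BitTorrent info-hash (the `xt=urn:btih:...` field), optionally gives a display name (`dn`), and lists tracker URLs (`tr`) that a torrent client can contact to find peers. The sketch below is a minimal, illustrative Python example using only the standard library to pull those fields apart; `parse_magnet` is a hypothetical helper, not part of any Mistral tooling. The tracker addresses in the link above appear to have been replaced by shortened t.co links when the post was embedded, so the example uses only the intact `xt` and `dn` fields.

```python
from urllib.parse import urlsplit, parse_qs

def parse_magnet(uri: str) -> dict:
    """Hypothetical helper: split a magnet URI into info-hash, display name, and trackers."""
    parts = urlsplit(uri)
    if parts.scheme != "magnet":
        raise ValueError("not a magnet URI")
    # parse_qs percent-decodes values and collects repeated keys (e.g. several "tr") into lists
    params = parse_qs(parts.query)
    xt = params.get("xt", [""])[0]
    prefix = "urn:btih:"
    if not xt.startswith(prefix):
        raise ValueError("no BitTorrent v1 info-hash (xt=urn:btih:...) found")
    return {
        "info_hash": xt[len(prefix):].lower(),
        "name": params.get("dn", [None])[0],
        "trackers": params.get("tr", []),
    }

# Only the intact fields from Mistral's link; the tracker list is omitted here.
magnet = ("magnet:?xt=urn:btih:7278e625de2b1da598b23954c13933047126238a"
          "&dn=pixtral-12b-240910")
info = parse_magnet(magnet)
print(info["info_hash"])  # 7278e625de2b1da598b23954c13933047126238a
print(info["name"])       # pixtral-12b-240910
```

Because the info-hash identifies the torrent's contents, a client can locate peers through trackers or the DHT and verify the downloaded pieces against it, which is why a link this short is enough to distribute a multi-gigabyte model.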

Mistral used the same distribution method when it released 8x22B MoE.

Mistral AI suddenly announces new large-scale language model '8x22B MOE', with a context length of 65k and a parameter size of up to 176 billion - GIGAZINE

The model is also available on GitHub and Hugging Face, but there is no web demo for trying out its capabilities.

According to Sophia Yang, Mistral's head of developer relations, Pixtral 12B will soon be available for testing on Le Chat, Mistral's chatbot, and La Plateforme, its API platform.

You can download the model via the torrent link. It'll be available on le Chat and la Plateforme soon.

— Sophia Yang, Ph.D. (@sophiamyang) September 11, 2024

It is unclear what image data Mistral used to train Pixtral 12B. Image datasets used to train AI models may contain copyrighted material, which has frequently been a point of contention in the past.


in Software, Posted by log1p_kr