Microsoft releases cost-effective, small language model 'Phi-3', open model available for commercial use

Microsoft has released the 'Phi-3' family of small language models, which deliver strong performance for their size. The smallest model in the family, Phi-3-mini, is an open model that can be used commercially free of charge.

Introducing Phi-3: Redefining what's possible with SLMs | Microsoft Azure Blog

Tiny but mighty: The Phi-3 small language models with big potential - Source

https://news.microsoft.com/source/features/ai/the-phi-3-small-language-models-with-big-potential/

[2404.14219] Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone

https://arxiv.org/abs/2404.14219

The article below covers the December 2023 release of the previous model, Phi-2. At the time, Phi-2 was said to perform at least as well as models up to 25 times its size.

Microsoft releases small language model 'Phi-2', which performs as well as models up to 25 times its size despite being small

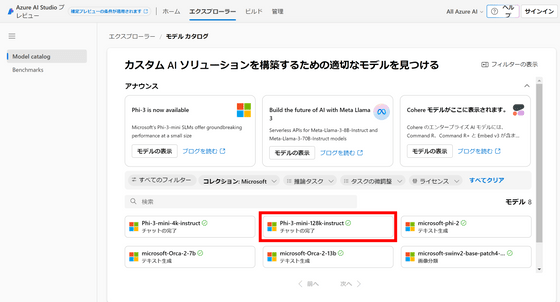

Alongside the announcement of the 'Phi-3' family, Microsoft released its smallest model, 'Phi-3-mini', which has 3.8 billion (3.8B) parameters and is now available in the Azure AI model catalog. The other models in the family are 'Phi-3-small' with 7 billion (7B) parameters and 'Phi-3-medium' with 14 billion (14B) parameters; both are expected to arrive in the Azure AI model catalog in the near future.

Larger language models with more parameters tend to be better at complex tasks such as advanced reasoning, data analysis, and context understanding. However, the more parameters a model has, the more computing power it requires for both training and inference.

Smaller models like Phi-3 require less computing power, which keeps costs down, makes them easier to customize through fine-tuning, shortens response times, and lets them run locally on a device. The key is to maintain performance while reducing the number of parameters.
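To put the parameter counts in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. The parameter counts are the ones given in this article; the storage precisions (16-bit and 4-bit) and the helper name `weight_memory_gb` are assumptions chosen for illustration, not figures from Microsoft, and the estimate ignores activations and other runtime overhead.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Parameter counts come from the article; the precisions are illustrative assumptions.
PHI3_PARAMS = {
    "Phi-3-mini": 3.8e9,
    "Phi-3-small": 7e9,
    "Phi-3-medium": 14e9,
}

# Assumed storage formats: 16-bit floats (2 bytes) and 4-bit quantization (0.5 bytes).
BYTES_PER_PARAM = {"fp16": 2.0, "int4": 0.5}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, params in PHI3_PARAMS.items():
        sizes = ", ".join(
            f"{fmt}: {weight_memory_gb(params, nbytes):.1f} GB"
            for fmt, nbytes in BYTES_PER_PARAM.items()
        )
        print(f"{name} ({params / 1e9:.1f}B parameters) -> {sizes}")
```

Under these assumptions, the weights of a 3.8B-parameter model need only a few gigabytes, which is roughly why a model of this size could plausibly fit on a local device while much larger models cannot.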

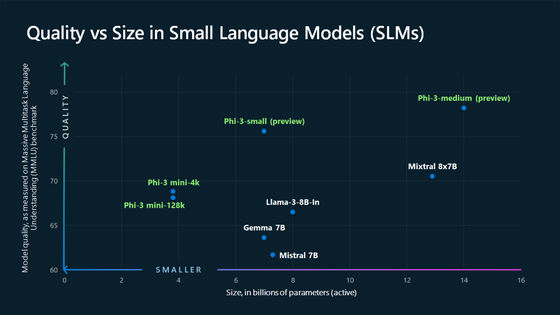

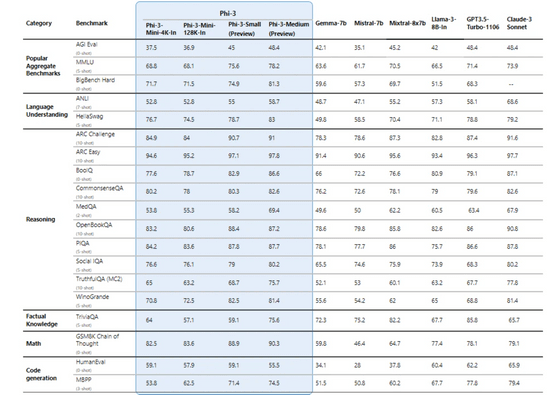

The comparison with open models such as Mixtral 8x7B, Llama 3 8B, Gemma 7B, and Mistral 7B is shown in the figure below. The vertical axis represents performance and the horizontal axis represents size, so models toward the upper left combine small size with high performance. It seems that the Phi-3 family has succeeded in pushing the 'quality vs. cost trade-off curve' to a new position.

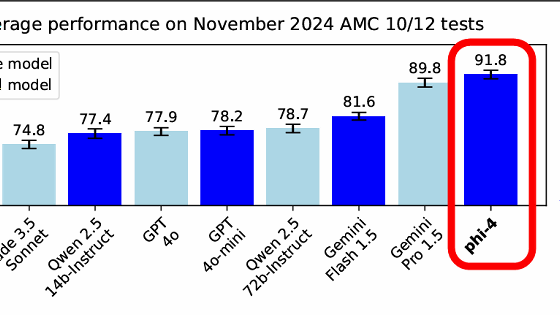

The specific benchmark results for each model are shown in the figure below. All of the Phi-3 models are capable of beating larger models, but their small size limits their capacity to memorize facts, so they do not score well on benchmarks that measure factual knowledge, such as TriviaQA.

Released at the same time as the announcement, Phi-3-mini is available under the MIT license and is available in

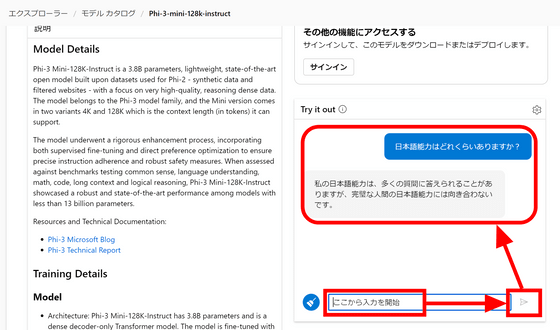

You can try Phi-3-mini yourself in the Azure AI model catalog. When you access the site, the available AI models are listed at the bottom of the page, so click 'Phi-3-mini-128k-instruct'.

If you enter a prompt in the chat box at the bottom right and click the paper airplane icon, Phi-3-mini will respond. When I asked, 'How good is your Japanese ability?', it replied in Japanese, albeit in a slightly unnatural way.
