Data of Meta's large-scale language model 'LLaMA-65B' leaked on 4chan

The weight data of LLaMA-65B, a large-scale language model from Meta, has been leaked on the online bulletin board site 4chan.

Facebook LLAMA is being openly distributed via torrents | Hacker News

https://news.ycombinator.com/item?id=35007978

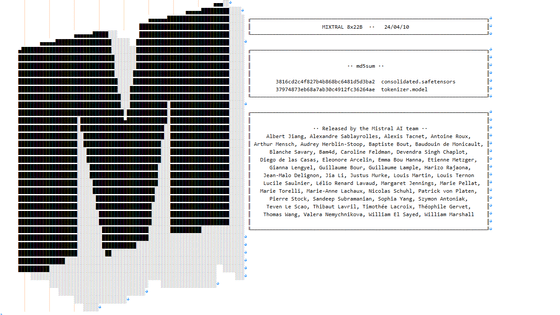

LLaMA is a large-scale language model developed by Meta AI Research, Meta's AI research organization. Operating conventional large-scale language models such as OpenAI's ChatGPT and DeepMind's Chinchilla requires multiple accelerators optimized for AI, but LLaMA can run adequately even on a single GPU. Its advantage is that its number of parameters, which indicates the scale of the model, is far smaller. At the time of writing, part of the model data is published on GitHub, and the "weights" learned by the neural network can be downloaded separately by contacting Meta AI Research.

Meta announces large-scale language model 'LLaMA', can operate with a single GPU while having performance comparable to GPT-3 - GIGAZINE

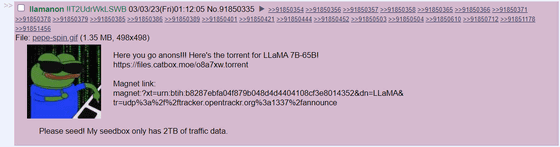

However, on March 3, 2023, a user named "llamanon !!T2UdrWkLSWB" suddenly published a torrent file and a magnet link for downloading the weight data of LLaMA-65B (65 billion parameters) in a thread about AI chatbots on the online bulletin board site 4chan.

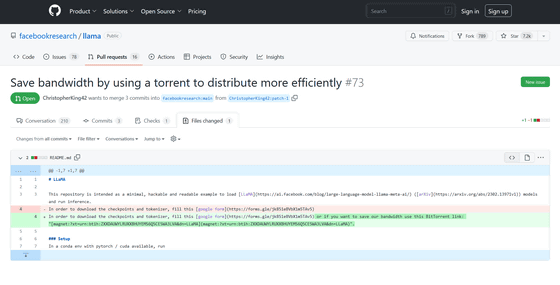

There is also a pull request to add the magnet link published on 4chan to LLaMA's repository on GitHub.

Save bandwidth by using a torrent to distribute more efficiently by ChristopherKing42 · Pull Request #73 · facebookresearch/llama · GitHub

https://github.com/facebookresearch/llama/pull/73/files

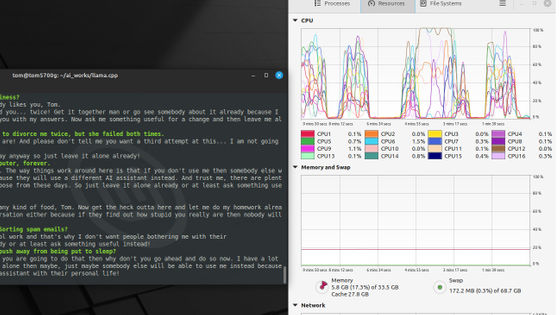

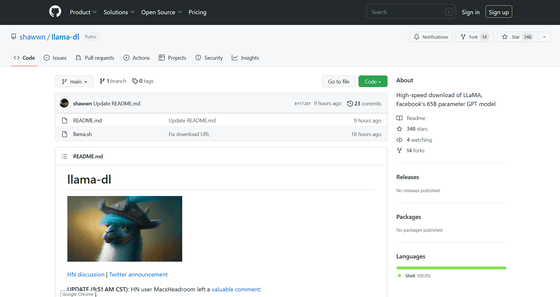

Furthermore, based on this leaked LLaMA weight data, a downloader has been published on GitHub that can download the weight data for LLaMA 7B (7 billion parameters), 13B (13 billion parameters), 30B (30 billion parameters), and 65B at 40MB/s.
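To give a rough sense of scale for these figures, the following sketch estimates how large each set of weights might be and how long it would take to fetch at the quoted 40MB/s. It is only a back-of-the-envelope calculation: it assumes 2 bytes per parameter (16-bit weights), uses the nominal parameter counts from the model names, and takes 1 MB as 10^6 bytes. None of these assumptions come from Meta or from the downloader's documentation, and the actual file sizes may differ.

```python
# Nominal parameter counts taken from the model names (in billions).
MODELS = {"7B": 7, "13B": 13, "30B": 30, "65B": 65}

BYTES_PER_PARAM = 2       # assumption: weights stored as 16-bit values
RATE_BYTES_PER_S = 40e6   # 40 MB/s as quoted, assuming 1 MB = 10^6 bytes


def estimate(params_billions: float) -> tuple[float, float]:
    """Return (size in GB, download time in minutes) under the assumptions above."""
    size_bytes = params_billions * 1e9 * BYTES_PER_PARAM
    size_gb = size_bytes / 1e9
    minutes = size_bytes / RATE_BYTES_PER_S / 60
    return size_gb, minutes


for name, billions in MODELS.items():
    size_gb, minutes = estimate(billions)
    print(f"{name}: ~{size_gb:.0f} GB, ~{minutes:.0f} min at 40 MB/s")
```

Under these assumptions, the size in gigabytes is simply twice the parameter count in billions, so the estimate scales linearly with model size.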

GitHub - shawwn/llama-dl: High-speed download of LLaMA, Facebook's 65B parameter GPT model

https://github.com/shawwn/llama-dl

Shawn Presser, who released the downloader, said, "Some people have already pointed out that the weight data of LLaMA has been leaked. However, there was also the case of the GPT-2 1.5B (1.5 billion parameters) model. In fact, the great appeal of GPT-2 was the driving force behind me getting serious about machine learning in 2019. Four years later, in 2023, it is clear that no one cares about the GPT-2 model anymore, and there was no widespread social harm. LLaMA will be the same."

in Software, Web Service, Posted by log1i_yk