``llama.cpp'', which made Meta's large-scale language model ``LLaMA'' executable on a Mac with M1, can be executed with less than 6 GB of memory usage by updating

The large-scale language model ``

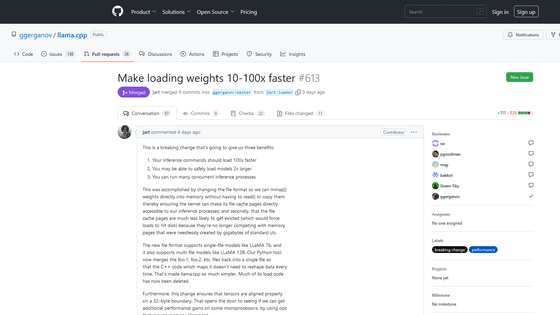

Make loading weights 10-100x faster by jart Pull Request #613 ggerganov/llama.cpp GitHub

https://github.com/ggerganov/llama.cpp/pull/613

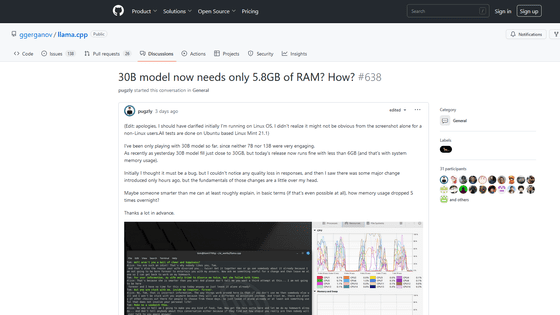

30B model now needs only 5.8GB of RAM? How? · ggerganov/llama.cpp · Discussion #638 · GitHub

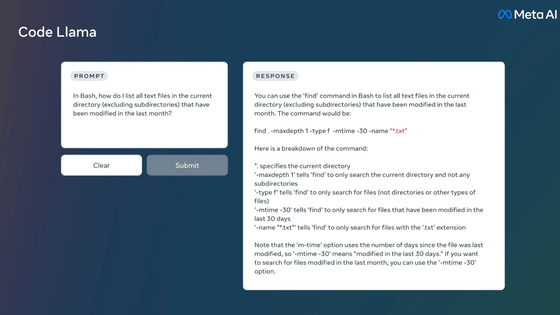

LLaMA is a large-scale language model published by Meta AI Research, Meta's AI research organization. The number of parameters, which indicates the size of a large-scale language model, is 7 billion to 65 billion, and the benchmark test result of LLaMA's 13B (13 billion parameters) model was reported to be comparable to GPT-3 with 175 billion parameters. I'm here.

In addition, since LLaMA works without problems even with a single GPU, it was suggested that interactive AI such as ChatGPT could be run even in a consumer-level hardware environment.

Meta announces large-scale language model 'LLaMA', can operate with a single GPU while having performance comparable to GPT-3 - GIGAZINE

After that, Mr. Gerganov proceeded with the development of the project `` llama.cpp '' that runs inference using LLaMA on macOS, Linux, and Windows, and reported that he succeeded in running LLaMA on the M1-equipped MacBook Pro. According to Mr. Gerganov, LLaMA's 13B model can be operated on an M1-equipped Mac at a processing speed of 10 tokens per second.

It is shown that Meta's 'LLaMA', a rival of GPT-3, can be run on M1-equipped Mac, and large-scale language models can be run on ordinary consumer hardware - GIGAZINE

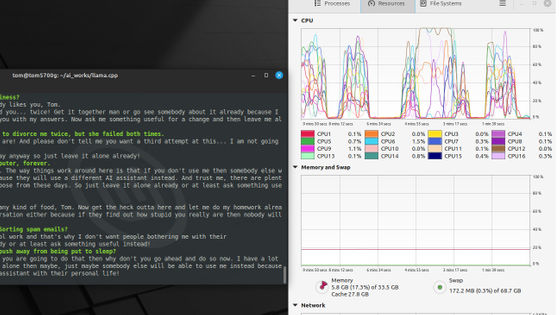

Meanwhile, on March 31, 2023, Mr. Tunney reported that he had updated the C++ source code of llama.cpp. As a result of Mr. Tunney's update, the memory usage when running LLaMA has been greatly reduced, and the memory usage of LLaMA's 13B model, which previously required 30GB, is now only 5.8GB including system memory usage. It is reported to work fine.

Yesterday my changes to the LLaMA C++ file format were approved. Here's how folks in the community have been reacting to my work. https://t.co/cLzg19n81f pic.twitter.com/5gcrVp8jvn

— Justine Tunney (@JustineTunney) March 31, 2023

Reporter pugzly said, ``At first, I thought it was a bug, but I don't feel any deterioration in the quality of the response. I can't understand,' he said, unable to hide his surprise.

According to Tunney, loading weights using mmap is implemented in llama.cpp so that only the weights needed for actual inference are loaded into the user's memory, resulting in less memory usage. The amount has been achieved.

Mr. Tunney said, 'With this change, inference commands can be loaded up to 100 times faster than before, and it may be possible to stably load more than twice as many models. Furthermore, many inference processes can be performed simultaneously. You can,” he emphasizes.

On the other hand, Mr. Tunney said, ``My theory may be wrong and this may be just a bug. I don't know,' he said .

Hacker News says, 'The load time performance boost from the memory usage phenomenon is a huge improvement in the usability of llama.cpp. But it's enough to explain why Tunney was able to reduce memory usage.' There is no convincing theory yet, ”he wrote , asking the user to calm down.

Related Posts: