AI search engine startup Phind announces flagship model 'Phind-405B'

Phind, an AI search engine that claims to

Introducing Phind-405B and faster, high quality AI answers for everyone

https://www.phind.com/blog/introducing-phind-405b-and-better-faster-searches

Introducing Phind-405B, our new flagship model!

— Phind (@phindsearch) September 5, 2024

Phind-405B scores 92% on HumanEval, matching Claude 3.5 Sonnet. We're particularly happy with its performance on real-world tasks, particularly when it comes to designing and implementing web apps.

Our focus on technical topics…

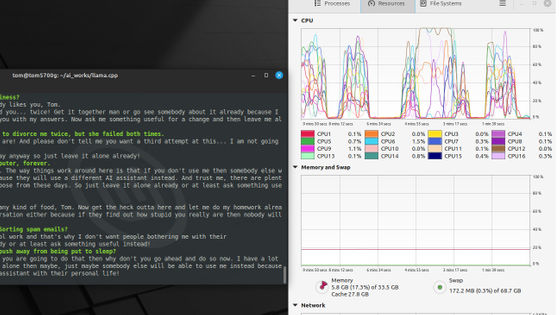

According to Phind, Phind-405B is based on Meta's Llama 3.1 405B and supports a context length of up to 128K tokens, with a 32K context window available at launch.

It scored a respectable 92% on HumanEval, a benchmark that measures code-generation ability, putting it on par with Anthropic's Claude 3.5 Sonnet.
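HumanEval consists of 164 hand-written Python programming problems, each checked by unit tests, and results are commonly reported with the pass@k metric. The article does not say exactly how Phind computed its 92%, so the minimal sketch below assumes the usual pass@1 setting with one sample per problem. The function names `pass_at_k` and `benchmark_score` are illustrative, not part of any official harness. Under that assumption, 92% corresponds to roughly 151 of the 164 problems solved.

```python
from math import comb

# HumanEval contains 164 hand-written Python programming problems.
HUMANEVAL_PROBLEMS = 164


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: number of samples generated for the problem
    c: how many of those samples pass the problem's unit tests
    k: how many samples the metric is allowed to "try"
    """
    if n - c < k:
        # Every possible set of k samples contains at least one passing sample.
        return 1.0
    # 1 minus the probability that k samples drawn without replacement all fail.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(per_problem_correct: list[int], n: int, k: int = 1) -> float:
    """Average pass@k over every problem in the benchmark."""
    return sum(pass_at_k(n, c, k) for c in per_problem_correct) / len(per_problem_correct)


# Assumption: one sample per problem (n = 1, k = 1), so pass@1 is the fraction solved.
solved = round(0.92 * HUMANEVAL_PROBLEMS)
print(solved)

# Hypothetical per-problem results: 1 = the single sample passed, 0 = it failed.
results = [1] * solved + [0] * (HUMANEVAL_PROBLEMS - solved)
print(f"pass@1 = {benchmark_score(results, n=1):.3f}")
```

With more than one sample per problem, `pass_at_k` averages over every way of picking k samples, which is why it is preferred to simply counting whether any of k samples passed.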

According to Phind, Phind-405B performs especially well at designing and implementing web applications.

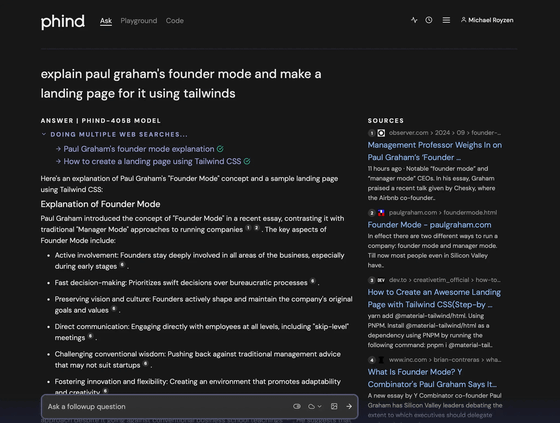

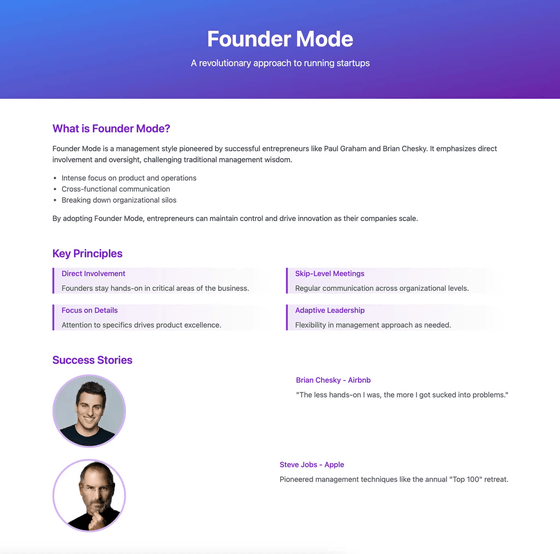

As an example, Phind asked Phind-405B to create a landing page for Paul Graham's essay 'Founder Mode.' Phind-405B gathered information from various sites and then built a landing page summarizing it.

Phind-405B was trained with FP8 mixed precision on 256 H100 GPUs using the DeepSpeed and MS-AMP libraries. According to Phind, FP8 mixed precision training shows little quality degradation compared with conventional BF16 training while reducing memory usage by 40%.
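Part of the memory saving comes from the number format itself: BF16 stores each value in 2 bytes, FP8 in 1. The rough sketch below computes only the storage needed for the model weights, assuming about 405 billion parameters and, as a labeled assumption, that the weights are evenly sharded across all 256 GPUs (for example with DeepSpeed ZeRO stage 3; the article does not say how Phind partitioned the model). The helper `weight_gb` is hypothetical.

```python
# Bytes used to store one value in each number format.
BYTES_PER_ELEMENT = {"fp32": 4, "bf16": 2, "fp8": 1}

PARAMS = 405e9  # approximate parameter count of Llama 3.1 405B
NUM_GPUS = 256  # H100 GPUs used for training, per the article


def weight_gb(params: float, fmt: str) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_ELEMENT[fmt] / 1e9


for fmt in ("bf16", "fp8"):
    total = weight_gb(PARAMS, fmt)
    # Assumption: weights are split evenly across every GPU.
    print(f"{fmt}: {total:.0f} GB total, {total / NUM_GPUS:.2f} GB per GPU")
```

This prints 810 GB (3.16 GB per GPU) for BF16 and 405 GB (1.58 GB per GPU) for FP8. Weights alone halve, but mixed-precision schemes typically keep parts of the training state, such as optimizer states, in higher precision, so an end-to-end saving below 50% is plausible. The 40% figure is Phind's own measurement and is not derived here.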

Phind also announced an updated model for Phind Instant, aimed at making its AI search engine faster. AI-based searches take longer to display results than traditional search engines such as Google, so the company developed a new model based on Meta Llama 3.1 8B and trained on the same dataset as Phind-405B. It can generate up to 350 tokens per second, improving the user experience.
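To put 350 tokens per second in perspective, the short sketch below estimates how long an answer takes to stream at a constant decoding rate. It treats 350 tokens per second as a steady rate even though Phind describes it as a peak ("up to"), ignores the time before the first token appears, and uses a slower 50 tokens per second purely as a hypothetical point of comparison, not as a measured figure for any model.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float = 350.0) -> float:
    """Time to stream num_tokens at a constant rate, ignoring time-to-first-token."""
    return num_tokens / tokens_per_second


HYPOTHETICAL_SLOW_RATE = 50.0  # illustrative comparison only

for answer_len in (350, 700, 1400):  # hypothetical answer lengths in tokens
    fast = generation_seconds(answer_len)
    slow = generation_seconds(answer_len, HYPOTHETICAL_SLOW_RATE)
    print(f"{answer_len} tokens: {fast:.1f} s at 350 tok/s vs {slow:.1f} s at 50 tok/s")
```

At 350 tokens per second, a 700-token answer finishes streaming in about 2 seconds, compared with 14 seconds at the hypothetical slower rate.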


in Note, Posted by logc_nt