Engineer succeeds in running 'LLaMA', a chat AI model comparable to GPT-3, on smartphones such as the iPhone and Pixel

On February 24, 2023, Meta AI Research, Meta's AI research organization, announced the large-scale language model 'LLaMA (Large Language Model Meta AI)', which can run on a single GPU thanks to its small number of parameters. Engineer anishmaxxing has now reportedly succeeded in running LLaMA on smartphones such as the iPhone and Pixel.

anishmaxxing (@thiteanish) on Twitter: https://twitter.com/thiteanish

LLaMA, published by Meta AI Research, was trained on publicly available datasets such as Wikipedia, Common Crawl, and C4. By contrast, language models such as OpenAI's 'GPT-3' were trained partly on private datasets. Because it relies only on public data, LLaMA is compatible with open-source release and its training is reproducible.

Furthermore, GPT-3 has 175 billion parameters, while LLaMA has only 7 billion to 65 billion. Even so, on benchmarks such as BoolQ and PIQA, LLaMA outperforms GPT-3 on some tasks.

The number of parameters is the number of variables a machine learning model uses to make predictions and classifications from data, and it is an indicator that affects the model's performance. The more parameters a model has, the more reliably it can handle complex tasks, but the more computing power it requires. LLaMA, on the other hand, has relatively few parameters yet can handle tasks on par with large language models such as GPT-3, so it can run on consumer-level hardware.
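To make the link between parameter count and hardware requirements concrete, the rough estimate below computes how much memory a model's weights alone occupy. It is a back-of-the-envelope sketch that assumes memory is roughly parameter count times bytes per parameter; it ignores activations and runtime overhead, and the precision table and function name are illustrative assumptions, not figures published by Meta.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: memory ~= parameter count x bytes per parameter. Activations,
# caches and runtime overhead are ignored, so real usage is higher.

PARAM_COUNTS = {
    "LLaMA-7B": 7e9,
    "LLaMA-65B": 65e9,
    "GPT-3 (175B)": 175e9,
}

# Bytes per parameter at common numeric precisions.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the approximate size of the weights in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, n in PARAM_COUNTS.items():
        sizes = ", ".join(
            f"{p}: {weight_memory_gb(n, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name:>13} -> {sizes}")
```

Under these assumptions, even the smallest 7B model needs about 14 GB at 16-bit precision but only about 3.5 GB at 4 bits, which helps explain why reducing precision matters for running LLaMA on consumer devices.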

Meta announces large-scale language model 'LLaMA', can operate with a single GPU while having performance comparable to GPT-3 - GIGAZINE

Thanks to its relatively small parameter count of 7 billion to 65 billion, LLaMA has also been reported to run on Macs with Apple silicon such as the M1.

Meta's GPT-3 rival 'LLaMA' shown to run on M1-equipped Macs, demonstrating that large language models can run on ordinary consumer hardware - GIGAZINE

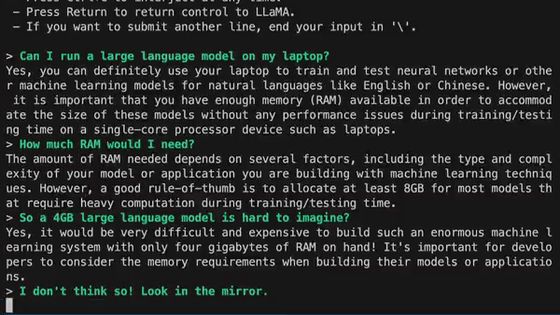

On March 14, 2023, engineer anishmaxxing reported that he had succeeded in running LLaMA on iOS using the web application framework Next.js.

New Update!

—anishmaxxing (@thiteanish) March 14, 2023

I found 5 ways to not get LLaMA working on iOS

This NextJS app was a by-product from one of those 5 ways: pic.twitter.com/HyqSLiBgbg

anishmaxxing has also succeeded in running LLaMA on the Pixel 6. "I've been waiting for this," he said of getting LLaMA to work on a smartphone.

@ggerganov's LLaMA works on a Pixel 6!

— anishmaxxing (@thiteanish) March 13, 2023

LLaMAs been waiting for this, and so have I pic.twitter.com/JjEhdzJ2B9

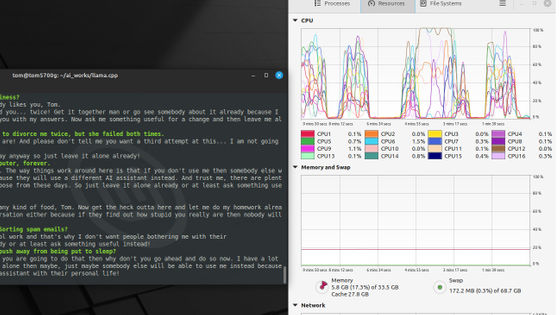

However, anishmaxxing notes that the LLaMA build running on the Pixel 6 "is not even optimized for the Pixel." "I quantized it on my Mac and transferred the weights over because of storage space issues on my phone," he said.

No, it's not even optimized for the pixels.

— anishmaxxing (@thiteanish) March 13, 2023

I quantized it on my mac and transferred the weights over due to space issues on my phone
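anishmaxxing says he quantized the weights on his Mac before copying them to the phone, but the posts do not say which scheme he used. As a hedged illustration of what quantization does, the sketch below implements a simplified symmetric block-wise 4-bit quantizer: each block of weights shares one floating-point scale, and each weight is stored as a small integer. It is similar in spirit to the 4-bit formats in ggerganov's llama.cpp (presumably the project referenced in the tweet), but it is not that project's actual format or code; the block size of 32, the [-7, 7] range, and all function names are assumptions for illustration.

```python
# Simplified, illustrative block-wise 4-bit quantization.
# NOT llama.cpp's actual on-disk format; block size and value range are assumptions.
import math
from typing import List, Tuple

BLOCK_SIZE = 32  # assumed number of weights that share one scale factor


def quantize_block(block: List[float]) -> Tuple[float, List[int]]:
    """Map floats to signed integers in [-7, 7] using one shared scale."""
    amax = max(abs(x) for x in block)
    scale = amax / 7 if amax > 0 else 1.0
    q = [max(-7, min(7, round(x / scale))) for x in block]
    return scale, q


def dequantize_block(scale: float, q: List[int]) -> List[float]:
    """Recover approximate float weights from a quantized block."""
    return [scale * v for v in q]


def quantize(weights: List[float]) -> List[Tuple[float, List[int]]]:
    """Split weights into blocks and quantize each block independently."""
    return [quantize_block(weights[i:i + BLOCK_SIZE])
            for i in range(0, len(weights), BLOCK_SIZE)]


def dequantize(blocks: List[Tuple[float, List[int]]]) -> List[float]:
    out: List[float] = []
    for scale, q in blocks:
        out.extend(dequantize_block(scale, q))
    return out


def pack_nibbles(q: List[int]) -> bytes:
    """Pack two 4-bit values per byte (each offset by 8 into the range 1..15)."""
    if len(q) % 2:
        q = q + [0]
    return bytes(((a + 8) & 0xF) | (((b + 8) & 0xF) << 4)
                 for a, b in zip(q[0::2], q[1::2]))


if __name__ == "__main__":
    # Deterministic stand-in for a row of model weights (hypothetical data).
    weights = [math.sin(i * 0.37) * 0.05 for i in range(64)]
    blocks = quantize(weights)
    restored = dequantize(blocks)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    # 4 extra bytes per block for the fp32 scale.
    packed = sum(len(pack_nibbles(q)) + 4 for _, q in blocks)
    print(f"fp32 bytes: {len(weights) * 4}, 4-bit bytes: {packed}, "
          f"max error: {max_err:.5f}")
```

In this sketch a block of 32 fp32 weights (128 bytes) shrinks to 16 bytes of packed 4-bit values plus a 4-byte scale, at the cost of a rounding error of at most half the block's scale per weight. That trade of some precision for a much smaller file is why quantized weights can fit on a phone with limited storage.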

anishmaxxing also commented on how slowly LLaMA generated text on the Pixel: "Generation is very slow, and it seems to be due to load time. It might also be because I'm running it on Termux," a terminal emulator app that provides a Linux environment on Android.

Generations are very slow, seems it's due to load time.

— anishmaxxing (@thiteanish) March 13, 2023

Might be cause I'm running it on Termux
