'GPT-3', the latest version of the text-generation language model once deemed 'too dangerous' because its output was too convincing, has been released

OpenAI, an artificial intelligence research organization, has released 'GPT-3', the successor to its language model 'GPT-2', which can generate text so accurate that it is indistinguishable from text written by humans.

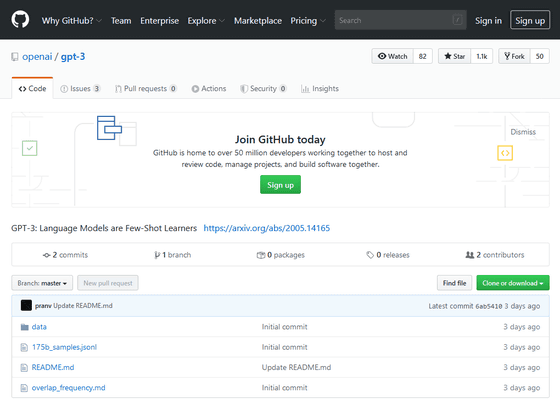

GitHub - openai/gpt-3: GPT-3: Language Models are Few-Shot Learners

[2005.14165] Language Models are Few-Shot Learners

OpenAI debuts gigantic GPT-3 language model with 175 billion parameters

https://venturebeat.com/2020/05/29/openai-debuts-gigantic-gpt-3-language-model-with-175-billion-parameters/

'GPT-2', released in 2019, was considered 'too dangerous' even by its own developers because its output was so good, and the release of the full trained model was postponed.

AI writing tool generates text so convincingly that its developers deem it 'too dangerous' - GIGAZINE

'GPT-2' had 1.5 billion parameters, whereas the new 'GPT-3' has 175 billion, more than 100 times as many.

However, GPT-3 is not the best language model for every situation. Although it achieves state-of-the-art results on natural language processing tasks such as translation, news article generation, and answering university entrance exam questions, its performance on commonsense reasoning may be unsatisfactory, and it appears to fall short on word-in-context analysis and on answering junior high and high school exam questions.

The paper reports that GPT-3 was used to generate news articles that were indistinguishable from those written by humans.


in Note, Posted by logc_nt