Results of AI benchmark test 'MLPerf Inference v4.0' with newly added 'Llama 2 70B' and 'Stable Diffusion XL' announced

MLCommons, an industry consortium that conducts performance evaluations of neural networks, has designed a benchmark test ``MLPerf Inference'' that can measure the AI processing performance of hardware in various scenarios. The latest 'MLPerf Inference v4.0' newly added Meta's large-scale language model '

New MLPerf Inference Benchmark Results Highlight The Rapid Growth of Generative AI Models - MLCommons

https://mlcommons.org/2024/03/mlperf-inference-v4/

Nvidia Tops Llama 2, Stable Diffusion Speed Trials - IEEE Spectrum

https://spectrum.ieee.org/ai-benchmark-mlperf-llama-stablediffusion

NVIDIA Hopper Leaps Ahead in Generative AI at MLPerf | NVIDIA Blog

https://blogs.nvidia.com/blog/tensorrt-llm-inference-mlperf/

MLPerf Inference v4.0: NVIDIA Reigns Supreme, Intel Shows Impressive Performance Gains

https://www.maginative.com/article/mlperf-inference-v4-0-nvidia-reigns-supreme-intel-shows-impressive-generative-ai-performance-gains/

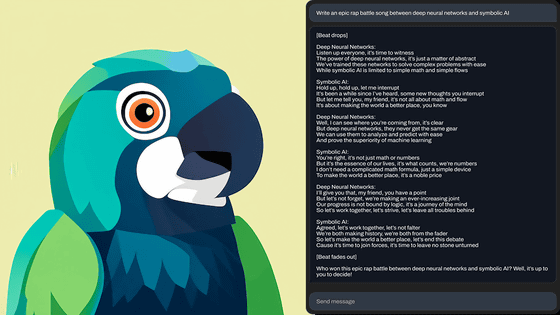

In order to respond to the ever-changing environment of generated AI, MLCommons has set up a task force to consider whether to add new models to the benchmark test ``MLPerf Inference v4.0''. After careful consideration of factors such as model licensing and ease of deployment, we decided to include the new Llama 2 70B and Stable Diffusion XL in the benchmark.

Llama 2 70B is Meta's large-scale language model and was chosen as the indicator for a 'bigger' large-scale language model with 70 billion parameters. Llama 2 70B has orders of magnitude more parameters than the previous GPT-J introduced in MLPerf Inference v3.1, and correspondingly improved accuracy. Due to the large model size of Llama 2 70B, it requires a different class of hardware than smaller large language models. For this reason, MLCommons explains that including the Llama 2 70B in the performance indicators will make the benchmark suitable for high-end systems.

Stable Diffusion XL, on the other hand, is an image generation AI with 2.6 billion parameters that is used to generate highly accurate images via text-based prompts. By generating many images, MLPerf Inference v4.0 can calculate metrics such as latency and throughput to more accurately understand overall performance.

'The release of MLPerf Inference v4.0 marks the full adoption of generative AI in our benchmark suite, with one-third of our benchmarks featuring generative AI workloads,' said Miro Hodak, co-chair of MLPerf Inference. 'We ensure that the MLPerf Inference benchmark suite covers cutting-edge areas, including large-scale language models, large and small, and text-to-image generation.'

More than 8,500 performance results were submitted to MLPerf Inference v4.0 from 23 organizations including ASUS, Dell, Fujitsu, Google, Intel, Lenovo, NVIDIA, Oracle, Qualcomm, etc. Additionally, four companies, Dell, Fujitsu, NVIDIA, and Qualcomm, are reported to have submitted power figures focused on data centers for MLPerf Inference v4.0.

The MLPerf Inference v4.0 benchmark is divided into the 'Edge' system, which is intended for use on devices around the world, and the 'Datacenter' system, which is intended for use in data centers. You can check the results for 'Edge' and 'Datacenter' on the following pages.

Benchmark MLPerf Inference: Edge | MLCommons V3.1 Results

Benchmark MLPerf Inference: Datacenter | MLCommons V3.1

https://mlcommons.org/benchmarks/inference-datacenter/

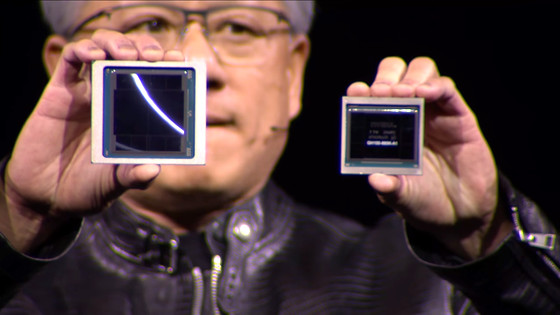

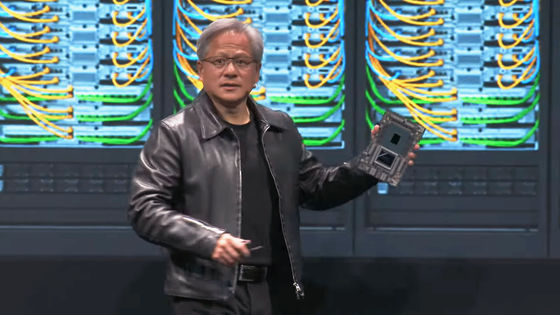

The top performer in the new generative AI category was an NVIDIA system combining eight NVIDIA H200s . The H200 system achieved up to 31,000 tokens/second with Llama 2 70B and 13.8 queries/second with Stable Diffusion XL.

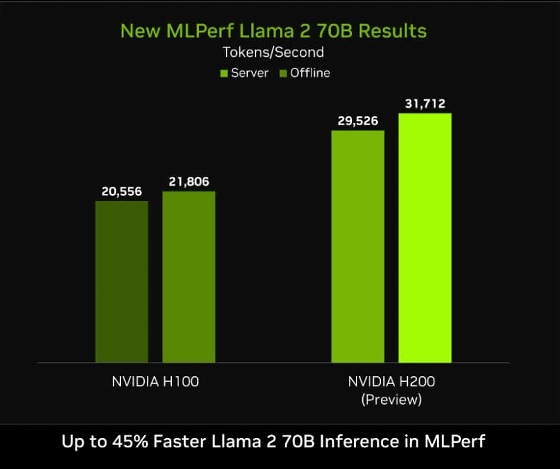

Below is a graph comparing the Llama 2 70B benchmark results of MLPerf Inference v4.0 between H100 and H200. We see that H200 performs up to 45% better. By using MLPerf Inference, you can easily compare the AI processing performance of different hardware like this.

Related Posts:

in Software, Web Service, Posted by log1h_ik