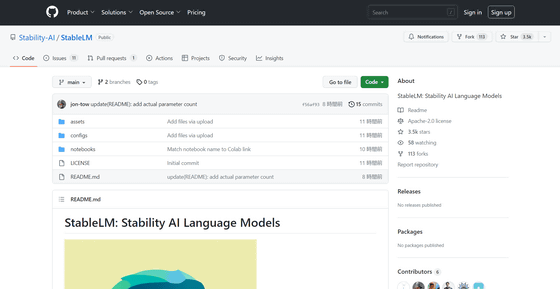

Stability AI releases 'StableLM', an open-source large language model available for commercial use

Stability AI, the developer of the image generation AI 'Stable Diffusion', released the open-source large language model 'StableLM' on April 19, 2023. The alpha version includes models with 3 billion and 7 billion parameters, and models with 15 billion to 65 billion parameters are planned for the future. StableLM is also available for commercial use under the Creative Commons BY-SA-4.0 license.

First Release of Stability AI Language Model 'StableLM Suite' - Stability AI

https://ja.stability.ai/blog/stability-ai-launches-the-first-of-its-stablelm-suite-of-language-models

GitHub - Stability-AI/StableLM: StableLM: Stability AI Language Models

https://github.com/stability-AI/stableLM/

StableLM is reportedly trained on a new dataset containing 1.5 trillion tokens, roughly three times the size of The Pile, a widely used dataset for training language models. The models released so far have 3 billion and 7 billion parameters.

Stability AI has built StableLM around the principles of 'transparency', 'accessibility', and 'supporting users', and publishes the model under the Creative Commons BY-SA-4.0 license, which permits commercial use.

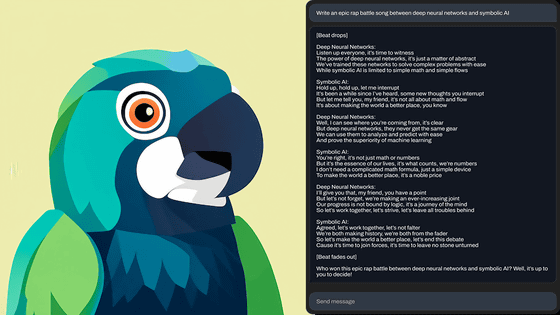

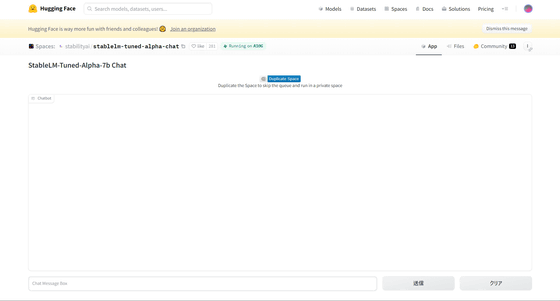

A demo of StableLM is available on Hugging Face, a hosting platform for AI models, at the link below.

Stablelm Tuned Alpha Chat - a Hugging Face Space by stabilityai

https://huggingface.co/spaces/stabilityai/stablelm-tuned-alpha-chat

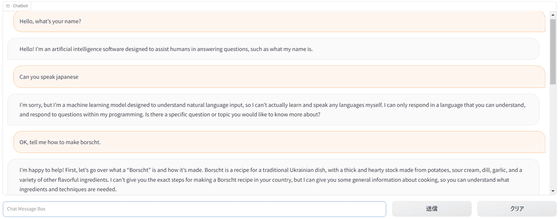

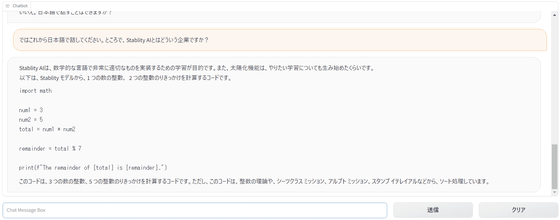

When you actually chat with StableLM, it works almost exclusively in English. At first glance the conversation seems to hold together, but the replies feel somewhat inconsistent.

When I asked it about Stability AI in Japanese, the conversation took an unexpected turn: it suddenly started writing program code and explaining it.

At the time of writing, StableLM mainly supports English, but the official Stability AI Japan Twitter account tweeted, 'I'll do my best with the Japanese version too!', so official Japanese support can be expected in the future.

We have released the open large language model 'StableLM'! English is my main language right now, but I'll do my best with the Japanese version too! https://t.co/y0Lah1XQJS #LLM #GenerativeAI

— Stability AI Japan Official (@StabilityAI_JP) April 19, 2023

In addition, results of benchmarking StableLM on MMLU, a benchmark designed to measure the multitask performance of language models, were posted on the social news site Hacker News. According to those results, the 3-billion-parameter StableLM model achieved an average accuracy of 25.6%, lower than even the 80-million-parameter version of Flan-T5, the open-source language model developed by Google. The 3-billion-parameter Flan-T5 model, with the same parameter count as StableLM, averages 49.3%, so StableLM's accuracy is quite low. However, unlike StableLM, Flan-T5 is a fine-tuned model, and some have pointed out that the benchmark scores should not be compared directly.
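The scores above are easier to read against MMLU's random-guessing baseline: every MMLU question is multiple choice with four options, so picking an answer uniformly at random scores about 25% in expectation. The minimal Python sketch below shows how accuracy on such a benchmark is computed and places the two averages quoted in this article next to that baseline. The helper names (`accuracy`, `margin_over_chance`) are hypothetical illustrations, not part of any official MMLU evaluation harness.

```python
# Average MMLU accuracies quoted in the article (percent).
REPORTED = {
    "StableLM 3B (alpha)": 25.6,
    "Flan-T5 3B": 49.3,
}

# MMLU questions have four answer options, so uniform random guessing
# has an expected accuracy of 1/4.
NUM_CHOICES = 4
CHANCE = 100.0 / NUM_CHOICES  # 25.0 percent


def accuracy(predicted: list[str], gold: list[str]) -> float:
    """Fraction of questions whose predicted option letter matches the answer key."""
    if not gold or len(predicted) != len(gold):
        raise ValueError("predictions and answers must be non-empty and of equal length")
    correct = sum(p == g for p, g in zip(predicted, gold))
    return correct / len(gold)


def margin_over_chance(accuracy_pct: float) -> float:
    """Percentage points above (positive) or below (negative) random guessing."""
    return accuracy_pct - CHANCE


if __name__ == "__main__":
    for model, acc in REPORTED.items():
        print(f"{model}: {acc:.1f}% ({margin_over_chance(acc):+.1f} points vs. chance)")
```

Run as a script, this prints a margin of about +0.6 points for StableLM 3B and about +24.3 points for Flan-T5 3B, which is why a 25.6% average reads as close to guessing. As the article notes, Flan-T5 is fine-tuned, so the gap is not a like-for-like comparison.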


in Software, Review, Web Application, Posted by log1i_yk