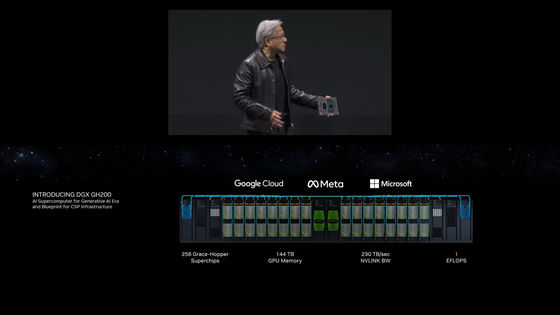

NVIDIA announces 'DGX GH200', a large-scale supercomputer for generative AI with 1 ExaFLOPS of processing capacity and 144 TB of memory

NVIDIA CEO Jensen Huang will be presenting large-scale AI such as generative AI training and large-scale language model workloads at

DGX GH200 for Large Memory AI Supercomputer | NVIDIA

https://www.nvidia.com/en-us/data-center/dgx-gh200/

Announcing NVIDIA DGX GH200: The First 100 Terabyte GPU Memory System | NVIDIA Technical Blog

https://developer.nvidia.com/blog/announcing-nvidia-dgx-gh200-first-100-terabyte-gpu-memory-system/

Nvidia Unveils DGX GH200 Supercomputer, Grace Hopper Superchips in Production | Tom's Hardware

https://www.tomshardware.com/news/nvidia-unveils-dgx-gh200-supercomputer-and-mgx-systems-grace-hopper-superchips-in-production

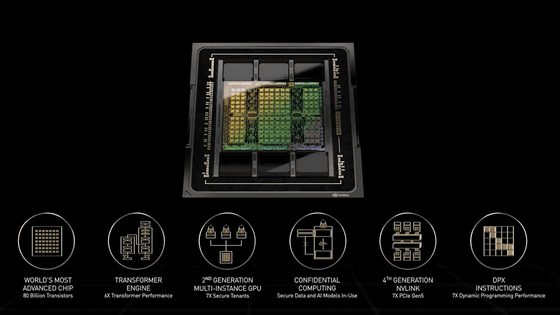

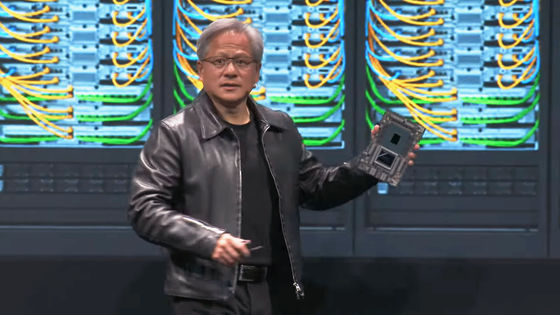

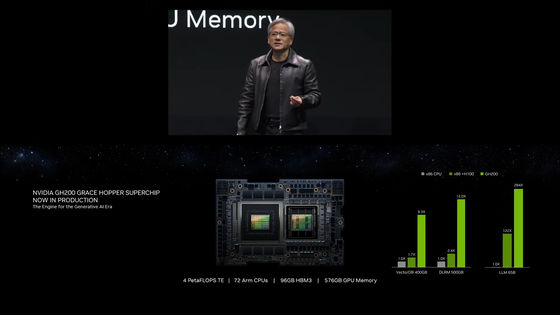

First, CEO Huang announced that the NVIDIA GH200 Grace Hopper Superchip has entered production. The superchip targets AI and high-performance computing (HPC) applications and combines NVIDIA's own Arm-based CPU 'Grace' with its AI-oriented GPU 'Hopper'.

This NVIDIA GH200 Grace Hopper Superchip connects Grace and Hopper with

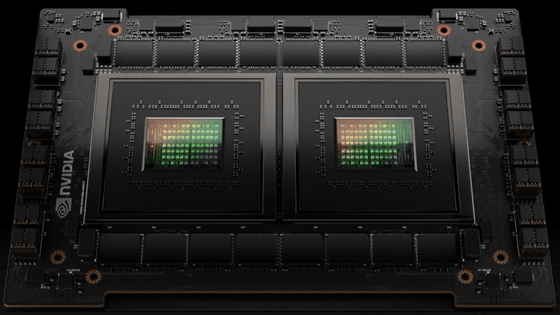

'DGX GH200' is a platform that combines this NVIDIA GH200 Grace Hopper Superchip and

Ian Buck, vice president of hyperscale and HPC at NVIDIA, said, "When running computations on huge models such as generative AI or large language models, memory capacity has already reached its limit. AI researchers need memory capacity of terabytes or more, and DGX GH200 meets that need by allowing up to 256 Hopper GPUs to be integrated and run as a single GPU," stressing that the DGX GH200 will play a major role in eliminating the bottlenecks of generative AI and large language models.

Google Cloud, Meta and Microsoft are set to get early access to the DGX GH200 to explore its capabilities for generative AI workloads.

"Building advanced generative models requires an innovative approach to AI infrastructure," said Mark Lohmeyer, vice president of compute at Google Cloud. "Large shared memory eliminates a major bottleneck that can occur in large-scale AI workloads, and we look forward to exploring its capabilities for Google Cloud."

"NVIDIA's Grace Hopper design allows us to explore new approaches to solving our researchers' biggest challenges," said Alexis Bjorlin, vice president of infrastructure, AI systems and accelerated platforms at Meta. "The ability of the DGX GH200 to handle terabyte-sized datasets will help developers conduct larger, faster and more advanced research."

In addition, NVIDIA is planning a supercomputer, 'NVIDIA Helios', that combines four DGX GH200 systems. In NVIDIA Helios, the DGX GH200 systems are interconnected by the NVIDIA Quantum-2 InfiniBand platform, so a total of 1,024 NVIDIA GH200 Grace Hopper Superchips can be used as one unit. NVIDIA Helios is scheduled to come online by the end of 2023.
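The figures quoted above can be cross-checked with a little arithmetic. The following is only a rough sketch: it assumes one Hopper GPU per superchip, a fully populated 256-superchip DGX GH200, and binary units (1 TB = 1024 GB). The constant and function names are made up for illustration and do not come from NVIDIA.

```python
# Back-of-the-envelope check of the numbers quoted in the article.
# All names below are hypothetical and exist only for this illustration.

SUPERCHIPS_PER_DGX_GH200 = 256    # "up to 256 Hopper GPUs"; assumes one GPU per superchip
MEMORY_PER_DGX_GH200_TB = 144     # memory figure from the headline
DGX_GH200_PER_HELIOS = 4          # Helios combines four DGX GH200 systems
GB_PER_TB = 1024                  # assumption: binary units


def helios_superchip_count() -> int:
    """Total superchips in Helios if every DGX GH200 is fully populated."""
    return SUPERCHIPS_PER_DGX_GH200 * DGX_GH200_PER_HELIOS


def memory_per_superchip_gb() -> float:
    """Average share of the 144 TB per superchip, in GB."""
    return MEMORY_PER_DGX_GH200_TB * GB_PER_TB / SUPERCHIPS_PER_DGX_GH200


def helios_total_memory_tb() -> int:
    """Simple sum of memory across the four systems. The article does not
    say whether this is addressable as a single pool."""
    return MEMORY_PER_DGX_GH200_TB * DGX_GH200_PER_HELIOS


if __name__ == "__main__":
    print("Superchips in Helios:", helios_superchip_count())
    print("Memory per superchip (GB):", memory_per_superchip_gb())
    print("Summed Helios memory (TB):", helios_total_memory_tb())
```

Under these assumptions the superchip count matches the 1,024 given in the article, and each superchip accounts for an average of 576 GB of the 144 TB total.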

You can see the full story of CEO Huang's keynote speech at COMPUTEX TAIPEI 2023 below.

NVIDIA Keynote at COMPUTEX 2023 - YouTube


in Hardware, Posted by log1i_yk