Google announces the 5th generation model 'TPU v5e' of the AI specialized processor TPU, up to 2 times the training performance per dollar and up to 2.5 times the inference performance compared to the previous model

On August 30, 2023, Google announced `` TPU v5e '', the fifth generation model of the ``

Announcing Cloud TPU v5e and A3 GPUs in GA | Google Cloud Blog

https://cloud.google.com/blog/products/compute/announcing-cloud-tpu-v5e-and-a3-gpus-in-ga/

Inside a Google Cloud TPU Data Center-YouTube

Google Cloud and NVIDIA Expand Partnership to Advance AI Computing, Software and Services | NVIDIA Newsroom

https://nvidianews.nvidia.com/news/google-cloud-and-nvidia-expand-partnership-to-advance-ai-computing-software-and-services?=&linkId=100000216031272

Google Cloud announces the 5th generation of its custom TPUs | TechCrunch

https://techcrunch.com/2023/08/29/google-cloud-announces-the-5th-generation-of-its-custom-tpus/

Google announces new TPUs, rolls out Nvidia H100 GPUs, as it adds generative AI-focused cloud services - DCD

https://www.datacenterdynamics.com/en/news/google-announces-new-tpus-rolls-out-nvidia-h100-gpus-as-it-adds-generative-ai-focused-cloud-services/

Google Cloud unveils AI-optimized infrastructure enhancements

https://www.cloudcomputing-news.net/news/2023/aug/29/google-cloud-unveils-ai-optimised-infrastructure-enhancements/

Details on Google's AI updates to cloud infrastructure • The Register

https://www.theregister.com/2023/08/29/google_next_ai_updates/

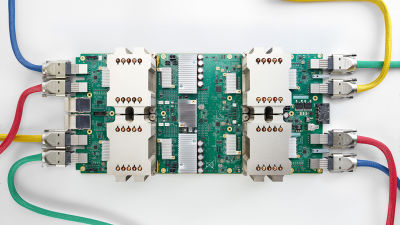

TPU v5e is a new generation TPU purpose-built for the cost-effectiveness and performance needed for medium and large-scale training and inference. Large Language Models (LLM) and generative AI models can achieve up to 2x more training performance per dollar and up to 2.5x more inference performance per dollar compared to the previous generation TPU v4 . . By using TPU v5e, more organizations can train and deploy large and complex AI models at less than half the cost of using TPU v4.

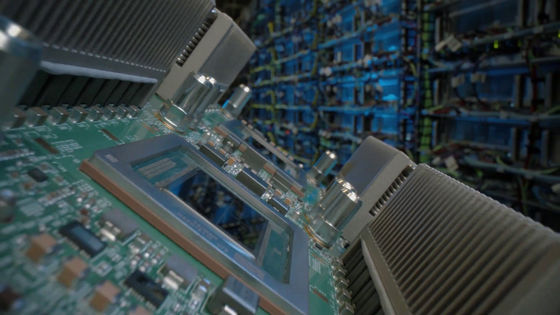

It doesn't sacrifice performance or flexibility for cost benefits, it balances performance, flexibility and efficiency, delivering up to 256 chips with over 400Tb/s aggregate bandwidth and 100 PetaOPS can be interconnected. TPU v5e is extremely versatile, supporting 8 different virtual machine (VM) configurations, ranging from 1 chip to 250+ chips in a single slice. This allows users to accommodate a wide range of LLM and generative AI model sizes.

“TPU v5e delivers up to four times the performance per dollar of comparable solutions on the market when running inference on our production ASR models,” said Domenick Donat, vice president of technology at AssemblyAI . The Google Cloud software stack is ideal for production AI workloads, taking full advantage of TPU v5e hardware purpose-built to run advanced deep learning models. 'This powerful combination of hardware and software has dramatically accelerated our ability to deliver cost-effective AI solutions to our customers.'

TPU v5e also provides built-in support for leading AI frameworks such as

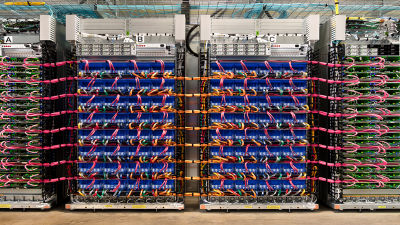

In addition, we are testing multi-slice technology to make it easier to scale up training jobs. This allows users to easily scale AI models up to tens of thousands of TPU v5e or TPU v4 beyond the boundaries of physical TPU pods. Until now, training jobs using TPUs were limited to a single slice of the TPU, and for TPU v4, the maximum slice size was limited to 3072 chips, which also limited the maximum job size. . Multislice allows developers to scale workloads up to tens of thousands of chips via chip-to-chip interconnect (ICI) within a single pod or across multiple pods on a data center network (DCN). This multi-slicing technology is also used to create Google's state-of-the-art PaLM model, which Google said was 'excited to bring it to Google Cloud customers.'

Google Cloud provides a cloud VM ' G2 VM ' equipped with NVIDIA L4 GPU to make it easier to use AI in a wide range of workloads together with NVIDIA. As a further high-end cloud VM, Google Cloud announced 'A3 VM'. The A3 VM is an NVIDIA H100 Tensor Core GPU powered model specifically built for particularly demanding generative AI workloads and LLM training. Combining NVIDIA GPUs with Google Cloud's leading infrastructure technology provides massive scale and performance, delivering 3x faster training speeds and 10x more network bandwidth than the previous generation. A3 is also capable of large-scale operations, allowing users to scale models to tens of thousands of NVIDIA H100 GPUs.

A3 VMs feature two 4th Gen Intel Xeon Scalable processors, eight NVIDIA H100 GPUs per VM, and 2TB of host memory. Built on the latest NVIDIA HGX H100 platform, the A3 VM delivers 3.6TB/s bisection bandwidth across 8 GPUs via 4th Gen NVIDIA NVLink technology. Increased network bandwidth is achieved through Titanium network adapter and NVIDIA Collective Communications Library (NCCL) optimizations. Overall, the A3 VM will be a big boost for AI innovators and companies looking to build state-of-the-art AI models.

David Holz, founder and CEO of Midjourney, which provides image-generating AI 'Midjourney', said, 'Midjourney is an independent research institute that explores new mediums of thought and expands the human imagination. Powered by Google Cloud's latest G2 and A3, the G2 was 15% more efficient than the T4, but the A3 can produce 2x faster than the A100. , users will be able to stay in a flow state while exploring and creating.'

Related Posts:

in AI, Hardware, Web Service, Posted by logu_ii