Google's new machine learning system 'Cloud TPU Pod' is 200 times faster than NVIDIA Tesla V100

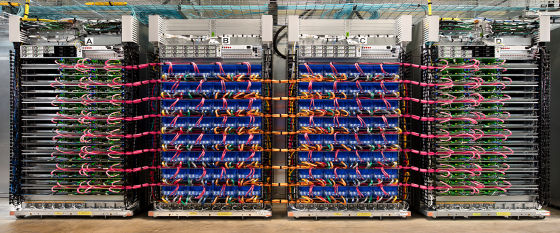

Google has begun offering "TPU Pod" in the cloud, a machine that connects TPUs, the machine learning processors Google develops in-house, over the Google data center network. Google says that TPU Pods dramatically reduce the time and cost of machine learning.

Now you can train TensorFlow machine learning models faster and at lower cost on Cloud TPU Pods | Google Cloud Blog

https://cloud.google.com/blog/products/ai-machine-learning/now-you-can-train-ml-models-faster-and-lower-cost-cloud-tpu-pods

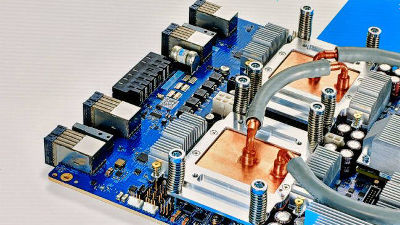

Google has developed its own processor, the "TPU", optimized for machine learning and deep learning, and the TPU is already in its third generation.

Google announces "TPU 3.0", its third-generation processor dedicated to machine learning, adopting liquid cooling because air cooling could no longer keep up - GIGAZINE

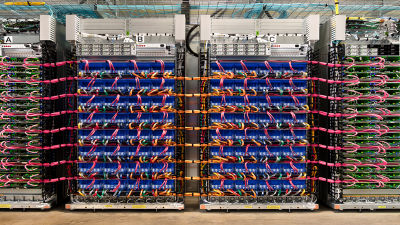

Training machine learning and deep learning models requires an enormous amount of computation, so a single training run can take anywhere from a few days to several weeks. Speeding up this computation is a major challenge for improving development productivity. To address it, Google Cloud Platform (GCP) offers Cloud TPU, a service that rents out TPU systems in the cloud. When running TensorFlow training that needs large-scale computing resources, researchers and developers can use a "TPU Pod", in which TPUs are connected over the data center network, to obtain machine learning results quickly and at low cost.

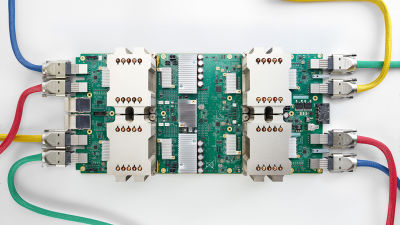

Google announced that the "TPU v2 Pod", built on the second-generation TPU, greatly improves machine learning computation speed and lets users run their computations at lower cost.

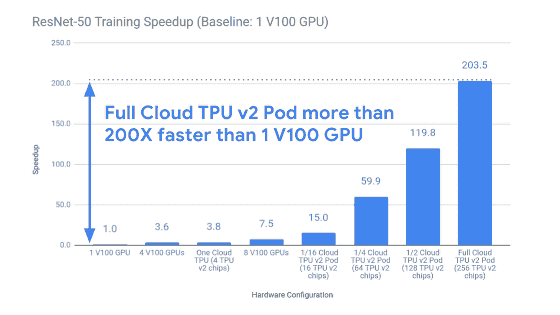

According to Google, a full-scale TPU v2 Pod with 256 TPU chips delivers more than 200 times the machine learning computing speed of NVIDIA's "Tesla V100".

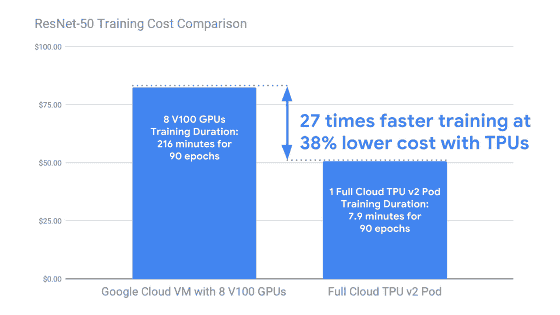

When training "ResNet-50", the TPU v2 Pod is 27 times faster than a machine with eight NVIDIA Tesla V100 GPUs on an n1-standard-64 Google Cloud VM, and the total computation cost is 38% lower.
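Taken together, the two ResNet-50 figures imply how the hourly prices of the two setups compare. The sketch below works this out in Python, assuming that total cost is simply hourly price multiplied by training time for both setups (the article does not say exactly how Google calculated cost). The function name and the 24-hour job length are hypothetical, chosen only for illustration.

```python
def implied_hourly_price_ratio(speedup: float, total_cost_reduction: float) -> float:
    """Ratio of the TPU Pod's hourly price to the GPU VM's hourly price.

    Assumption: total cost = hourly price * training time for both setups.
    speedup: how many times faster the TPU Pod finishes training (27 in the article).
    total_cost_reduction: fractional saving in total cost (0.38 for "38% lower").
    """
    # time_pod = time_gpu / speedup and cost_pod = (1 - reduction) * cost_gpu, so
    # price_pod / price_gpu = (cost_pod / time_pod) / (cost_gpu / time_gpu)
    #                       = (1 - reduction) * speedup
    return (1.0 - total_cost_reduction) * speedup


def pod_training_minutes(gpu_hours: float, speedup: float) -> float:
    """Training time on the TPU Pod, in minutes, for a job that takes gpu_hours on the GPU VM."""
    return gpu_hours * 60.0 / speedup


if __name__ == "__main__":
    # Hypothetical 24-hour ResNet-50 job on the 8x Tesla V100 VM.
    print(f"{implied_hourly_price_ratio(27, 0.38):.2f}")  # 16.74
    print(f"{pod_training_minutes(24.0, 27):.1f}")        # 53.3
```

Under that assumption, the TPU Pod costs roughly 16.7 times as much per hour as the GPU VM, but because it finishes 27 times sooner the total bill still comes out lower.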

TPU Pods are available as an alpha release, and you can request early access at the following site.

Cloud TPU Pod Alpha Access

https://goo.gl/forms/adfRDfl4uRpbosjH3


in Software, Web Service, Hardware, Posted by darkhorse_log