Google unveils sixth-generation TPU 'Trillium', supporting Google Cloud AI with 4.7x better performance per chip and over 67% better energy efficiency than TPU v5e

During

Introducing Trillium, sixth-generation TPUs | Google Cloud Blog

https://cloud.google.com/blog/products/compute/introducing-trillium-6th-gen-tpus/

Trillium is our latest generation of TPUs and delivers a 4.7x improvement in compute performance per chip over the previous generation, TPU v5e. #GoogleIO

— Google (@Google) May 14, 2024

Google has announced Trillium, its sixth-generation TPU and its most performant and energy-efficient TPU to date. Trillium delivers a 4.7x improvement in peak compute performance per chip over the previous-generation TPU v5e. To achieve this, Google expanded the size of the TPU's matrix multiplication units (MXUs) and increased their clock speed.
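As a rough illustration of why a larger, faster-clocked MXU raises peak throughput: a square systolic array with n × n multiply-accumulate cells can perform n² multiply-accumulates per cycle, so peak throughput grows with n² times the clock speed. The sketch below uses that simplified model. The array sizes and clock speeds are placeholder values chosen for illustration only; they are not specifications from Google's announcement, and `peak_flops` is a hypothetical helper.

```python
def peak_flops(mxu_dim: int, clock_hz: float, num_mxus: int = 1) -> float:
    """Peak FLOP/s of `num_mxus` square systolic arrays of size mxu_dim x mxu_dim.

    Simplified model: every cell completes one multiply-accumulate per cycle,
    and each multiply-accumulate counts as 2 floating-point operations.
    """
    return 2 * mxu_dim * mxu_dim * clock_hz * num_mxus


# Placeholder values (assumptions, not published specifications).
baseline = peak_flops(mxu_dim=128, clock_hz=1.0e9)
larger = peak_flops(mxu_dim=256, clock_hz=1.2e9)

# Doubling the array side quadruples the cell count; the clock adds a further factor.
speedup = larger / baseline
print(f"Peak throughput ratio: {speedup:.2f}x")
```

In this model the array size dominates: doubling each side of the array alone gives 4x, and the clock increase multiplies on top of that.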

Trillium also doubles high-bandwidth memory (HBM) capacity and bandwidth compared with TPU v5e, allowing it to work with larger models and larger key-value caches. The next-generation HBM that Trillium adopts offers higher memory bandwidth, better power efficiency, and a flexible channel architecture, which raises memory throughput and in turn shortens training time and serving latency for large models.
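To see why HBM capacity matters for key-value caches, here is a minimal sizing sketch. It uses the common estimate that a transformer stores one key and one value vector per layer, per KV head, per token. The model shape, weight footprint, and the two HBM capacities are hypothetical values picked for round numbers, not figures from the announcement.

```python
GIB = 1024 ** 3


def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, batch, bytes_per_elem=2):
    """Approximate KV cache size; the leading 2 covers keys and values."""
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_elem


def max_batch(hbm_bytes, weight_bytes, per_seq_bytes):
    """Sequences that fit in the HBM left over after the model weights."""
    return max(0, (hbm_bytes - weight_bytes) // per_seq_bytes)


# Hypothetical model: 32 layers, 8 KV heads, head dimension 128, 16-bit cache.
per_seq = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128, seq_len=8192, batch=1)

weights = 8 * GIB  # assumed weight footprint
small = max_batch(16 * GIB, weights, per_seq)  # assumed smaller HBM capacity
large = max_batch(32 * GIB, weights, per_seq)  # the same capacity, doubled

print(f"KV cache per 8192-token sequence: {per_seq / GIB:.1f} GiB")
print(f"Concurrent sequences: {small} -> {large}")
```

Because the weights take a fixed share of memory, doubling capacity can more than double the room left for the cache in a setup like this one.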

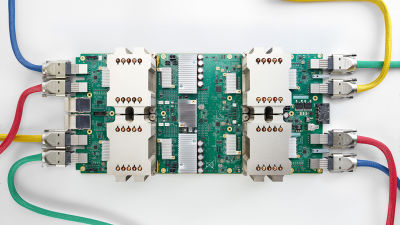

Additionally, Trillium has doubled the inter-chip interconnect (ICI) bandwidth compared to TPU v5e. The doubled ICI bandwidth enables the strategic combination of a custom optical ICI interconnect with 256 chips in a pod and

Trillium is also equipped with third-generation SparseCore, a specialized accelerator for processing the ultra-large embeddings common in advanced ranking and recommendation workloads. According to Google, SparseCore accelerates embedding-heavy workloads by strategically offloading random, fine-grained accesses from the TensorCore.

Trillium will enable us to train the next wave of foundational models faster, reduce latency and lower cost to serve those models, and is over 67% more energy efficient than the TPU v5e.
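One way to read the efficiency figure, assuming "67% more energy efficient" means 1.67x the work per unit of energy (the announcement does not spell out the metric), is to convert it into energy used for a fixed amount of work. `relative_energy` is a hypothetical helper for that arithmetic.

```python
def relative_energy(efficiency_gain_pct: float) -> float:
    """Energy needed for the same work, relative to the baseline chip.

    Assumes efficiency means work per unit of energy, so a gain of g percent
    divides the energy for a fixed job by (1 + g / 100).
    """
    return 1.0 / (1.0 + efficiency_gain_pct / 100.0)


energy = relative_energy(67.0)
savings_pct = (1.0 - energy) * 100.0
print(f"Energy for the same work: {energy:.2f}x baseline ({savings_pct:.0f}% less)")
```

Under that reading, a 67% efficiency gain corresponds to roughly 40% less energy for the same job, not 67% less.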

Trillium can scale up to 256 chips in a single high-bandwidth, low-latency pod. Beyond this pod-level scalability, multi-slice technology and Titanium Intelligence Processing Units (IPUs) let Trillium scale to hundreds of pods, connecting tens of thousands of chips in building-scale supercomputers interconnected at multiple petabits per second.
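The scaling claim is simple arithmetic, sketched below. The 256-chips-per-pod figure comes from the announcement; the pod counts and the 50,000-chip target are arbitrary examples, and the function names are hypothetical.

```python
import math

CHIPS_PER_POD = 256  # pod size stated in the announcement


def total_chips(num_pods: int) -> int:
    """Chips available when `num_pods` full pods are linked together."""
    return num_pods * CHIPS_PER_POD


def pods_needed(target_chips: int) -> int:
    """Smallest number of full pods that provides at least `target_chips`."""
    return math.ceil(target_chips / CHIPS_PER_POD)


# Example pod counts (assumptions chosen to span "hundreds of pods").
for pods in (100, 200, 300):
    print(f"{pods} pods -> {total_chips(pods):,} chips")

print(f"Pods needed for 50,000 chips: {pods_needed(50_000)}")
```

A few hundred pods is therefore enough to reach the "tens of thousands of chips" range.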

Trillium will be available to Google Cloud users in the second half of 2024.

The Google I/O 2024 keynote can be viewed below; the Trillium announcement begins at around the 39-minute mark of the video.

Google Keynote (Google I/O '24) - YouTube
