Google researchers claim that Google's AI processor ``TPU v4'' is faster and more efficient than NVIDIA's ``A100''

In 2021, Google

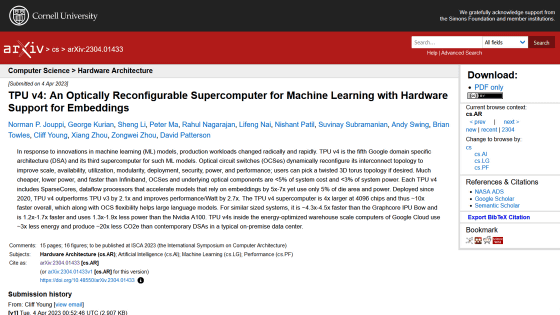

[2304.01433] TPU v4: An Optically Reconfigurable Supercomputer for Machine Learning with Hardware Support for Embeddings

https://arxiv.org/abs/2304.01433

TPU v4 enables performance, energy and CO2e efficiency gains | Google Cloud Blog

https://cloud.google.com/blog/topics/systems/tpu-v4-enables-performance-energy-and-co2e-efficiency-gains

Google Claims Its TPU v4 Outperforms Nvidia A100

https://www.datanami.com/2023/04/05/google-claims-its-tpu-v4-outperforms-nvidia-a100/

Google reveals its newest AI supercomputer, says it beats Nvidia

https://www.cnbc.com/2023/04/05/google-reveals-its-newest-ai-supercomputer-claims-it-beats-nvidia-.html

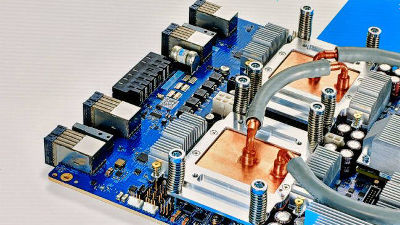

Developing machine learning models requires training large amounts of data, so as models become larger and more complex, so does the demand on computing resources. The TPU developed by Google is a processor specialized for machine learning and deep neural networks, and the performance of TPU v4 is 2.1 times higher than the previous generation TPU v3, and the performance per power is 2.7 times better. claims.

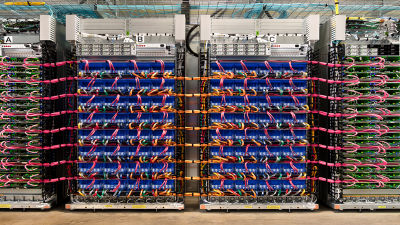

Google offers a supercomputer 'TPU v4 Pod' that combines 4096 TPU v4s through Google Cloud, a cloud computing service. TPU v4 Pod interconnects 4096 TPU v4 units via its own optical circuit switches (OCS), and is excellent in performance, scalability, availability, etc. Large-scale such as LaMDA , MUM , and PaLM It is said that it is the main product in the language model.

The following pictures are part of the TPU v4 Pod. Google says OCS for TPU v4 Pods is much cheaper, lower power, and faster than competing interconnect technology InfiniBand , and OCS is a small percentage of TPU v4 Pod system cost and system power. said less than 5%.

The advantage of using OCS for machine learning supercomputers is that it has excellent availability because it can easily bypass failed components by switching circuits. Availability is important for machine learning supercomputers because training large AI models and products such as Google's Bard and OpenAI's ChatGPT requires running a large number of chips for weeks to months.

In a paper, Google claims that TPU v4 Pods are 1.2-1.7 times faster and consume 1.3-1.9 times less power than similarly sized systems using NVIDIA's A100. Google Cloud-optimized TPU v4 Pods also consume 2-6x the energy and emit 20x the CO2 of modern domain-specific architectures (DSAs) in typical on-premises data centers. It is said that it is decreasing.

Regarding the reason why it is not compared with NVIDIA's latest AI chip '

The TPU v4 Pod was also used to train version 4 of the image generation AI 'Midjourney' . 'We are proud to work with Google Cloud to provide the creative community with a seamless experience leveraging Google's globally scalable infrastructure,' said David Holtz, founder and CEO of Midjourney. From algorithm training for version 4 with Google JAX on TPU v4 to running inference on GPUs, we are thrilled with the speed with which TPU v4 brings users' vibrant ideas to life.'

In a blog on April 5, 2023, NVIDIA reported that the performance of ``H100'' exceeded the previous generation ``A100'' by 4 times, citing benchmark test data published by third-party benchmark ``MLPerf''. doing.

H100, L4 and Orin Raise the Bar for Inference in MLPerf | NVIDIA Blogs

https://blogs.nvidia.com/blog/2023/04/05/inference-mlperf-ai/

Related Posts:

in Hardware, Posted by log1h_ik