NVIDIA announces 'H200' GPU for AI and HPC, with twice the inference speed of the H100 and 110 times the HPC performance of x86 CPUs

NVIDIA announced the 'H200', a GPU for AI and high-performance computing (HPC), on Monday, November 13, 2023. NVIDIA touts the H200 as delivering twice the inference speed of its previous-generation model, the 'H100'.

H200 Tensor Core GPU | NVIDIA

https://www.nvidia.com/en-us/data-center/h200/

NVIDIA Supercharges Hopper, the World's Leading AI Computing Platform | NVIDIA Newsroom

https://nvidianews.nvidia.com/news/nvidia-supercharges-hopper-the-worlds-leading-ai-computing-platform

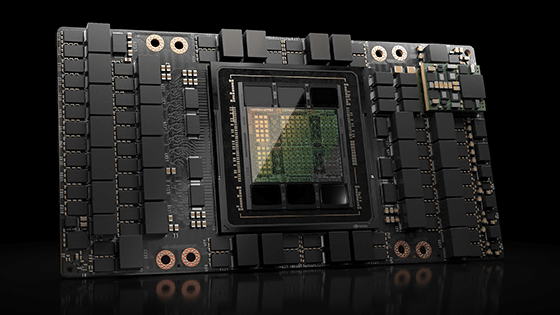

The H200 is built on NVIDIA's 'Hopper' GPU architecture and is optimized for AI processing and HPC. Its predecessor, the H100, also based on Hopper, delivered extremely high performance in the training and inference required for AI development, and demand was so high that it led to product shortages.

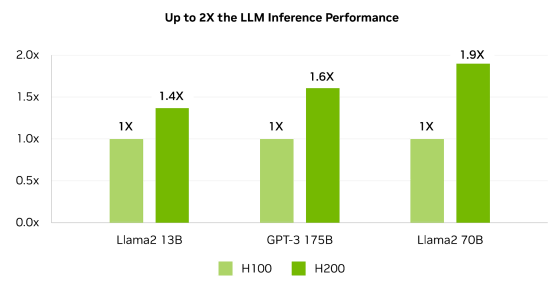

The newly announced H200 uses 'HBM3e', a fast, high-capacity memory technology, giving it 141GB of memory and 4.8TB/s of memory bandwidth. NVIDIA says this high-performance memory lets the H200 reach up to twice the inference speed of the H100. In NVIDIA's inference speed comparison graph of the H100 (light green) and H200 (green), the H200 is 1.4 times faster on Llama2 13B, 1.6 times faster on GPT-3 175B, and 1.9 times faster on Llama2 70B.
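Why would faster memory raise inference speed? When a large language model generates text token by token, it has to stream its weights out of GPU memory for every token, so single-stream decoding tends to be limited by memory bandwidth rather than by compute. The Python sketch below makes a rough upper-bound estimate under stated assumptions that do not come from the article: FP16 weights (2 bytes per parameter), every token reads all weights exactly once, no batching, and an H100 SXM bandwidth of 3.35TB/s. It is a back-of-envelope model, not NVIDIA's benchmark methodology.

```python
# Back-of-envelope, memory-bound estimate of single-stream LLM decode speed.
# Assumptions (not from the article): each generated token reads all model
# weights once from HBM, weights are stored in FP16 (2 bytes per parameter),
# and there is no batching. Real throughput is lower and also depends on
# KV-cache traffic, batch size and kernel efficiency.

BYTES_PER_PARAM_FP16 = 2

BANDWIDTH_TB_S = {
    "H100 SXM": 3.35,  # assumption: H100 SXM spec, not stated in the article
    "H200 SXM": 4.8,   # from the article
}


def max_tokens_per_second(params_billion: float, bandwidth_tb_s: float) -> float:
    """Upper bound on tokens/s when decoding is limited purely by weight reads."""
    weight_bytes = params_billion * 1e9 * BYTES_PER_PARAM_FP16
    return bandwidth_tb_s * 1e12 / weight_bytes


for model, params in [("Llama2 13B", 13), ("Llama2 70B", 70)]:
    h100 = max_tokens_per_second(params, BANDWIDTH_TB_S["H100 SXM"])
    h200 = max_tokens_per_second(params, BANDWIDTH_TB_S["H200 SXM"])
    print(f"{model}: H100 <= {h100:.1f} tok/s, "
          f"H200 <= {h200:.1f} tok/s, ratio {h200 / h100:.2f}x")
```

Under these assumptions the bandwidth ratio alone gives about 1.43x for any model size, close to the 1.4x quoted for Llama2 13B. The larger gains quoted for bigger models are not explained by bandwidth alone; the H200's larger 141GB memory, which leaves room for bigger batches and KV caches, is one plausible contributor.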

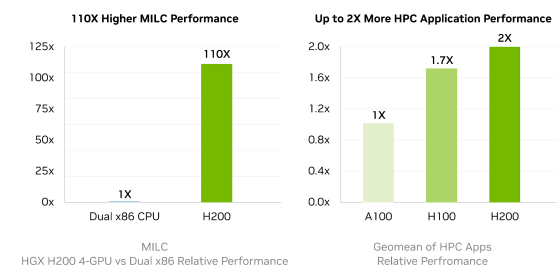

In addition, NVIDIA states that the H200's HPC performance is 110 times that of x86 CPUs, and shows it delivering twice the performance of NVIDIA's GPU '

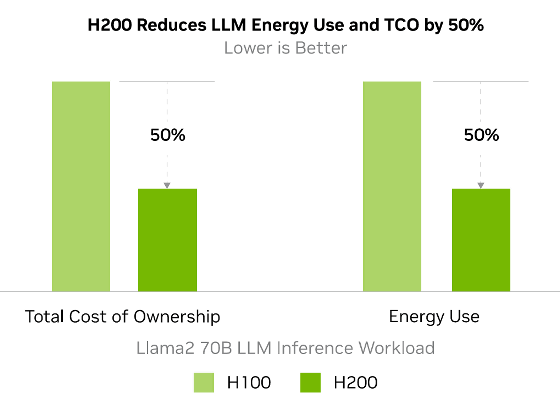

NVIDIA further claims that the H200 halves operating costs and energy consumption compared with the H100.
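The energy claim can be related to the speed claim with simple arithmetic: if two GPUs draw the same power, the faster one spends proportionally less energy per unit of work. The sketch below assumes, without confirmation from the article, that both GPUs run at the same 700W board power, and reuses the 1.9x Llama2 70B speedup quoted earlier; the baseline throughput is a hypothetical placeholder that cancels out of the ratio.

```python
# Illustrative arithmetic only: how a throughput gain at equal power draw
# translates into energy per generated token.
# Assumptions (not from the article): both GPUs draw the same 700 W, and the
# H200 delivers 1.9x the H100's throughput. The baseline value is hypothetical.

POWER_W = 700.0             # assumption: equal power draw for both GPUs
H100_TOKENS_PER_S = 1000.0  # hypothetical baseline throughput
SPEEDUP = 1.9               # NVIDIA's Llama2 70B comparison


def joules_per_token(power_w: float, tokens_per_s: float) -> float:
    """Energy per token = power (J/s) divided by throughput (tokens/s)."""
    return power_w / tokens_per_s


h100 = joules_per_token(POWER_W, H100_TOKENS_PER_S)
h200 = joules_per_token(POWER_W, H100_TOKENS_PER_S * SPEEDUP)
print(f"H100: {h100:.3f} J/token, H200: {h200:.3f} J/token")
print(f"H200 energy per token relative to H100: {h200 / h100:.0%}")
```

With a 1.9x speedup at equal power, energy per token falls to about 53% of the H100's, which is roughly the halving NVIDIA describes; actual savings depend on the real power draw under each workload.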

The detailed specifications of the H200 are as follows.

| Specification | H200 SXM |
|---|---|
| FP64 | 34 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS |
| FP32 | 67 TFLOPS |
| TF32 Tensor Core | 989 TFLOPS |
| BFLOAT16 Tensor Core | 1,979 TFLOPS |
| FP16 Tensor Core | 1,979 TFLOPS |
| FP8 Tensor Core | 3,958 TFLOPS |
| INT8 Tensor Core | 3,958 TOPS |
| Memory capacity | 141GB |
| Memory bandwidth | 4.8TB/s |
| Decoders | 7 NVDEC, 7 JPEG |
| Max power consumption | Up to 700W |
| Multi-Instance GPU (MIG) | Up to 7 MIGs @ 16.5GB each |
| Form factor | SXM |
| Connectivity | NVIDIA NVLink: 900GB/s; PCIe Gen5: 128GB/s |
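A few useful ratios can be derived directly from the spec table. The sketch below assumes, as NVIDIA's Hopper datasheets footnote, that the TF32, BFLOAT16, FP16, FP8 and INT8 Tensor Core figures are quoted with 2:4 structured sparsity, so dense throughput is half the listed value. It also computes the roofline "ridge point", the arithmetic intensity (FLOPs per byte read from memory) above which a kernel becomes compute-bound rather than bandwidth-bound.

```python
# Derived figures from the H200 SXM spec table.
# Assumption: sparse Tensor Core figures (TF32/BF16/FP16/FP8/INT8) include
# 2:4 structured sparsity, so dense throughput is half the listed value.
# The FP64 Tensor Core figure is treated as dense.

SPEC = {
    "fp64_tensor_tflops": 67,
    "tf32_tensor_tflops": 989,
    "fp16_tensor_tflops": 1979,
    "fp8_tensor_tflops": 3958,
    "memory_gb": 141,
    "bandwidth_tb_s": 4.8,
    "mig_instances": 7,
    "mig_memory_gb": 16.5,
}


def dense(sparse_tflops: float) -> float:
    """Dense throughput, assuming the quoted figure includes 2:4 sparsity."""
    return sparse_tflops / 2


def ridge_point(tflops: float, bandwidth_tb_s: float) -> float:
    """Roofline ridge point: FLOPs per byte of memory traffic."""
    return (tflops * 1e12) / (bandwidth_tb_s * 1e12)


fp8_vs_fp16 = SPEC["fp8_tensor_tflops"] / SPEC["fp16_tensor_tflops"]
fp16_dense = dense(SPEC["fp16_tensor_tflops"])
fp16_ridge = ridge_point(fp16_dense, SPEC["bandwidth_tb_s"])
fp64_ridge = ridge_point(SPEC["fp64_tensor_tflops"], SPEC["bandwidth_tb_s"])
mig_total_gb = SPEC["mig_instances"] * SPEC["mig_memory_gb"]

print(f"FP8 vs FP16 Tensor Core throughput: {fp8_vs_fp16:.1f}x")
print(f"Dense FP16 Tensor Core: {fp16_dense:.1f} TFLOPS")
print(f"Ridge point, dense FP16: {fp16_ridge:.0f} FLOP/byte")
print(f"Ridge point, FP64 Tensor Core: {fp64_ridge:.1f} FLOP/byte")
print(f"Memory in 7 MIG instances: {mig_total_gb} GB of {SPEC['memory_gb']} GB")
```

At dense FP16, a kernel needs roughly 200 FLOPs per byte of memory traffic before compute becomes the bottleneck; most LLM decoding work falls well below that, which is why the bandwidth increase matters so much for inference.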

The H200 will be offered on NVIDIA HGX H200 server boards in 4-way and 8-way configurations, and will also be available in the 'NVIDIA GH200 Grace Hopper Superchip', a chip system specialized for AI and HPC.
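Multiplying the per-GPU figures from the spec table by the number of GPUs on a board gives a rough sense of an HGX H200 system's aggregate resources. This is a naive sum that ignores interconnect overhead and software efficiency, and the FP8 figure carries the same sparsity caveat as the per-GPU number.

```python
# Naive aggregate resources of HGX H200 boards, from per-GPU table figures.
# Simple multiplication only: ignores NVLink overhead and real-world efficiency.

H200_MEMORY_GB = 141
H200_FP8_TFLOPS = 3958  # FP8 Tensor Core figure from the spec table


def aggregate(gpus: int) -> tuple[int, float]:
    """Return (total HBM3e in GB, total FP8 Tensor Core PFLOPS) for a board."""
    return gpus * H200_MEMORY_GB, gpus * H200_FP8_TFLOPS / 1000


for gpus in (4, 8):
    memory_gb, fp8_pflops = aggregate(gpus)
    print(f"HGX H200 {gpus}-way: {memory_gb} GB HBM3e, ~{fp8_pflops:.1f} PFLOPS FP8")
```

For the 8-way board this works out to about 1.1TB of HBM3e and roughly 32 PFLOPS of FP8 Tensor Core throughput.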


in Hardware, Posted by log1o_hf