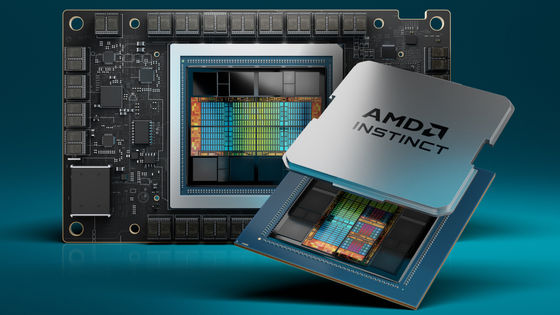

AMD unveils AI accelerator 'Instinct MI325' with 256GB memory and 6.0TB/s bandwidth, beating NVIDIA's H200 in some tests

At its Advancing AI 2024 event, AMD unveiled the Instinct MI325X AI accelerator.

AMD Delivers Leadership AI Performance with AMD Instinct MI325X Accelerators :: Advanced Micro Devices, Inc. (AMD)

https://ir.amd.com/news-events/press-releases/detail/1220/amd-delivers-leadership-ai-performance-with-amd-instinct

AMD reveals core specs for Instinct MI355X CDNA4 AI accelerator — slated for shipping in the second half of 2025 | Tom's Hardware

https://www.tomshardware.com/tech-industry/artificial-intelligence/amd-reveals-core-specs-for-instinct-mi355x-cdna4-ai-accelerator-slated-for-shipping-in-the-second-half-of-2025

AMD first disclosed the Instinct MI325X during its Q2 2024 earnings call in July 2024.

AMD's AI data center division doubles year-over-year as AI-specific chips hit, recording record revenue of $2.8 billion in the second quarter of 2024 - GIGAZINE

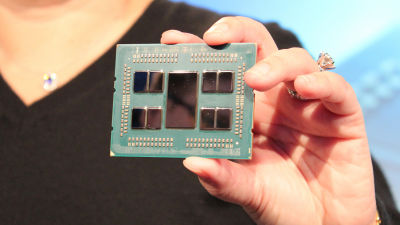

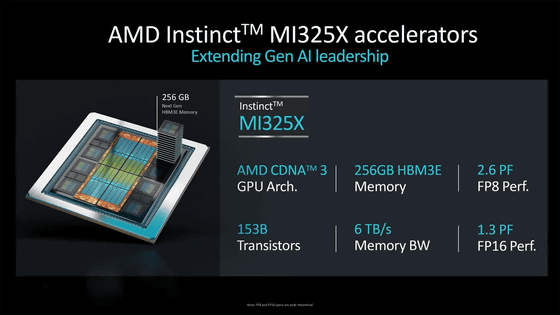

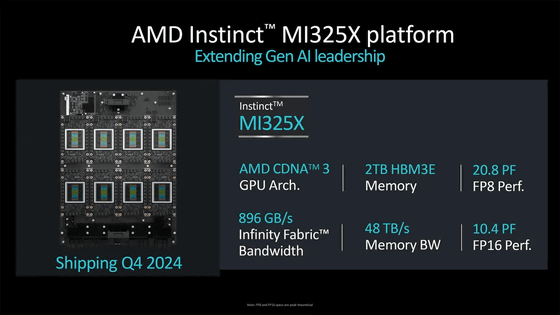

The main change from the MI300X to the MI325X is per-GPU memory capacity, which rises from 192GB to 256GB. The earnings call had cited a maximum of 288GB, so the officially announced capacity is somewhat lower than first stated. Memory bandwidth is 6TB/s, and the chip contains 153 billion transistors. Peak compute is 2.6 PFLOPS for FP8 (8-bit floating point) operations and 1.3 PFLOPS for FP16 (16-bit floating point) operations.

A platform combining eight MI325X accelerators delivers 20.8 PFLOPS for FP8 operations and 10.4 PFLOPS for FP16 operations.
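The platform figures follow from multiplying the per-GPU peaks by eight. The sketch below reproduces that arithmetic using only the numbers quoted in this article; the dictionary keys and the `platform_totals` helper are hypothetical names for illustration, and linear scaling gives theoretical peaks only, not measured throughput.

```python
# Per-GPU peak figures for the Instinct MI325X as quoted in the article.
# The key names are illustrative, not an AMD-defined schema.
PER_GPU = {
    "fp8_pflops": 2.6,
    "fp16_pflops": 1.3,
    "memory_gb": 256,
}


def platform_totals(per_gpu: dict, num_gpus: int) -> dict:
    """Scale per-GPU peak figures linearly to an n-GPU platform.

    Assumes perfect linear scaling, which yields theoretical peaks only;
    real workloads lose some throughput to inter-GPU communication.
    """
    # round() guards against floating-point noise for arbitrary GPU counts
    return {key: round(value * num_gpus, 2) for key, value in per_gpu.items()}


totals = platform_totals(PER_GPU, 8)
print(totals)  # {'fp8_pflops': 20.8, 'fp16_pflops': 10.4, 'memory_gb': 2048}
```

The same multiplication also implies 2,048GB of total accelerator memory across the eight-GPU platform.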

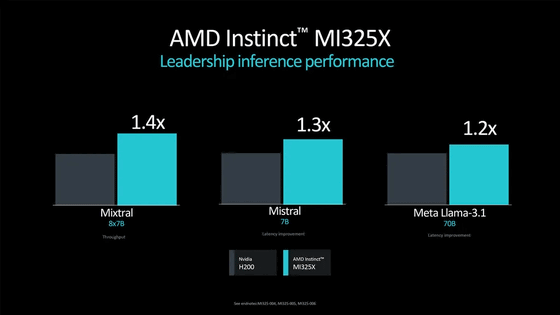

AMD showed a single-GPU performance comparison between the MI325X and NVIDIA's H200 running large language models. The MI325X outperformed the H200 in all three tests:

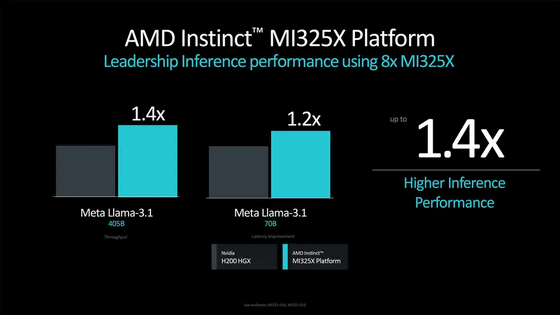

AMD also compared multi-GPU performance, pitting eight MI325X accelerators against eight H200 GPUs running the Llama 3.1 405B and 70B models. The results are below: the MI325X platform delivered 1.4 times the performance of the H200 HGX.

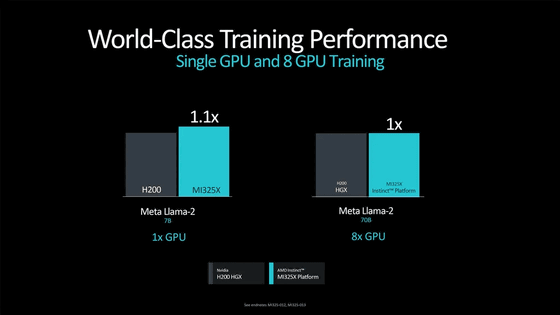

AMD claims that the MI325X's performance when training Meta's Llama 2 is roughly equivalent to the H200's in both single-GPU and multi-GPU configurations.

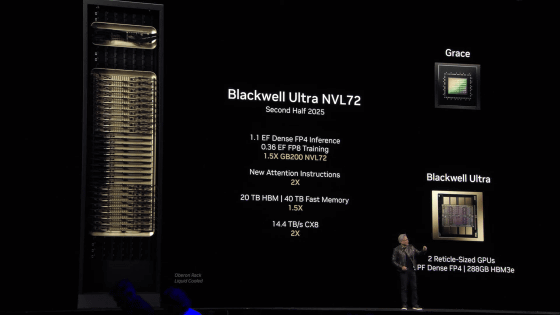

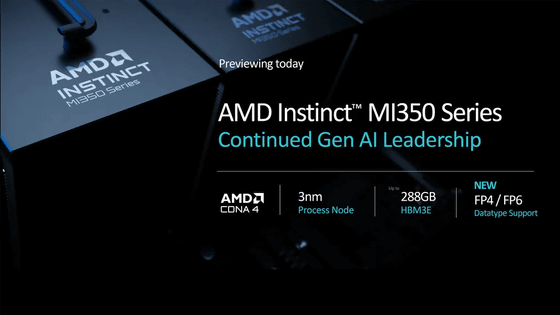

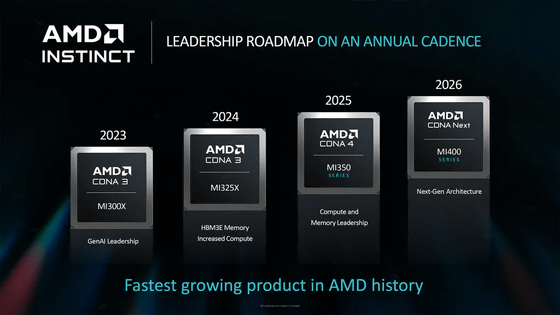

AMD also announced the 'Instinct MI350' series as the step up from the Instinct MI300 series. It will use the CDNA 4 architecture and offer up to 288GB of memory.

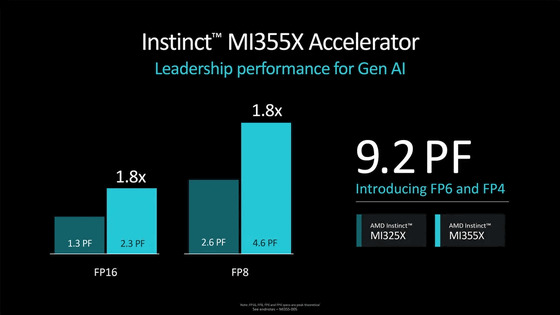

The MI355X, part of the Instinct MI350 series, is said to deliver 1.8 times the performance of the MI325X in both FP16 and FP8 operations. The Instinct MI350 series also adds support for the FP6 and FP4 data types, and the MI355X is said to reach 9.2 PFLOPS with them.
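As a rough check, applying the claimed 1.8x uplift to the MI325X peaks quoted earlier gives projected MI355X figures. This is back-of-envelope arithmetic on the article's numbers, not AMD's published spec sheet; `project` is a hypothetical helper, and the FP4/FP6-to-FP8 ratio printed at the end is only a consistency check, assuming that halving data width roughly doubles peak throughput.

```python
# MI325X per-GPU peaks quoted in the article, in PFLOPS.
MI325X = {"fp16": 1.3, "fp8": 2.6}

# AMD's stated MI355X uplift over the MI325X for FP16 and FP8.
UPLIFT = 1.8

# MI355X figure quoted for the new FP6/FP4 data types, in PFLOPS.
MI355X_FP4_FP6 = 9.2


def project(baseline: dict, factor: float) -> dict:
    """Multiply each baseline figure by the claimed uplift, rounded to 2 decimals."""
    return {dtype: round(pflops * factor, 2) for dtype, pflops in baseline.items()}


projected = project(MI325X, UPLIFT)
print(projected)  # {'fp16': 2.34, 'fp8': 4.68}

# Ratio of the quoted FP4/FP6 figure to the projected FP8 figure.
ratio = round(MI355X_FP4_FP6 / projected["fp8"], 2)
print(ratio)  # 1.97
```

The ratio of roughly 2 suggests the 9.2 PFLOPS figure sits about one precision step above FP8, though that reading is an inference rather than something AMD states here.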

According to AMD, the MI355X is scheduled to ship in the second half of 2025. AMD also stated that the Instinct MI400 series, based on the next-generation architecture, is targeted for volume shipments in 2026.

You can watch AMD's Advancing AI 2024 presentation in the video below.

Advancing AI 2024 @AMD - YouTube


in Hardware, Posted by log1i_yk