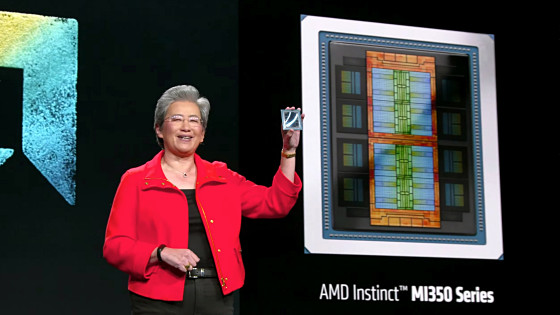

AMD breaks into NVIDIA's stronghold with its 'Instinct MI300' series of AI chips; OpenAI, Microsoft, Meta, and Oracle have already decided to adopt them

AMD has announced the 'Instinct MI300X', a GPU that it says delivers up to 1.6 times the performance of the NVIDIA H100, and the 'Instinct MI300A', a data center APU that combines GPU and CPU cores.

AMD Delivers Leadership Portfolio of Data Center AI Solutions with AMD Instinct MI300 Series :: Advanced Micro Devices, Inc. (AMD)

https://ir.amd.com/news-events/press-releases/detail/1173/amd-delivers-leadership-portfolio-of-data-center-ai

Meta and Microsoft to buy AMD's new AI chip as alternative to Nvidia

https://www.cnbc.com/2023/12/06/meta-and-microsoft-to-buy-amds-new-ai-chip-as-alternative-to-nvidia.html

AMD introduces data center and PC chips aimed at accelerating AI | VentureBeat

https://venturebeat.com/ai/amd-introduces-data-center-and-pc-chips-aimed-at-accelerating-ai/

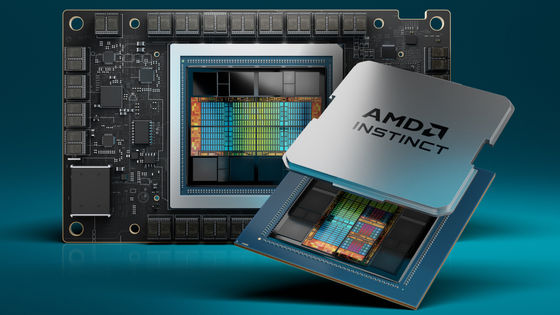

◆Instinct MI300X

Instinct MI300X is a GPU based on AMD's CDNA 3 architecture. Compared with the previous-generation Instinct MI250X, it has approximately 40% more compute units, 1.5 times the memory capacity, and 1.7 times the peak theoretical memory bandwidth.

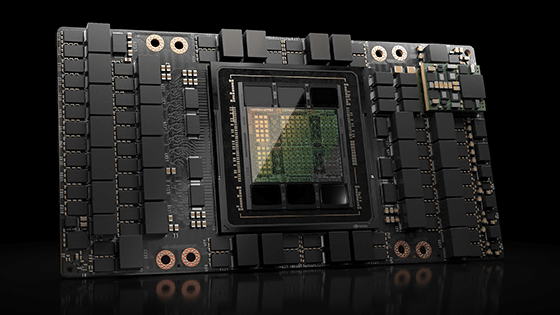

AMD says the Instinct MI300X outperforms competing products such as the NVIDIA H100, delivering up to 1.6 times the throughput when running inference on large language models. Its memory capacity is also 2.4 times that of the H100: 192GB per GPU, or about 1.5TB on an eight-GPU platform. The Instinct MI300X is currently the only product that can run inference on a model the size of Llama 2 70B (70 billion parameters) on a single device.
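The 2.4x capacity figure and the single-device Llama 2 70B claim can be checked with simple arithmetic. The sketch below is a rough estimate under stated assumptions: weights stored in FP16/BF16 (2 bytes per parameter), decimal gigabytes, and only the weights counted (no KV cache, activations, or framework overhead). The per-GPU capacities (192GB for the MI300X, 80GB for the H100) come from the vendors' spec sheets rather than this article, and the helper names are hypothetical.

```python
# Rough check: do the weights of a 70B-parameter model fit on a single GPU?
# Assumptions: FP16/BF16 weights (2 bytes per parameter), decimal GB,
# and no allowance for KV cache, activations, or runtime overhead.

BYTES_PER_PARAM_FP16 = 2
GB = 10**9

def weight_footprint_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Return the size of the model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / GB

# Per-device memory capacity in GB (hypothetical table for illustration).
DEVICES = {"Instinct MI300X": 192, "NVIDIA H100": 80}

llama2_70b = weight_footprint_gb(70e9)  # 70e9 params * 2 bytes = 140 GB
for name, capacity in DEVICES.items():
    fits = llama2_70b <= capacity
    print(f"{name}: {capacity} GB, 70B FP16 weights ({llama2_70b:.0f} GB) fit: {fits}")

# 192 / 80 = 2.4, matching the capacity ratio quoted above.
print(f"capacity ratio: {DEVICES['Instinct MI300X'] / DEVICES['NVIDIA H100']:.1f}x")
# Eight MI300X GPUs: 8 * 192 = 1536 GB, i.e. about 1.5 TB.
print(f"eight-GPU platform: {8 * DEVICES['Instinct MI300X']} GB")
```

Under these assumptions the 140GB of weights fit on one MI300X but not on one H100. The result depends on precision: at 1 byte per parameter the same weights would take 70GB.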

Several major AI companies have already announced plans to adopt the Instinct MI300X. Microsoft CTO Kevin Scott said that Microsoft will offer access to the Instinct MI300X through Azure.

Meta, which along with Microsoft bought more H100s than any other company in 2023, also announced that it will use the Instinct MI300X for AI inference workloads such as AI sticker processing, image editing, and its AI assistant.

OpenAI will also provide access to the Instinct MI300X with its …

◆Instinct MI300A

Instinct MI300A is an APU that combines AMD CDNA 3 GPU cores with Zen 4 CPU cores, and AMD positions it as "the world's first data center APU for high performance computing (HPC) and AI."

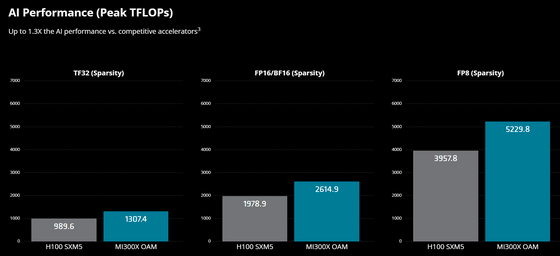

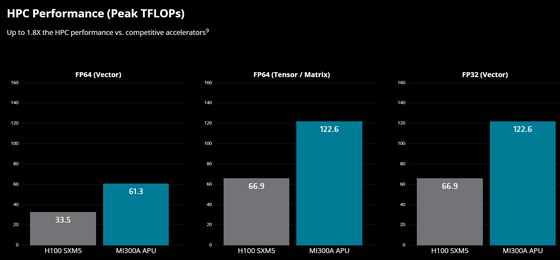

AMD says the MI300A is particularly energy efficient, delivering approximately 2.6 times the HPC workload performance per watt of the previous-generation Instinct MI250X. Its AI performance is roughly on par with the NVIDIA H100, but it delivers up to 1.8 times the H100's performance on HPC workloads.

◆Forum now open

A forum for this article has been set up on GIGAZINE's official Discord server. Anyone can post freely, so please feel free to comment! If you do not have a Discord account, please create one by referring to our article explaining how to create an account!

• Discord | 'Do you think AMD can compete with NVIDIA with chips for AI development?' | GIGAZINE

https://discord.com/channels/1037961069903216680/1182242859919884289


in Hardware, Posted by log1l_ks