Ampere Computing announces 3nm process '256-core CPU'

On May 16, 2024, American semiconductor company Ampere Computing announced a 256-core AmpereOne CPU built on a 3nm process. The company also said it will partner with Qualcomm to build AI inference servers that combine Ampere CPUs with Qualcomm's AI accelerators.

Ampere Scales AmpereOne Product Family to 256 Cores, Announces Joint Effort with Qualcomm on CPUs and Accelerators

Ampere announces 256-core 3nm CPU, unveils partnership with Qualcomm | Tom's Hardware

https://www.tomshardware.com/pc-components/cpus/ampere-announces-256-core-3nm-cpu-unveils-partnership-with-qualcomm

Ampere scales CPU to 256 cores and partners with Qualcomm on cloud AI | VentureBeat

https://venturebeat.com/ai/ampere-scales-cpu-to-256-cores-and-partners-with-qualcomm-on-cloud-ai/

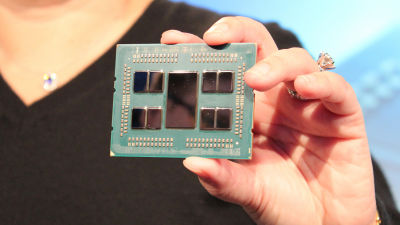

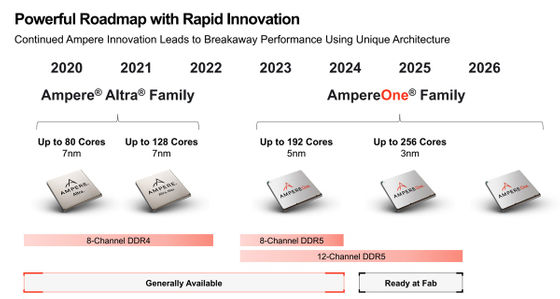

The newly announced CPU is a 256-core part with 12-channel memory, manufactured on TSMC's 3nm process (N3). It is the successor to the 192-core AmpereOne that the company announced in May 2023.

'We are expanding our product family with a new 256-core product that delivers 40% more performance than anything else on the market,' said Ampere Computing CEO Renée James. 'The key to this CPU is not the number of cores, but what you can do with this platform. Performance, memory, cache and AI computing efficiency will enable a variety of new features.'

Ampere Computing's chips are known for their power efficiency. The company reports that when running Meta's large language model (LLM) Llama 3, the 128-core Ampere Altra CPU achieves the same performance as a combination of an x86 CPU and an NVIDIA A10 GPU while consuming one-third the power.

Ampere Computing said the new 256-core CPU will have the same air-cooling solution as the previous model, and IT news site Tom's Hardware said, 'This means that

Ampere CPUs are suitable for many general-purpose cloud instances because of their low power consumption, but Tom's Hardware notes that their AI capabilities are limited. Ampere Computing has therefore partnered with Qualcomm to develop a platform for LLM inference based on Qualcomm's Cloud AI 100 Ultra accelerator.

Regarding AI efforts, Jeff Wittich, chief product officer at Ampere Computing, said, 'Our CPUs can run a variety of workloads, from the most common cloud-native applications to AI. This includes AI integrated with traditional cloud-native applications such as data processing and media distribution.'


in Hardware, Posted by log1l_ks