AMD announces 'AMD Instinct MI300X', an accelerator for generative AI that supports up to 192 GB of memory

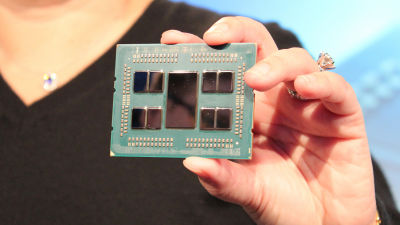

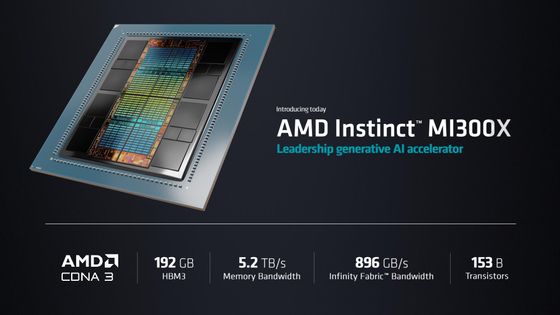

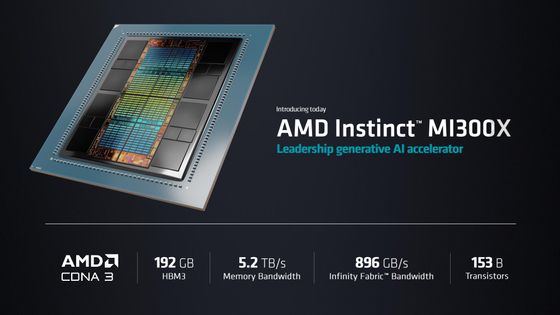

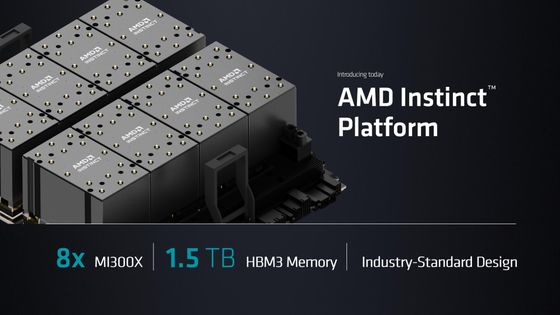

On June 13, 2023, AMD announced the 'AMD Instinct MI300X', an accelerator designed for training the large language models used in AI. The MI300X uses only GPU tiles rather than a combination of CPU and GPU tiles, and supports up to 192 GB of HBM3 memory.

AMD Expands Leadership Data Center Portfolio with New EPYC CPUs and Shares Details on Next-Generation AMD Instinct Accelerator and Software Enablement for Generative AI

AMD Expands AI/HPC Product Lineup With Flagship GPU-only Instinct MI300X with 192GB Memory

https://www.anandtech.com/show/18915/amd-expands-mi300-family-with-mi300x-gpu-only-192gb-memory

AMD Preps GPU to Challenge Nvidia's Grip on the Generative AI Market | PCMag

https://www.pcmag.com/news/amd-preps-gpu-to-challenge-nvidias-grip-on-the-generative-ai-market

At the 'Data Center and AI Technology Premiere' event held on June 13, AMD announced that it has entered into technology partnerships with Amazon Web Services (AWS), Hugging Face, Meta, Microsoft Azure, and others to bring next-generation high-performance CPU and AI accelerator solutions to market.

“AI is the defining technology that will shape next-generation computing, and frankly, AMD's biggest and most strategic long-term growth opportunity,” said AMD CEO Lisa Su as she introduced the new data center products.

AMD's newly announced AI accelerator, the 'MI300X', is designed for training large language models such as the one behind ChatGPT. Based on the next-generation AMD CDNA 3 accelerator architecture, it supports up to 192 GB of HBM3 memory, providing the compute and memory efficiency needed to train and run inference on the large language models that are critical for generative AI.

Su said that the MI300X is very similar to the MI300A but was designed specifically for generative AI. “It can reduce the number of GPUs required for the largest language models, significantly improving performance, especially inference, and reducing total cost of ownership,” she said.

MI300X's large-capacity memory allows large-scale open-source language models such as

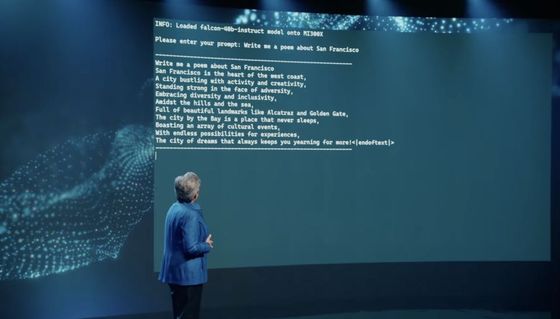

At the event, AMD also demonstrated Falcon-40B running on a single MI300X, generating text within seconds in response to the prompt 'write a poem about San Francisco.' AMD plans to begin offering the MI300X to major customers in the third quarter of 2023.
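Why a 40-billion-parameter model can fit on one accelerator comes down to simple arithmetic: parameter count times bytes per parameter. The sketch below is a rough back-of-the-envelope estimate, not AMD's sizing method. It assumes 16-bit weights (2 bytes per parameter), treats 1 GB as 10^9 bytes, uses the nominal 40 billion parameter count, and ignores the KV cache, activations, and runtime overhead that a real deployment also needs. The function name `weight_memory_gb` is hypothetical.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model weights.
# Assumptions (not from the article): 16-bit weights (2 bytes per parameter),
# decimal gigabytes (1 GB = 1e9 bytes), and no allowance for KV cache,
# activations, or framework overhead.

MI300X_HBM3_GB = 192  # HBM3 capacity stated in the article


def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Return the approximate weight footprint in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


falcon_40b_gb = weight_memory_gb(40e9)  # nominal 40 billion parameters
print(f"Falcon-40B weights at 16-bit precision: ~{falcon_40b_gb:.0f} GB")
print("Fits in one MI300X's HBM3:", falcon_40b_gb <= MI300X_HBM3_GB)
```

Under these assumptions the weights take roughly 80 GB, which leaves headroom within 192 GB; at 32-bit precision the same estimate doubles to about 160 GB.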

AMD predicts that the data center chip market for generative AI, worth about $30 billion (about 4.2 trillion yen) in 2023, will grow to about $150 billion (about 21 trillion yen) by 2027. Technology outlet PCMag noted that with this new enterprise accelerator, AMD is trying to take a share of the market that NVIDIA currently holds.
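For a sense of scale, the two figures in AMD's forecast imply a compound annual growth rate. The sketch below derives that rate using only the article's numbers ($30 billion in 2023, $150 billion in 2027, so a 4-year span); it is simple arithmetic on the forecast, not a figure AMD itself quoted, and the function name `cagr` is hypothetical.

```python
# Implied compound annual growth rate (CAGR) from the forecast in the article.
# Inputs are the article's two figures; the 4-year span comes from 2023 -> 2027.


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


rate = cagr(30e9, 150e9, 2027 - 2023)
print(f"Implied growth: {rate:.1%} per year")
```

A fivefold increase over four years works out to roughly 49.5% growth per year.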

In addition, AMD announced updates to the 4th generation AMD EPYC processors, computing infrastructure optimized for modern data centers, along with the 'Ryzen Pro 7000' series for desktop PCs and the 'Ryzen Pro 7040' series for laptop PCs.

AMD Expands World Class Commercial Portfolio with Leadership Mobile and Desktop Processors for Business Users

https://www.amd.com/en/newsroom/press-releases/2023-6-13-AMD-Expands-World-Class-Commercial-Portfolio-with-.html

AMD Ryzen Pro 7000 and 7040 Series Processors: Zen 4 For Commercial Deployments

https://www.anandtech.com/show/18907/amd-ryzen-pro-7000-and-7040-series-processors-zen-4-for-commercial-deployments

in Hardware, Posted by log1h_ik