Microsoft launches high-performance cloud service for AI development using AMD's AI chip 'MI300X'

NVIDIA's AI-specialized chips hold a large share of the market for AI infrastructure used for AI training and inference. Meanwhile, Microsoft has announced that it will begin offering the AI infrastructure 'ND MI300X v5,' which uses AMD's AI-specialized chip 'MI300X.'

Introducing the new Azure AI infrastructure VM series ND MI300X v5 - Microsoft Community Hub

https://techcommunity.microsoft.com/t5/azure-high-performance-computing/introducing-the-new-azure-ai-infrastructure-vm-series-nd-mi300x/ba-p/4145152

AMD Instinct MI300X Accelerators Power Microsoft Azure OpenAI Service Workloads and New Azure ND MI300X V5 VMs :: Advanced Micro Devices, Inc. (AMD)

https://ir.amd.com/news-events/press-releases/detail/1198/amd-instinct-mi300x-accelerators-power-microsoft-azure

Small-scale AI training and inference is possible on consumer GPUs and CPUs, but general-purpose GPUs are inefficient for training and running large AI models such as large language models. For this reason, semiconductor manufacturers such as NVIDIA, Intel, and AMD are developing chips specialized for AI training and inference. At the time of writing, however, AI processing infrastructure built on NVIDIA's AI-specialized chip 'H100' accounts for the majority of the market, and AMD's and Intel's AI-specialized chips have yet to gain much of a foothold.

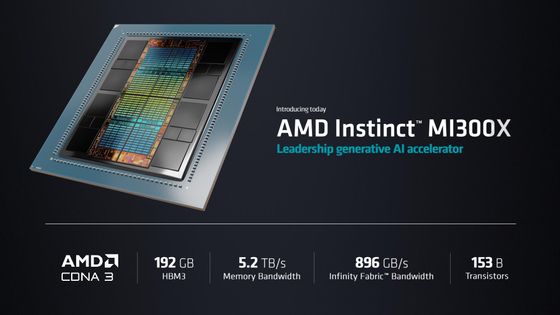

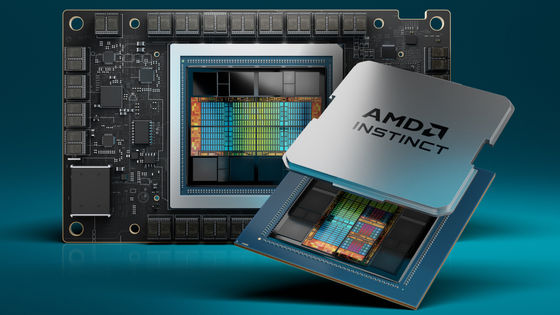

AMD's MI300X is an AI-specialized chip that delivers up to 1.6 times the performance of the H100, and it has been reported to perform up to 2.1 times better in some test environments. As of December 2023, AI infrastructure providers including OpenAI, Microsoft, Meta, and Oracle had announced that they would adopt the MI300X.

AMD to cut into NVIDIA's stronghold with AI chip 'Instinct MI300' series, OpenAI, Microsoft, Meta, Oracle have already decided to adopt it - GIGAZINE

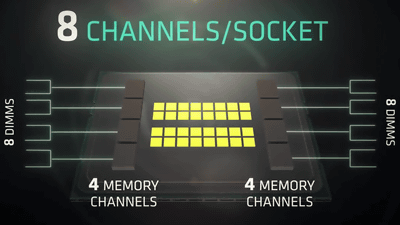

Microsoft has now announced that it will begin offering 'ND MI300X v5,' AI infrastructure built on the MI300X, as an Azure service. The ND MI300X v5 is equipped with 1.5TB of HBM (high-bandwidth memory) with a memory bandwidth of 5.3TB/s. Microsoft claims that this fast, high-capacity memory accelerates processing while reducing power consumption and costs.
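To make the 1.5TB figure concrete, the rough calculation below estimates how many GPUs are needed just to hold a model's weights. It is a back-of-the-envelope sketch, not Microsoft's sizing method, and it rests on assumptions not stated in this article: each MI300X carries 192GB of HBM3, an ND MI300X v5 VM has eight of them (8 x 192GB = 1,536GB, or about 1.5TB), and weights are stored in FP16/BF16 at 2 bytes per parameter. The helper names and the model sizes are hypothetical and chosen only for illustration.

```python
import math

# Assumed figures (not from the article): 192 GB of HBM3 per MI300X,
# eight MI300X accelerators per ND MI300X v5 VM (about 1.5 TB in total).
HBM_PER_GPU_GB = 192
GPUS_PER_VM = 8


def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 10**9 bytes).

    bytes_per_param=2 corresponds to FP16/BF16. KV cache, activations and
    framework overhead are ignored, so the result is a lower bound.
    """
    return num_params * bytes_per_param / 1e9


def gpus_needed(num_params: float, bytes_per_param: int = 2) -> int:
    """Minimum number of GPUs whose combined HBM can hold the weights."""
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / HBM_PER_GPU_GB)


if __name__ == "__main__":
    print(f"HBM per VM: {HBM_PER_GPU_GB * GPUS_PER_VM} GB")
    # Hypothetical model sizes, in parameters.
    for params in (7e9, 70e9, 405e9):
        gb = weight_memory_gb(params)
        print(f"{params / 1e9:.0f}B params -> {gb:.0f} GB of weights, "
              f"at least {gpus_needed(params)} GPU(s)")
```

Under these assumptions a 70-billion-parameter model's FP16 weights (140GB) fit on a single GPU, which illustrates why larger per-GPU memory can mean fewer GPUs per model and, in turn, lower cost.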

In addition to being optimized for Azure, the ND MI300X v5 is also optimized for OpenAI's large language model 'GPT-4 Turbo.' Microsoft also stated that 'ND MI300X v5 offers excellent cost performance for popular OpenAI models and open source models,' emphasizing that it performs well on models beyond OpenAI's own.

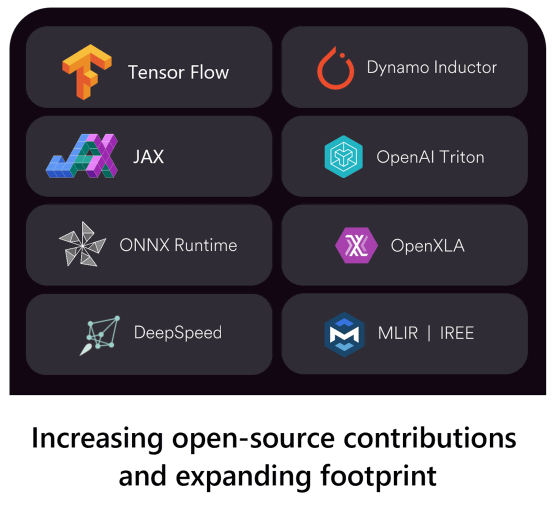

The ND MI300X v5 also supports AMD's AI development software 'ROCm.' Beyond common machine learning frameworks such as TensorFlow and PyTorch, it supports Microsoft's AI acceleration libraries such as 'ONNX,' 'DeepSpeed,' and 'MSCCL.'
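As a rough illustration of what ROCm support means for PyTorch users, the sketch below checks which GPU backend an installed PyTorch build targets. It assumes a ROCm build of PyTorch on the VM; the article does not describe the VM's software image. ROCm builds of PyTorch reuse the `torch.cuda` API and the `"cuda"` device string, and `torch.version.hip` is set only on ROCm builds. The helper functions `pytorch_gpu_backend` and `pick_device` are hypothetical names for this example and fall back gracefully when PyTorch is not installed.

```python
import importlib.util


def pytorch_gpu_backend() -> str:
    """Describe which GPU backend the installed PyTorch build targets."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch not installed"
    import torch
    # torch.version.hip is a version string on ROCm builds and None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    cuda_version = getattr(torch.version, "cuda", None)
    if hip_version:
        return f"ROCm (HIP {hip_version})"
    if cuda_version:
        return f"CUDA {cuda_version}"
    return "CPU-only build"


def pick_device() -> str:
    """Return "cuda" if a GPU is usable (NVIDIA, or AMD via ROCm), else "cpu"."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"
    import torch
    # On ROCm builds, torch.cuda.is_available() reports AMD GPUs.
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print("Backend:", pytorch_gpu_backend())
    device = pick_device()
    print("Using device:", device)
    if importlib.util.find_spec("torch") is not None:
        import torch
        x = torch.randn(256, 256, device=device)
        y = x @ x  # executes on the GPU when one is visible, otherwise on the CPU
        print("Result shape:", tuple(y.shape))
```

Because the device string stays `"cuda"` on ROCm, much existing PyTorch code written for NVIDIA GPUs can, in principle, run on the MI300X without device-name changes.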

Jason Henderson, corporate vice president of Office 365 product management at Microsoft, said, 'The new Azure VMs based on the MI300X have delivered great results for the Microsoft Copilot service. Microsoft's investment in AI accelerators will provide ongoing performance benefits to Copilot users.' He added, '(The ND MI300X v5) is part of the core AI infrastructure that powers Microsoft 365 Copilot, including Microsoft 365 Chat, Word Copilot, Teams Meeting Copilot, and more,' highlighting how the ND MI300X v5 is helping Microsoft improve its products.

in AI, Hardware, Web Service, Posted by log1o_hf