What exactly is NVIDIA's AI-specialized GPU, and how does it differ from a gaming GPU?

As AI research and development advances rapidly, NVIDIA, which develops GPUs for AI, is attracting a great deal of attention. Yet even if you know that GPUs are used to research, develop and operate AI, it is hard to picture what kind of GPUs are used and at what scale. This article therefore summarizes NVIDIA's AI-specialized GPUs and platforms.

H100 Tensor Core GPU - NVIDIA

https://www.nvidia.com/ja-jp/data-center/h100/

DGX H100: Enterprise AI | NVIDIA

https://www.nvidia.com/ja-jp/data-center/dgx-h100/

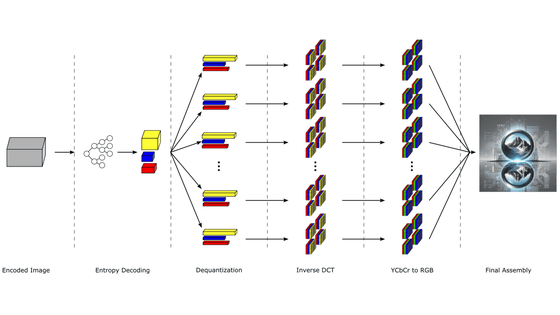

Providing an AI service requires two types of computation: 'learning' (also called training), which gathers the necessary data and builds the model data, and 'inference', which feeds prompts into the model data and generates output. For example, in the case of image generation AI, learning is 'the computation that builds model data from a large number of images and image descriptions', and inference is 'the computation that generates images using the model data'. Because both learning and inference involve an enormous number of calculations that can run in parallel, GPUs, which have far more cores than CPUs, can process them more efficiently.
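The split between learning and inference can be illustrated with a toy sketch. This is not how a large AI model is built; it assumes a one-variable linear model fitted by gradient descent, and the names `train`, `infer` and `samples` are hypothetical names chosen for this illustration.

```python
# Toy illustration: "learning" builds the model data from examples,
# "inference" applies that model data to a new input.

def train(samples, epochs=5000, lr=0.01):
    """Fit y = w * x + b by gradient descent and return the model data (w, b)."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(model, x):
    """Use the learned model data to produce an output for a new input."""
    w, b = model
    return w * x + b


# Training data generated from y = 3x + 1 (an assumption for this example).
samples = [(x, 3 * x + 1) for x in range(10)]

model = train(samples)       # learning: many passes over the data
print(infer(model, 20))      # inference: one cheap evaluation, close to 61
```

Each gradient is a sum of terms that do not depend on one another, which is the kind of work that can be spread across many cores at once.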

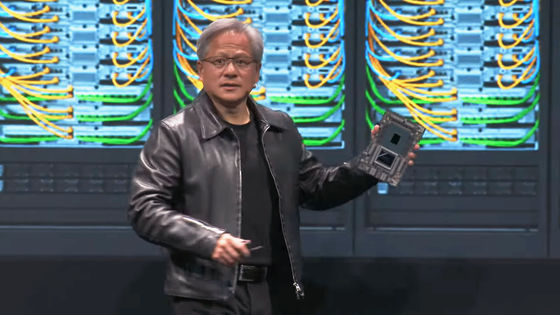

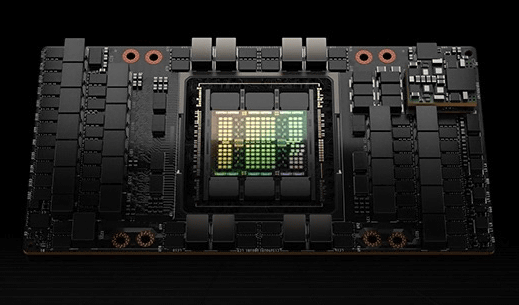

Learning and inference on small-scale models can run on GPUs sold to gamers, but gaming GPUs are optimized for game-related processing, so they cannot achieve maximum efficiency for AI workloads. NVIDIA therefore developed architectures optimized for AI learning and inference, such as 'Ampere' and 'Hopper', and commercialized AI-specialized GPUs such as the 'A100', which uses Ampere, and the 'H100', which uses Hopper.

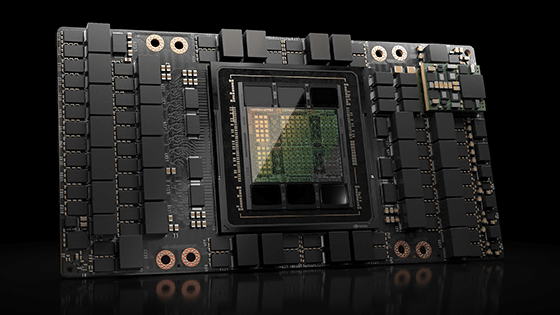

This is what the H100 looks like. NVIDIA has not announced a price for the H100, but the New York Times reports that it costs $25,000 (approximately 3.7 million yen) per unit. Because the H100 is so valuable, armored vehicles are used to transport it.

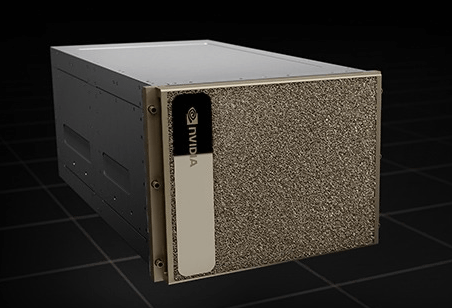

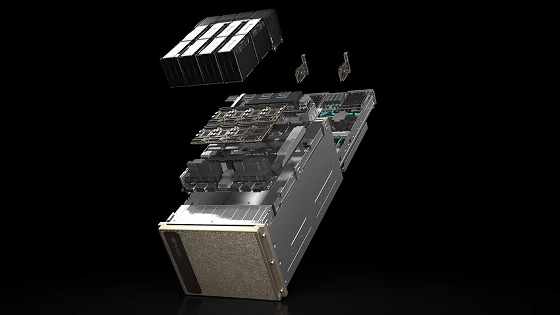

NVIDIA develops not only AI-specialized GPUs but also 'DGX', an AI processing system that combines those GPUs with CPUs, memory, storage and other components. The 'DGX H100', which is equipped with H100 GPUs, looks like this.

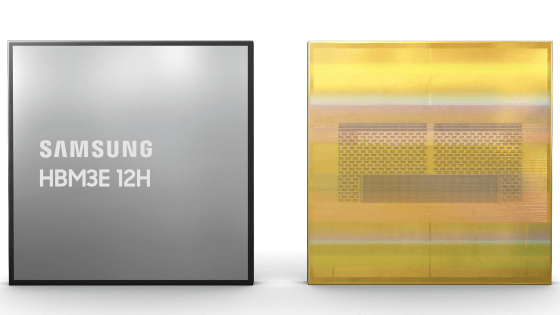

DGX H100 is equipped with eight H100 GPUs and a total of 640GB of GPU memory, and the GPUs can exchange data with one another at 900GB per second. It also has two x86 CPUs, 2TB of system memory, and 30TB of storage.

NVIDIA also offers an AI infrastructure called DGX SuperPOD that incorporates 32 DGX systems. At the time of writing, there are two types of DGX SuperPOD: one built on DGX A100 and one built on DGX H100. In other words, a DGX SuperPOD built on DGX H100 contains 256 H100 units (32 systems × 8 GPUs), each costing approximately 3.7 million yen.
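To get a feel for the scale, the figures quoted in this article can be multiplied out. The sketch below uses only numbers stated above; the per-unit price is the reported figure, not an official NVIDIA price, and the function name `superpod_summary` is a hypothetical name for this illustration.

```python
# Figures taken from the article text.
DGX_PER_SUPERPOD = 32
H100_PER_DGX = 8
GPU_MEMORY_PER_DGX_GB = 640

# Reported per-unit price (New York Times), not an official NVIDIA price.
REPORTED_PRICE_USD = 25_000
REPORTED_PRICE_JPY = 3_700_000  # approximate yen figure quoted in the article


def superpod_summary():
    """Multiply out the GPU count, GPU memory and reported GPU cost of one SuperPOD."""
    gpus = DGX_PER_SUPERPOD * H100_PER_DGX
    return {
        "gpus": gpus,
        "memory_per_gpu_gb": GPU_MEMORY_PER_DGX_GB // H100_PER_DGX,
        "total_gpu_memory_gb": DGX_PER_SUPERPOD * GPU_MEMORY_PER_DGX_GB,
        "gpu_cost_usd": gpus * REPORTED_PRICE_USD,
        "gpu_cost_jpy": gpus * REPORTED_PRICE_JPY,
    }


for key, value in superpod_summary().items():
    print(f"{key}: {value:,}")
```

On these figures, the GPUs alone in a single DGX SuperPOD come to roughly $6.4 million (about 947 million yen), before counting CPUs, memory, storage or anything else in the system.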

NVIDIA has also already developed the 'H200', which has twice the inference performance of the H100, and has announced that shipments will begin in 2024.

NVIDIA announces GPU 'H200' for AI and HPC, inference speed is twice as fast as H100 and HPC performance is 110 times that of x86 CPU - GIGAZINE

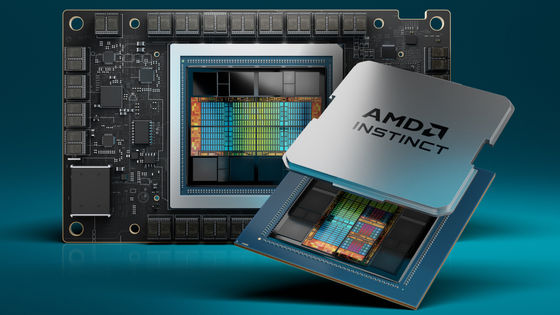

NVIDIA's rival AMD is also developing AI-specialized GPUs, and in December 2023 it announced the 'Instinct MI300', an AI-specialized GPU with 1.6 times the performance of the H100.

AMD breaks into NVIDIA's stronghold with AI chip 'Instinct MI300' series, OpenAI, Microsoft, Meta, and Oracle have already decided to adopt it - GIGAZINE

in Hardware, Posted by log1o_hf