Samsung announces 'HBM3E 12H', an ultra-high-speed memory with a transfer speed of 1280 GB/s and a capacity of 36 GB, promising faster AI training and more inference services running in parallel

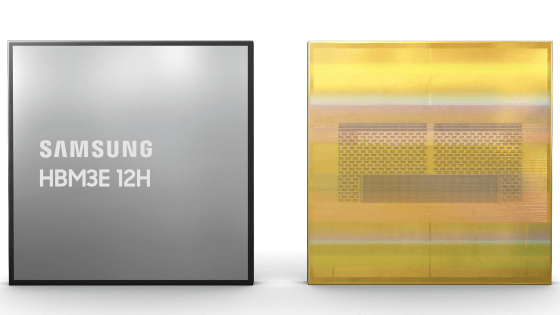

On Tuesday, February 27, 2024, Samsung announced the HBM3E 12H, a stacked memory with a capacity of 36GB and data transfer speeds of up to 1280GB/s.

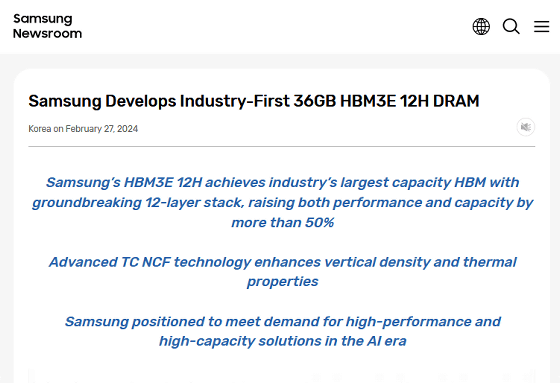

Samsung Develops Industry-First 36GB HBM3E 12H DRAM – Samsung Global Newsroom

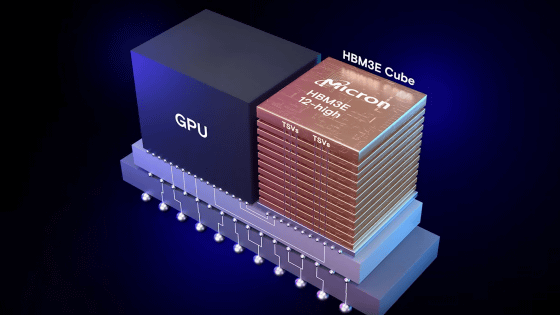

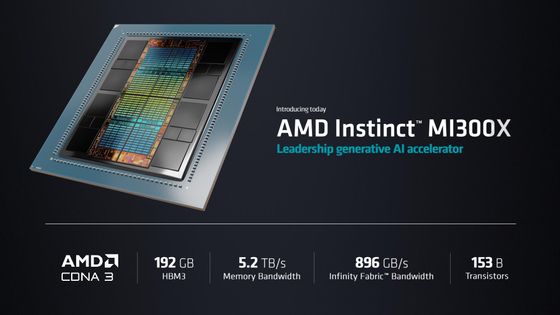

HBM is a stacked memory capable of ultra-high-speed data transfer, and it is used in fields such as AI research and development, where large amounts of data must be moved at high speed. For example, HBM3 is used in NVIDIA's 'DGX GH200' supercomputer for generative AI, and AMD's machine learning accelerator 'Instinct MI300X' also uses HBM3 as its memory.

AMD announces 'AMD Instinct MI300X', an accelerator for generative AI that supports up to 192GB of memory - GIGAZINE

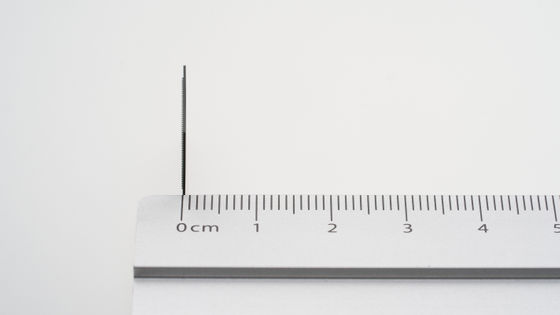

The 'HBM3E 12H' that Samsung announced on Tuesday, February 27, 2024, is a 12-layer stacked memory with a capacity of 36GB and a transfer speed of 1280GB/s. Samsung claims a '50% improvement in both capacity and transfer speed compared to 8-layer HBM3'.
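The figures above can be sanity-checked with a little arithmetic. The following is a minimal sketch rather than anything published by Samsung: the function names are hypothetical, the "implied baseline" simply inverts the 50% claim, and the per-pin rate assumes the 1024-bit interface width of the HBM3 standard, which the article itself does not state.

```python
# Figures quoted in the article for HBM3E 12H.
CAPACITY_GB = 36        # total stack capacity
BANDWIDTH_GB_S = 1280   # peak transfer speed
LAYERS = 12             # number of stacked DRAM layers
CLAIMED_GAIN = 0.50     # Samsung's "50%" claim over 8-layer HBM3

# Assumption (not from the article): HBM3-class stacks use a 1024-bit interface.
ASSUMED_BUS_WIDTH_BITS = 1024


def per_layer_capacity_gb(capacity_gb: float, layers: int) -> float:
    """Capacity contributed by each layer of the stack."""
    return capacity_gb / layers


def implied_baseline(value: float, gain: float) -> float:
    """Value the comparison product would need for `value` to be `gain` higher."""
    return value / (1 + gain)


def per_pin_rate_gbps(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate in Gbit/s for a given bus width."""
    return bandwidth_gb_s * 8 / bus_width_bits


per_layer = per_layer_capacity_gb(CAPACITY_GB, LAYERS)
baseline_capacity = implied_baseline(CAPACITY_GB, CLAIMED_GAIN)
baseline_bandwidth = implied_baseline(BANDWIDTH_GB_S, CLAIMED_GAIN)
pin_rate = per_pin_rate_gbps(BANDWIDTH_GB_S, ASSUMED_BUS_WIDTH_BITS)

print(f"Per-layer capacity: {per_layer:.1f} GB")
print(f"Implied baseline capacity: {baseline_capacity:.1f} GB")
print(f"Implied baseline bandwidth: {baseline_bandwidth:.0f} GB/s")
print(f"Per-pin rate (assuming 1024-bit bus): {pin_rate:.1f} Gbit/s")
```

Under these assumptions each layer contributes 3 GB, and a comparison product with exactly 50% less headroom would sit at 24 GB and roughly 853 GB/s.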

HBM3E 12H is manufactured using thermal compression non-conductive film (TC NCF). As a result, although it has a 12-layer structure, it fits within the same height as an 8-layer HBM stack and meets existing packaging requirements.

According to Samsung's simulations, a system using HBM3E 12H can train AI models 34% faster than a system using 8-layer HBM3, and can provide 11.5 times as many inference services simultaneously.

Sample shipments of HBM3E 12H have already begun, and mass production is scheduled to begin in the first half of 2024.


in Hardware, Posted by log1o_hf