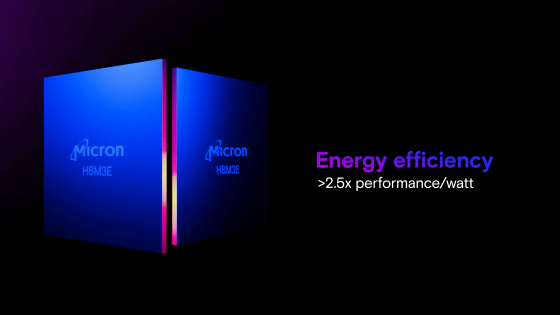

Micron unveils 36GB 12-stacked HBM3E, a high-capacity version of ultra-fast memory useful for AI development

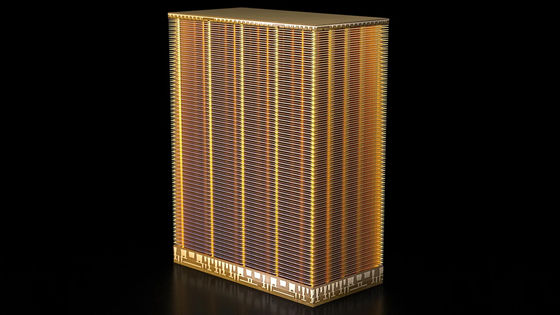

Micron has announced that it has begun shipping 36GB 12-stacked HBM3E. The new memory offers a bandwidth of over 1.2TB/s and is said to be useful for AI development.

Micron continues memory industry leadership with HBM3E 12-high 36GB | Micron Technology Inc.

HBM3E | Micron Technology Inc.

https://www.micron.com/products/memory/hbm/hbm3e

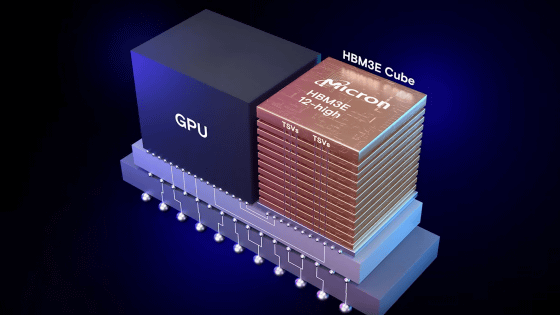

AI development requires handling huge amounts of data, so the capacity and bandwidth of the memory that moves the data are just as important as the processor that performs the calculations. Micron is already mass-producing 24GB 8-stacked HBM3E, which is used in NVIDIA's high-performance AI processing chip 'H200'.

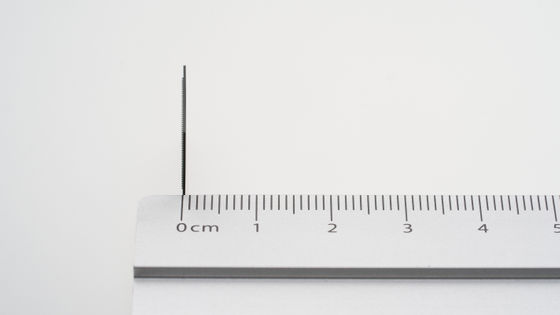

The newly developed 36GB 12-stacked HBM3E has a memory bandwidth of over 1.2TB/s, allowing even large AI models to run on a single processor, and consumes significantly less power than competitors' 24GB 8-stacked HBM3E.
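The capacity and bandwidth figures quoted above can be put side by side with some simple arithmetic. The following is only a rough sketch: it treats "over 1.2TB/s" as exactly 1200 GB/s (a lower bound), uses decimal gigabytes, and the function names are illustrative rather than taken from any Micron material.

```python
# Figures quoted in the article. "GB" is treated as decimal gigabytes,
# and "over 1.2TB/s" is taken as exactly 1200 GB/s (an assumed lower bound).
STACKS = {
    "8-stacked": {"capacity_gb": 24, "layers": 8},
    "12-stacked": {"capacity_gb": 36, "layers": 12},
}
BANDWIDTH_GB_PER_S = 1200


def capacity_per_layer_gb(stack: dict) -> float:
    """Capacity contributed by each stacked layer, in GB."""
    return stack["capacity_gb"] / stack["layers"]


def full_read_ms(capacity_gb: float, bandwidth_gb_per_s: float) -> float:
    """Time to stream the whole capacity once at the given bandwidth, in ms."""
    return capacity_gb * 1000 / bandwidth_gb_per_s


if __name__ == "__main__":
    for name, stack in STACKS.items():
        print(name, capacity_per_layer_gb(stack), "GB per layer")
    ratio = STACKS["12-stacked"]["capacity_gb"] / STACKS["8-stacked"]["capacity_gb"]
    print("capacity ratio (12-stacked / 8-stacked):", ratio)
    print("full read of 36GB:", full_read_ms(36, BANDWIDTH_GB_PER_S), "ms")
```

Under these assumptions, both products work out to 3GB per layer, so the 12-stacked version gains its 50% extra capacity purely from the additional layers, and reading all 36GB once at 1.2TB/s takes about 30 milliseconds.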

Micron's 36GB, 12-stacked HBM3E is already shipping to industry partners and is undergoing testing for adoption in the AI ecosystem.

Samsung has also started shipping 36GB 12-stacked HBM3E.

Samsung announces ultra-high-speed memory 'HBM3E 12H' with a transfer speed of 1280GB/s and a capacity of 36GB, enabling faster AI learning and increased parallel execution of inference - GIGAZINE

in Hardware, Posted by log1o_hf