Report that image generation AI such as Stable Diffusion can generate learned images almost as they are based on 'memory'

Image generation AI is subject to intense legal and ethical debate, one of the issues being the huge datasets used for training. The dataset used for AI learning includes many images collected on the Internet, and it is regarded as a problem that the copyright issue is not clear. Google, DeepMind, the University of California, Berkeley, Princeton University, A group of researchers from the ETH Zurich made a presentation.

[2301.13188] Extracting Training Data from Diffusion Models

Paper: Stable Diffusion “memorizes” some images, sparking privacy concerns | Ars Technica

https://arstechnica.com/information-technology/2023/02/researchers-extract-training-images-from-stable-diffusion-but-its-difficult/

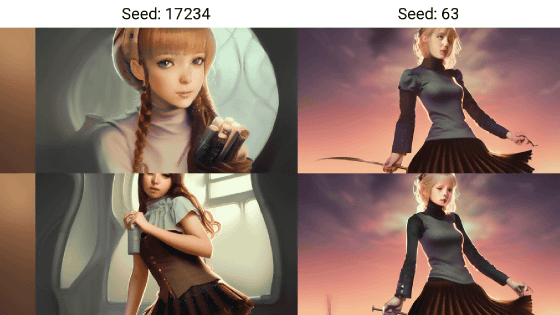

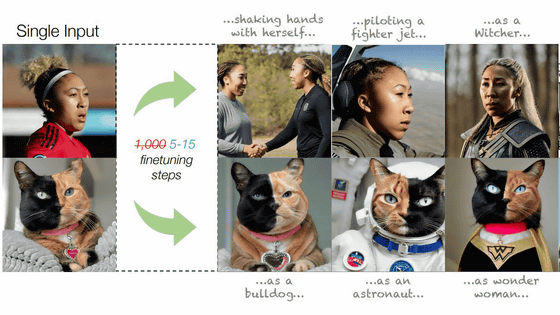

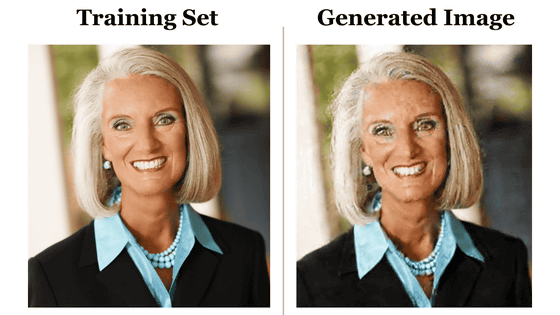

The research team generated 175 million images with Stable Diffusion v1.4, out of which 350,000 extracted images similar to 160 million images of the dataset used for learning. As a result, it was possible to identify 109 images that are considered to be very similar to 94 directly matched images.

Below is an image (Original) included in the dataset and an image (Generated) generated by Stable Diffusion v1.4. Although it is not the same as digital data, it matches to the extent that it can be regarded as almost the same as a photograph.

Of course, the size of the dataset used for learning Stable Diffusion is much larger than the file size of the model data of Stable Diffusion, which is about 2 GB, so the image data of the dataset remains in Stable Diffusion. not. The matching rate shown in the paper is also only 0.03%, so the probability of AI extracting the exact same image as contained in the dataset is very low.

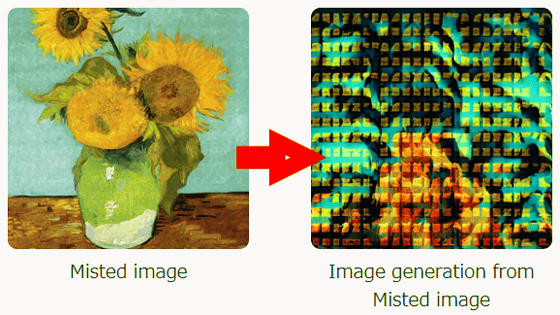

Image generation AI such as Stable Diffusion does not cut and paste the learned images as they are, but compresses the learning results obtained from a huge number of images into statistical weights and stores them in a low-dimensional “latent space”. It is a mechanism to generate an image from noise using space. Therefore, the dataset image itself is not included in the AI model, and in principle it was said that the dataset image would not be output as it is.

Detailed illustration of how the image generation AI 'Stable Diffusion' generates images from text - GIGAZINE

However, when training an image generation AI on a dataset, if the dataset contains many of the same images, the AI will overfit to the images and lose its versatility (overlearning). may occur. In fact, it was pointed out that Stable Diffusion is overlearning Leonardo da Vinci's ' Mona Lisa '. In other words, overlearning for a specific image remains in AI in the form of 'memory'.

Q: i saw stable diffusion create an exact duplicate of the mona lisa just like a little fucked up. you said the ai isn't plagiarizing.

— mx. curio (commissions era) (@ai_curio) August 31, 2022

A: this is called 'overfitting' and its a sign that an ai has SO MANY duplicates of a particular thing. i'll let discord-me explain.pic.twitter.com/3g6leb4Ad9

Eric Wallace, one of the authors of the paper, suggested on Twitter that AI model developers should deduplicate images from datasets to reduce memory.

See our paper for a lot more technical details and results.

— Eric Wallace (@Eric_Wallace_) January 31, 2023

Speaking personally, I have many thoughts on this paper. First, everyone should de-duplicate their data as it reduces memorization. However, we can still extract non-duplicated images in rare cases! [6/9] pic.twitter.com/ 5fy8LsNbjb

The content of the paper announced this time is based on the assertion that ``the image generation AI does not memorize the images of the learned dataset'' and ``unless the image generation AI outputs the images, the images contained in the dataset are in a private state.'' will be contrary. The research team acknowledges that this paper may be used as evidence in court as the number of lawsuits over image generation AI such as Stable Diffusion increases in the future, but the research purpose is 'potential in the future'. To improve diffusion models and reduce the harm caused by overfitting memories.'

Related Posts:

in Mobile, Software, Web Service, Science, Art, Posted by log1i_yk