An easy-to-understand illustration of ``how to draw a picture'' that you can understand if you know how to master the image generation AI ``Stable Diffusion''

The image generation AI `` Stable Diffusion '', which was released to the public free of charge in August 2022, can generate arbitrary character strings and images by anyone with a CPU equipped with an NVIDIA GPU or an online execution environment such as Google Colaboratory. can be generated. AI Pub, which explains AI on Twitter, explains how such Stable Diffusion generates images.

// Stable Diffusion, Explained //

/15pic.twitter.com/VX9UVmUaKJ — AI Pub (@ai__pub) August 21, 2022

You've seen the Stable Diffusion AI art all over Twitter.

But how does Stable Diffusion _work_?

A thread explaining diffusion models, latent space representations, and context injection:

1

You can roughly understand how Stable Diffusion generates images by looking at the GIF animation that you can see by clicking the image below.

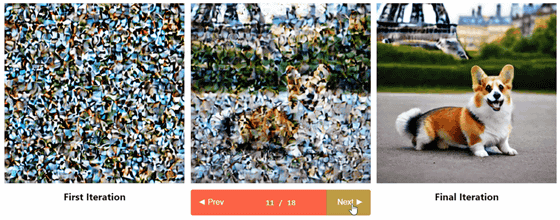

In the first place, 'Diffusion' in Stable Diffusion means 'diffusion'. This diffusion is the process of repeatedly adding random small noises to the image, proceeding from left to right in the image below. Stable Diffusion also does the reverse, right-to-left, that is, transforming noise into an image.

First, a one-tweet summary of diffusion models (DMs).

— AI Pub (@ai__pub) August 21, 2022

Diffusion is the process of adding small, random noise to an image, repeatedly.

Diffusion models reverse this process, turning noise into images, bit-by-bit.

Photo credit: @AssemblyAI

2/15pic.twitter.com /Jk34utlZxE

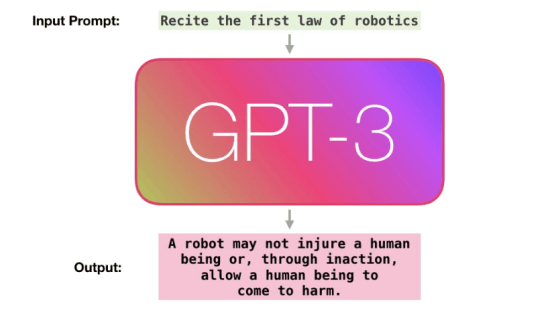

Then, a trained neural network is in charge of the process of converting this noise into an image. What the neural network learns is the function f(x,t), which slightly denoises x to produce what it looks like t-1 times.

How do DMs turn noise into images?

— AI Pub (@ai__pub) August 21, 2022

By training a neural network to do so gradually.

With the sequence of noised images = x_1, x_2, ... x_T,

The neural net learns a function f(x,t) that denoises x 'a little bit', producing what x would look like at time step t-1.

3/15pic.twitter.com/ QZJX89X6Rk

To turn pure noise into a clean image, you can apply this function many times. Stable Diffusion processing is like f(f(f(f(....f(N, T), T-1), T-2)..., 2, 1), a nested state of functions where N is pure noise and T is the number of steps.

To turn pure noise into an HD image, just apply f several times!

— AI Pub (@ai__pub) August 21, 2022

The output of a diffusion model really is just

f(f(f(f(....f(N, T), T-1), T-2)..., 2, 1)

where N is pure noise, and T is the number of diffusion steps.

The neural net f is typically implemented as a U-net.

4/15pic.twitter.com /lxYvucaCGt

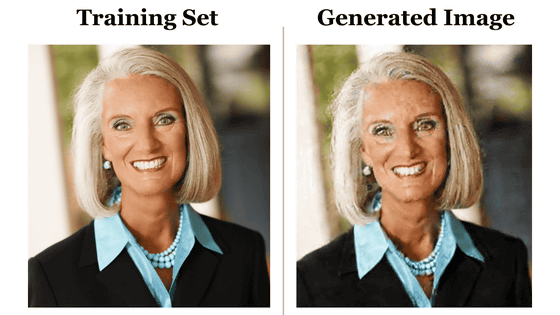

Of course, doing a series of tasks with 512 x 512 pixels is very computationally expensive and costly.

The key idea behind Stable Diffusion:

— AI Pub (@ai__pub) August 21, 2022

Training and computing a diffusion model on large 512 x 512 images is _incredibly_ slow and expensive.

Instead, let's do the computation on _embeddings_ of images, rather than on images themselves.

5/15 pic.twitter.com/h8qRyxeqY1

So instead of using the actual pixel space, we use the lower dimensional latent space to reduce this computational burden. Specifically, we use an encoder to compress the image X to a latent spatial representation z(x), and perform the Diffusion Process and Denoising U-Net on z(x) instead of x. flow. In the figure below, ε is the encoder and D is the decoder.

So, stable diffusion works in two steps.

— AI Pub (@ai__pub) August 21, 2022

Step 1: Use an encoder to compress an image 'x' into a lower-dimensional, latent-space representation 'z(x)'

Step 2: run diffusion and denoising on z(x), rather than x.

Diagram below!

6/15pic.twitter.com /SPHCyZDOSD

The following article also explains how the neural network understands the image.

How does the neural network understand images - GIGAZINE

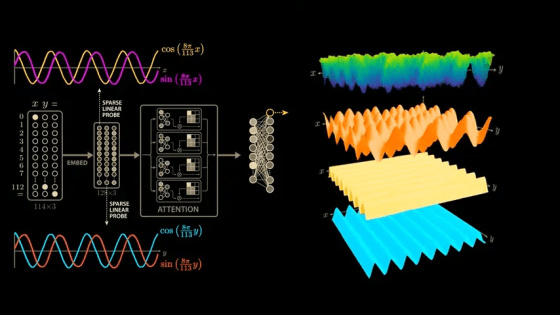

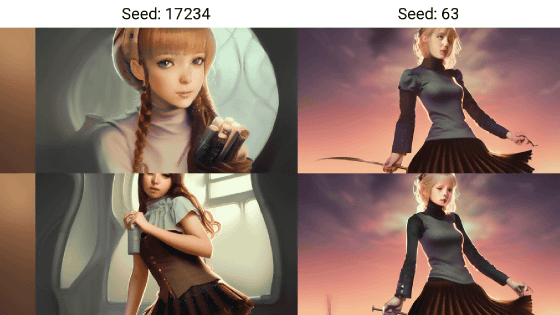

Stable Diffusion can also input strings (prompts) as function variables. By entering the prompt, the direction of denoising is determined to some extent.

But where does the text prompt come in?

— AI Pub (@ai__pub) August 21, 2022

I lied! SD does NOT learn a function f(x,t) to denoise xa 'little bit' back in time.

It actually learns a function f(x, t, y), with y the 'context' to guide the denoising of x.

Below, y is the image label 'arctic fox'.

8/15 pic.twitter.com/z4WVWJ8NVu

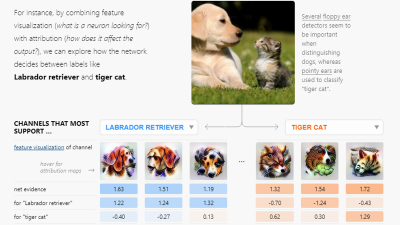

Stable Diffusion allows the 'context' of the prompt to intervene with simple concatenation during the denoising process and cross-attention just before decoding.

But how does SD process context?

— AI Pub (@ai__pub) August 21, 2022

The 'context' y, alongside the time step t, can be injected into the latent space representation z(x) either by:

1) Simple concatenation

2) Cross-attention

Stable diffusion uses both.

10/15 pic.twitter.com/WaDmy0RyIB

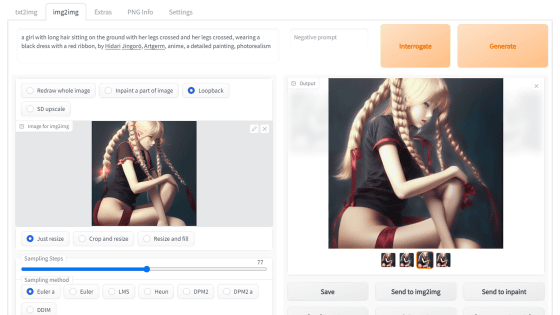

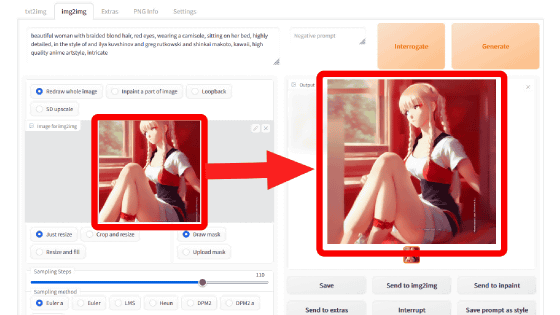

Another major feature of Stable Diffusion is that it can handle images in addition to strings as context. Stable Diffusion simultaneously performs image restoration and image synthesis from image data.

The cool part not talked about on Twitter: the context mechanism is incredibly flexible.

— AI Pub (@ai__pub) August 21, 2022

Instead of y = an image label,

Let y = a masked image, or y = a scene segmentation.

SD trained on this different data, can now do image inpainting and semantic image synthesis!

11/15 pic.twitter.com/wzCF8OOV0p

In addition, the following article also summarizes the mechanism of Stable Diffusion in detail.

What is the mechanism of image generation AI 'Stable Diffusion' that raises issues such as artist rights violations and pornography generation? -GIGAZINE

Related Posts:

in Software, Posted by log1i_yk