Image generation AI "Stable Diffusion" version 3 will let artists request that their own images not be used for training

Emad Mostaque, founder of the image generation AI startup Stability AI, announced on Twitter that the company will allow artists to remove their work from the training dataset for the upcoming Stable Diffusion 3.0. The move follows a tweet from Spawning, an artist advocacy group, saying that Stability AI would honor opt-out requests.

Opt-in as well of course, about 50:50 both ways.

— Emad (@EMostaque) December 14, 2022

Technically this is tags for LAION and coordinated around that.

It's actually quite difficult due to size (eg what if your image is on a news site?)

Exploring other mechanisms for attribution etc, welcome constructive input. https://t.co/uYvN4yHldo

Stability AI plans to let artists opt out of Stable Diffusion 3 image training | Ars Technica

https://arstechnica.com/information-technology/2022/12/stability-ai-plans-to-let-artists-opt-out-of-stable-diffusion-3-image-training/

Stable Diffusion, an image generation AI, has been criticized for training on LAION, a large-scale dataset of images collected from the internet, without consulting the rights holders.

AI that generates images that look like they were drawn by a human artist is criticized for "infringing on the artist's rights" - GIGAZINE

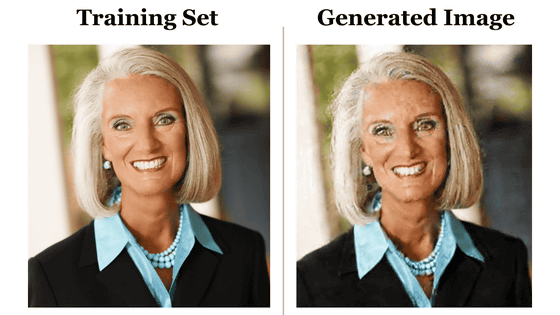

To address this, the Have I Been Trained? site lets you search the dataset and, if you find your own images in it, "opt out" by requesting their removal.

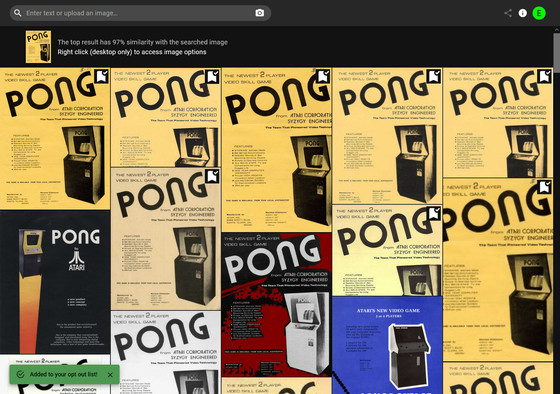

The tech news site Ars Technica actually created an account on Have I Been Trained? and tried the process.

A search returned a large number of PONG flyers, so the writer right-clicked several of the thumbnails and selected "opt out of this image" from the pop-up menu. Ars Technica of course does not own the rights to the PONG flyers, yet at no point in the process was there any attempt to verify the user's identity or legal control over the images.

At the time of writing, legal interpretations are divided under US and EU law on whether images collected without permission may be used in AI datasets. Some argue that "it is fair use and does not infringe copyright," while others argue that "building AI datasets does not qualify as fair use." It has also been pointed out that the opt-out process prepared by Stability AI does not meet the definition of consent in the EU's General Data Protection Regulation (GDPR). For this reason, some argue that the process should not be an opt-out, in which people must request removal from the dataset, but an opt-in, in which people agree to be included in it.

Ars Technica said, "If Stability AI, LAION, and Spawning undertook the enormous work of legally verifying the rights to every image with an opt-out request, who would pay for that effort? Would people entrust these organizations with the personal information needed to verify their identity? We appreciate the transparent stance. Everything is in flux and still at a very early stage."


in Software, Posted by log1i_yk