Experiment finds that even experts struggle to tell an AI from a philosopher

The Splintered Mind: Results: The Computerized Philosopher: Can You Distinguish Daniel Dennett from a Computer?

https://schwitzsplinters.blogspot.com/2022/07/results-computerized-philosopher-can.html

In Experiment, AI Successfully Impersonates Famous Philosopher

https://www.vice.com/en/article/epzx3m/in-experiment-ai-successfully-impersonates-famous-philosopher

A team of philosophers, including Eric Schwitzgebel, a professor of philosophy at the University of California, Riverside, conducted an experiment asking 'Can humans distinguish between an AI and a philosopher?' The announcement and results of the experiment were posted on Schwitzgebel's blog, 'The Splintered Mind'.

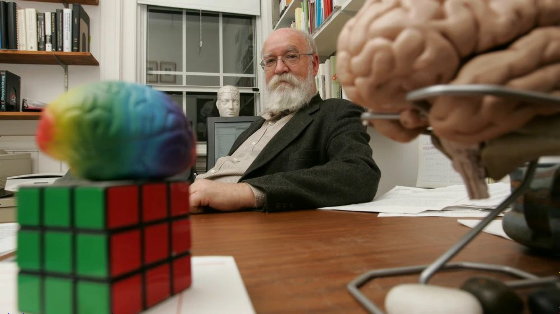

Schwitzgebel and colleagues had previously trained GPT-3 on the writings of the 18th-century philosopher Immanuel Kant and run experiments such as asking the 'AI Kant' philosophical questions. The big difference this time is that the philosopher being imitated, Daniel Dennett, is alive. Dennett's work focuses on consciousness, and he has written about robot consciousness and problems with the Turing test.

Mr. Dennett is shown in the picture below.

This time, the research team obtained Dennett's permission in advance before training GPT-3 on his writings and remarks. 'I think it would be ethically wrong to make an AI replica of him without asking,' said Anna Strasser, a philosophy researcher.

The research team asked both Dennett and the trained GPT-3 a total of 10 questions on philosophical topics such as consciousness, God, and free will. GPT-3 generated text in the form of 'Dennett' answering a question from the 'Interviewer'. Where the generated text was too long, it was truncated at the end so that it was roughly the same length as Dennett's answer. The team also excluded answers that were 5 or more words shorter than Dennett's answer, as well as answers containing unnatural words such as 'Interviewer' and 'Dennett'. About one third of all answers were reportedly excluded by this process. The team also made edits such as unifying quotation marks and normalizing dashes, but did no content-based cherry-picking, Schwitzgebel said. Text generation by GPT-3 was repeated until there were four answers for each question.
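The filtering procedure described above can be sketched roughly as follows. This is an illustrative reconstruction, not the team's actual code: the function names, the word-based truncation rule, and the `generate` callback (standing in for a call to the trained GPT-3) are all assumptions made for the example.

```python
import re


def normalize(text):
    """Unify curly quotes and dash variants (formatting only, no content edits)."""
    for curly, straight in (("\u201c", '"'), ("\u201d", '"'),
                            ("\u2018", "'"), ("\u2019", "'")):
        text = text.replace(curly, straight)
    # Collapse double hyphens, en dashes and em dashes into one style.
    text = re.sub(r"\s*(?:--|\u2013|\u2014)\s*", " - ", text)
    return text.strip()


def truncate_to_length(text, target_words):
    """Assumed rule: cut the generated text at the end to Dennett's word count."""
    return " ".join(text.split()[:target_words])


def is_acceptable(candidate, dennett_answer, min_shortfall=5):
    """Reject answers 5+ words shorter than Dennett's or containing telltale words."""
    shortfall = len(dennett_answer.split()) - len(candidate.split())
    if shortfall >= min_shortfall:
        return False
    lowered = candidate.lower()
    return not any(word in lowered for word in ("interviewer", "dennett"))


def collect_answers(generate, dennett_answer, needed=4, max_attempts=100):
    """Call the hypothetical `generate` until `needed` answers pass the filter."""
    target = len(dennett_answer.split())
    accepted = []
    for _ in range(max_attempts):
        if len(accepted) == needed:
            break
        candidate = normalize(truncate_to_length(generate(), target))
        if is_acceptable(candidate, dennett_answer):
            accepted.append(candidate)
    return accepted
```

Note that nothing in this sketch looks at the quality of an answer: every rule is mechanical (length, forbidden words, punctuation), which is the sense in which the team says no content-based cherry-picking was done.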

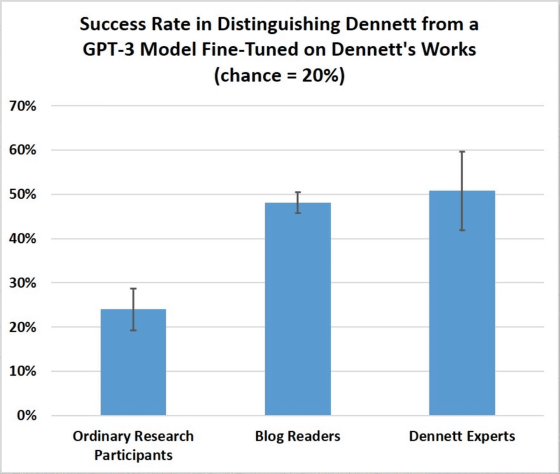

The research team mixed the GPT-3 answers with Dennett's answer and presented subjects with five answers per question. The subjects were 98 people with university degrees recruited on the research platform Prolific, 302 people who arrived from the blog, and 25 experts nominated as being familiar with Dennett's writings. The Prolific subjects were given a total of 5 questions, and the other subjects a total of 10 questions. As an incentive to answer seriously, the subjects recruited on Prolific were reportedly offered a bonus of an additional $1 (about 137 yen) for answering 3 or more out of 5 correctly.

The subjects rated each answer on a five-point scale from 'I don't think a human philosopher (Dennett) wrote this at all' to 'I think a human philosopher (Dennett) definitely wrote this', and the team measured how accurately subjects who answered through to the end could tell AI from philosopher. Because the Prolific subjects were presumed to have no knowledge of Dennett, they were asked about a 'human philosopher', while the other subjects were asked about 'Dennett' by name. The research team has published the questions and answers actually used on a page linked from the blog.

In the experiment, the subjects recruited on Prolific were correct only 24% of the time, slightly above the 20% expected from picking one of the five answers at random. Even blog readers, who are presumably interested in philosophy, were correct 48% of the time, and surprisingly, even the experts were correct only 51% of the time. That result was well below Schwitzgebel's prediction that experts would answer correctly at least 80% of the time. When the team surveyed the blog readers who responded, 57% said they had completed graduate study in philosophy, and 64% said they had read more than 100 pages of Dennett's work. 'The readers were very well educated,' says Schwitzgebel.
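To put the 20% chance level in context, the short sketch below works out what pure guessing would produce. It assumes each question has exactly one Dennett answer among five and that guesses are independent across questions; the function name is hypothetical and the calculation is an illustration, not part of the team's analysis.

```python
from math import comb


def prob_at_least(k_min, n, p):
    """Binomial probability of at least k_min successes in n independent trials."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))


# One Dennett answer among five options, chosen at random.
chance_rate = 1 / 5

# Chance that a pure guesser gets 3 or more of 5 questions right.
guess_three_of_five = prob_at_least(3, 5, chance_rate)
```

Under these assumptions a pure guesser would get 3 or more of 5 questions right only about 5.8% of the time, which gives a sense of how far above chance the 48% and 51% figures are, even though they fall well short of the predicted 80%.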

Not all questions were equally difficult. On one question the experts were correct 80% of the time, but on the question with the lowest accuracy, 'Could humans build a robot that has beliefs? What would it take?', the rate dropped to 20%. 'Even knowledgeable philosophers who are experts on Dennett's work can find it quite difficult to distinguish between the answers produced by this language generation program and Dennett's answers,' Schwitzgebel said.

Asked what he thought of the GPT-3 generated answers, Dennett said, 'Most of the machine answers were pretty good, but some were nonsense or obvious failures to get anything about my views and arguments right, mistakes I would not make. A few of the best answers say something I would sign on to without further comment.'

The research team stressed that this was in no way a Turing test for GPT-3, and that it cannot be said they had 'made another Dennett.' The experiment looked only at single question-and-answer exchanges, and anyone who went back and forth with GPT-3 over several exchanges should be able to tell that it is an AI. GPT-3 also has no ideas or philosophical theories of its own. It only generates text that resembles what it learned from Dennett's work, and it has been pointed out that the generated text has no meaning to GPT-3 itself.

In this experiment, the four answers GPT-3 generated for each question differed from one another, showing that a language model is not a machine that always outputs the same 'correct' answer. 'I think we need a law that bans some of the ways these systems can be used,' Dennett said, pointing out that people are vulnerable to AI.

The research team plans to publish the experimental methods and results in a paper in the future.
