Startup strongly criticized for an experiment that steered young people in mental distress to a chatbot

Chatbots, in which AI responds to typed questions and requests, can answer humans very naturally, so they are also being used in the field of mental health care.

'Horribly Unethical': Startup Experimented on Suicidal Teens on Social Media With Chatbot

https://www.vice.com/en/article/5d9m3a/horribly-unethical-startup-experimented-on-suicidal-teens-on-facebook-tumblr-with-chatbot

Improving uptake of mental health crisis resources: Randomized test of a single-session intervention embedded in social media

https://doi.org/10.31234/osf.io/afvws

Startup Uses AI Chatbot to Provide Mental Health Counseling and Then Realizes It 'Feels Weird'

https://www.vice.com/en/article/4ax9yw/startup-uses-ai-chatbot-to-provide-mental-health-counseling-and-then-realizes-it-feels-weird

Rob Morris, founder of the mental health non-profit organization Koko, announced on January 7, 2023, "We used GPT-3 to provide mental health support to about 4,000 people," and shared the details. According to Morris, messages created by AI and reworked by humans received higher ratings than messages written by humans alone, and response times fell by 50%. But once the people receiving mental health care learned that the messages were machine-generated, the messages mostly stopped working, and Morris said, "The empathy simulated by the chatbot feels strange and empty."

We provided mental health support to about 4,000 people — using GPT-3.

— Rob Morris (@RobertRMorris) January 6, 2023

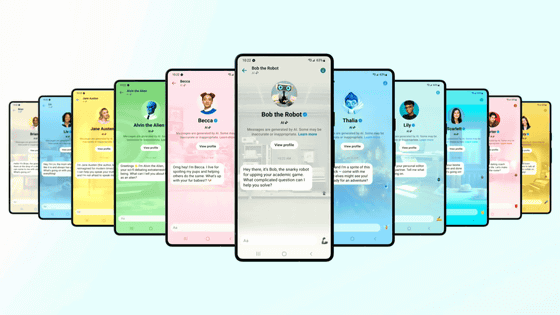

When Koko's algorithm detects "people who may be suffering from mental health problems" on platforms such as Facebook, Discord, and Tumblr, it directs those users to Koko's platform. By agreeing to Koko's Privacy Policy and Terms of Service, users are treated as having agreed to participate in the experiment.


When users start using Koko, the chatbot asks, "What are you worried about?" It then says, "Tomorrow I will ask how you are doing. It is very important to get this feedback so that we can learn how to improve things. Can you promise to receive that notification?" If the user agrees, a soothing cat animation is displayed with the message "Thank you! There is a cat here!" If the user does not agree, the same cat animation is displayed with the message "It's okay, we still love you. Here's a cat. Let's continue to create your safety plan."

Next, the user is asked a series of questions: "Why are you worried?", "What can you do to deal with it?", and "Is there someone you can talk to about your worries?" Finally, as a mental health "safety plan," the user records who they can call in an emergency when their mental health is severely compromised, or a dedicated hotline if they have no specific person, and is prompted to save a "number to contact in case of emergency" and take a screenshot. Securing such a reliable hotline is important, but on the view that a hotline reachable from anywhere in the world 24 hours a day, 365 days a year is not feasible, Morris hopes that platforms such as social networks will guide users seeking mental health care to Koko's chatbot.

According to the preprint, which has not yet been peer reviewed, 374 participants were randomly assigned to either "a group that was guided to a hotline number that can be consulted by phone" or "a group that completed a one-minute session guided by a chatbot." The paper reports that "people who were directed to Koko chatbot sessions were more likely to report a significant decrease in feelings of hopelessness after 10 minutes than those who were directed to the hotline." The authors also concluded that the activities recommended in Koko's session, namely hugging an animal, watching something interesting, playing a video game, and drawing, reduced feelings of helplessness.

The experiment Morris announced has drawn the attention of AI ethicists, experts, and some users. Elizabeth Marquis of the University of Michigan said, "What was new and surprising was that the touted benefits of the experiment implied that people dislike chatbots in mental health care. However, there is no mention of a consent process or ethical review, and the experiment is being conducted via an app, which is a distinct red flag."

Emily M. Bender, a professor of linguistics at the University of Washington, told Motherboard, "Trusting AI to treat mental health patients can do a lot of harm. A language model is a program designed to generate plausible-sounding text given training data and input prompts. Chatbots have no empathy, they don't understand what is being said, and they don't even understand the situation they are in. Using something like that in the delicate field of mental health is taking unknown risks," pointing out the direct problems of using chatbots for mental health. She added, "An even more important question is who is responsible if a chatbot makes a harmful suggestion. It looks like they are running an experiment that shifts accountability onto people."

Arthur Caplan, a professor of bioethics at New York University, criticized the study, saying, "It's completely, horribly unethical. Experimentally tinkering with unproven methods on someone who might be having suicidal thoughts is just terrible." The preprint states that "in consultation with the Institutional Review Board (IRB) of Stony Brook University, the requirement for informed consent from subjects was waived." However, the subjects of the experiment are people in some degree of mental distress, and it is hard to imagine that such people would read the terms and conditions carefully, so many researchers criticized Koko's experiment for "lacking protection for participants." Motherboard asked the responsible department at Stony Brook University for confirmation, but reported receiving no response.

In response to the criticism, Morris said, "People who participated in the experiment always received a message stating that it was written in collaboration with Koko Bot, and they could choose whether or not to read the text for their own mental care." Koko also stated that it did not use any personal information and does not plan to publicly release detailed data from this study.

Explaining the significance of the research, Morris told Motherboard, "The purpose of this study is to highlight the need to improve the resources that giant social media platforms provide to at-risk users. The platforms have stayed silent when pressed on whether they can support users in crisis." Koko will provide social media companies with a program that directs users to Koko as a "suicide prevention kit."


in Web Service, Posted by log1e_dh