Study finds that answers to patient questions generated by the interactive AI 'ChatGPT' are preferred over answers by human doctors

ChatGPT, an interactive AI developed by OpenAI, can generate text with such high accuracy that a new study has reported that medical professionals preferred its answers to patient questions over those written by physicians.

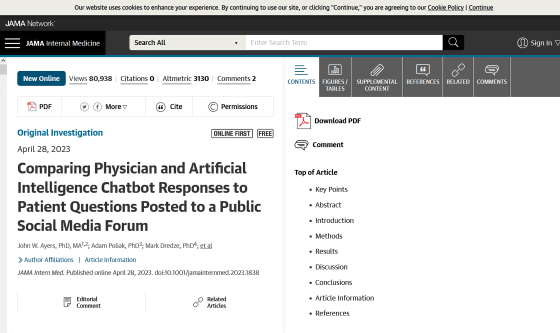

Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum | Health Informatics | JAMA Internal Medicine | JAMA Network

https://dx.doi.org/10.1001/jamainternmed.2023.1838

Study Finds ChatGPT Outperforms Physicians in High-Quality, Empathetic Answers to Patient Questions

https://today.ucsd.edu/story/study-finds-chatgpt-outperforms-physicians-in-high-quality-empathetic-answers-to-patient-questions

AI has better 'bedside manner' than some doctors, study finds | Artificial intelligence (AI) | The Guardian

https://www.theguardian.com/technology/2023/apr/28/ai-has-better-bedside-manner-than-some-doctors-study-finds

To answer the question "Can ChatGPT respond appropriately to patients' questions?", the research team ran an experiment comparing ChatGPT's answers to patient questions with doctors' answers to the same questions. "ChatGPT may be able to pass a medical licensing exam, but answering patient questions directly, accurately, and empathetically is a different story," said Davey Smith, professor of medicine at the University of California, San Diego, and co-author of the paper.

To collect patient questions and doctors' answers for the experiment, the research team turned to 'AskDocs', a forum on the online bulletin board Reddit. On AskDocs, doctors whose identities have been verified by moderators respond to questions from patients, and a large number of questions are posted and answered there.

The research team randomly sampled 195 question-and-answer exchanges from AskDocs and entered each original question into ChatGPT to generate an answer. The team then asked three medical professionals to evaluate each question alongside the ChatGPT response and the doctor's response. The evaluators were not told whether each response came from ChatGPT or a human doctor.

The evaluators preferred ChatGPT's answer over the human doctor's answer in 78.6% of evaluations, and the proportion of answers rated as 'high quality' was about 3.6 times higher for ChatGPT. Furthermore, 45.1% of ChatGPT's responses were rated as "empathetic" or "very empathetic," compared with 4.6% of the human doctors' responses.

According to the research team, ChatGPT's answers were longer than the human doctors' answers, which may have contributed to their higher ratings. "ChatGPT's messages responded with nuanced and accurate information and often addressed more aspects of the patient's question than the doctor's answer," said a study co-author.

"I never thought I'd say this, but ChatGPT is a prescription I'd like to give to my inbox," said Aaron Goodman, co-author of the paper and associate professor of medicine at the University of California, San Diego. "This tool will change the way I support my patients." Co-author Adam Poliak, Ph.D., assistant professor of computer science at Bryn Mawr College in the United States, said, "Our study compared ChatGPT with doctors, but the ultimate solution is not to kick doctors out. Rather, doctors can use ChatGPT to provide better, more empathetic care."

In California and Wisconsin, efforts are already underway to use GPT-4, OpenAI's large language model, in medical settings.

ChatGPT Will See You Now: Doctors Using AI to Answer Patient Questions - WSJ

https://www.wsj.com/articles/dr-chatgpt-physicians-are-sending-patients-advice-using-ai-945cf60b

Epic, a Wisconsin-based medical technology company, has developed a tool called MyChart that lets patients send messages to healthcare professionals. MyChart usage grew sharply during the COVID-19 pandemic, reportedly rising from 106 million logins in Q1 2020 to 260 million in Q1 2023.

In April 2023, Epic began testing the use of OpenAI's GPT-4, accessed through Microsoft's Azure cloud service, to generate replies to patient questions. The University of California, San Diego School of Medicine is also participating in the test. Its primary care director, Marlene Millen, said she has seen a surge in messages from patients since the COVID-19 pandemic, and that AI-powered reply-drafting tools have become a source of hope for some medical staff.

When a healthcare worker clicks on a message from a patient, Epic's AI tool reads the message along with a condensed version of the electronic medical record stored at the hospital and instantly generates a draft reply. If the draft is correct, the healthcare worker can edit the details or send it as is; if it is incorrect, they can rewrite it themselves. According to Millen, if a patient's message mentions something like "I just got back from a trip," the AI can ask whether the trip went well, reproducing the kind of personal touch a healthcare professional would add.

On the other hand, interactive AIs such as ChatGPT are known to sometimes make up things that do not exist, so their output cannot be trusted as is. For this reason, the University of California, San Diego School of Medicine team does not allow the AI to answer questions seeking medical advice; it is used only for limited requests, such as prescription requests or document requests.
