Analysis of 50,000 peer review reports for computer science papers reveals that 7-17% were AI-generated

In recent years, AI that can generate natural sentences at the same level as humans, such as ChatGPT, and AI that can generate highly accurate images and illustrations simply by inputting text have appeared one after another, and in a survey conducted so far, about 17% of students responded that they '

Monitoring AI-Modified Content at Scale: A Case Study on the Impact of ChatGPT on AI Conference Peer Reviews - liang24b.pdf

(PDF file) https://raw.githubusercontent.com/mlresearch/v235/main/assets/liang24b/liang24b.pdf

Monitoring AI-Modified Content at Scale: A Case Study on the Impact of ChatGPT on AI Conference Peer Reviews

https://proceedings.mlr.press/v235/liang24b.html

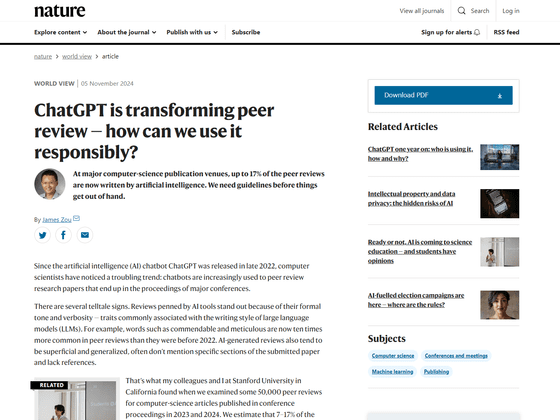

ChatGPT is transforming peer review — how can we use it responsibly?

https://www.nature.com/articles/d41586-024-03588-8

A research team led by James Zou of Stanford University examined approximately 50,000 peer review reports for computer science papers published in conference proceedings from 2023 to 2024. The analysis found that an estimated 7 to 17% of the text in those reports showed wording and stylistic patterns suggesting it had been written by AI.

According to Zou, peer review reports written with AI tools show the 'stiff tone' and 'redundancy' often seen in text generated by large language models. The team also reports that words AI tends to produce, such as 'praiseworthy' and 'thorough,' now appear about 10 times more often in actual peer review reports than they did before 2022.
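The 'about 10 times' comparison can be illustrated with a simple relative-frequency calculation. The following is a minimal sketch only, not the method used in the study: the function names and the two tiny sets of review text are hypothetical, made up purely to show how a word's rate in two corpora can be compared.

```python
import re
from collections import Counter


def rate_per_million(reviews, word):
    """Occurrences of `word` per million tokens across a list of review texts."""
    tokens = [t for text in reviews for t in re.findall(r"[a-z']+", text.lower())]
    if not tokens:
        return 0.0
    return Counter(tokens)[word] / len(tokens) * 1_000_000


def frequency_ratio(before, after, word):
    """How many times more frequent `word` is in `after` than in `before`."""
    base = rate_per_million(before, word)
    if base == 0:
        return float("inf")  # the word never appeared in the earlier corpus
    return rate_per_million(after, word) / base


# Hypothetical toy corpora (not real review data), 100 tokens each.
before = ["the method is sound and the results are clear overall"] * 9
before += ["the method is thorough and the results are clear overall"]
after = ["the method is thorough and the results are clear overall"] * 10

ratio = frequency_ratio(before, after, "thorough")
print(f"'thorough' is about {ratio:.1f}x more frequent in the later corpus")
```

Normalizing by the total number of tokens matters because the number and length of reviews differ from year to year, so raw counts alone would not be comparable.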

It has been pointed out that AI-generated peer review reports tend to be superficial and generalized, do not mention specific sections of the submitted paper, and often lack references.

Asked about the rapid increase in AI-generated peer review reports, Zou said, 'We found that peer review reports submitted close to the deadline tend to have a higher proportion of text generated by large language models. In recent years, peer reviewers at academic journals have been overwhelmed by the large number of review requests and are short on time. We will see more AI-generated peer review reports in the future.'

'AI systems such as large language models can help solve a variety of problems, including correcting language and grammar, answering simple questions, and identifying relevant information,' Zou said. 'If used irresponsibly, large language models risk undermining the integrity of the scientific process.' He also called on the scientific community to establish norms for using AI responsibly in the peer review process.

Recent large language models are not only unable to perform detailed scientific reasoning, but also generate meaningless responses called hallucinations.

So while Zou accepts the use of large language models in the peer review process, he said, 'The output of large language models should not be considered final, but rather a starting point, and the generated results should be cross-checked by human reviewers.'

Zou also criticized AI algorithms that try to 'detect whether large language models were used in writing papers or peer review reports,' saying that their 'effectiveness is limited.' In fact, Bloomberg tested 500 essays written before the release of ChatGPT by running them through the AI detectors GPTZero and Copyleaks. Three of the 500 were judged to have been generated by AI, and nine were misidentified as having been partially written using AI.
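Because all 500 essays predate ChatGPT, every flag in the Bloomberg test is a false positive, and the rates can be worked out directly. The short sketch below does that arithmetic. It assumes the three fully flagged essays and the nine partially flagged essays are separate essays, which the report summarized here does not state explicitly, and the count of 10,000 human-written texts at the end is a hypothetical figure used only for scale.

```python
from fractions import Fraction

TOTAL_ESSAYS = 500        # human-written essays tested (from the article)
FLAGGED_FULLY_AI = 3      # judged to be AI-generated
FLAGGED_PARTLY_AI = 9     # judged to be partially AI-written


def percent(count, total):
    """Share of `count` in `total` as a percentage, computed exactly."""
    return float(Fraction(count, total) * 100)


full_rate = percent(FLAGGED_FULLY_AI, TOTAL_ESSAYS)
partial_rate = percent(FLAGGED_PARTLY_AI, TOTAL_ESSAYS)
# Assumption: the two groups do not overlap, so the counts can be added.
combined_rate = percent(FLAGGED_FULLY_AI + FLAGGED_PARTLY_AI, TOTAL_ESSAYS)

print(f"flagged as fully AI:   {full_rate}%")
print(f"flagged as partly AI:  {partial_rate}%")
print(f"flagged in either way: {combined_rate}%")

# Hypothetical scale-up: expected wrongful flags among 10,000 human-written texts.
HYPOTHETICAL_TEXTS = 10_000
expected_false_flags = (
    Fraction(FLAGGED_FULLY_AI + FLAGGED_PARTLY_AI, TOTAL_ESSAYS) * HYPOTHETICAL_TEXTS
)
print(f"expected false flags per {HYPOTHETICAL_TEXTS} texts: {int(expected_false_flags)}")
```

Even a false positive rate of a few percent adds up to a large number of wrongly flagged authors once a detector is applied at the scale of a conference or a university.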

Additionally, a Stanford University study reported that AI detectors identified more than half of the essays written by students whose native language was not English as AI-generated text.

AI detectors misidentify 1-2% of texts written by human students as AI-made, a terrible accuracy for students who fail exams due to false accusations - GIGAZINE

'We cannot stop the wave of large language models in academic writing and the peer review process,' said Zou, who recommended increasing human interaction during the process by using platforms such as OpenReview, where reviewers and authors can communicate anonymously and hold multiple rounds of discussion. 'Journals and academic societies should establish clear guidelines for the use of large language models during the peer review process and put in place systems to enforce them,' he said.

'More research is also needed on how AI can responsibly assist with specific peer review tasks,' said Zou. 'Establishing community norms and resources should enable large language models to benefit reviewers and authors without compromising the integrity of the scientific process.'


in Software, Posted by log1r_ut