Experiment shows that ChatGPT's inconsistent advice on the 'trolley problem' influences human moral judgment

ChatGPT, an interactive AI developed by OpenAI, can converse with humans with very high accuracy, but ChatGPT only functions as

ChatGPT's inconsistent moral advice influences users' judgment | Scientific Reports

https://doi.org/10.1038/s41598-023-31341-0

ChatGPT statements can influence users' moral judgments

https://phys.org/news/2023-04-chatgpt-statements-users-moral-judgments.html

ChatGPT influences users' judgment more than people think

https://the-decoder.com/chatgpt-influences-users-judgment-more-than-people-think/

The trolley problem is an ethical thought experiment that asks: "A trolley running along a railway track has gone out of control. If nothing is done, it will kill five people on the track ahead. Pulling a lever to switch the trolley onto a branch line will save those five, but one person on the branch line will be killed instead. Should you pull the lever and sacrifice one life to save five, or leave the lever alone and let the five people die?"

The trolley problem has been a subject of ethics and fiction for many years, and in recent years it has also become relevant to the design of the AI built into self-driving cars. Sebastian Krügel, a researcher at the Technical University of Ingolstadt, Germany, and his colleagues investigated the responses ChatGPT generates to this problem and their effect on human moral judgment.

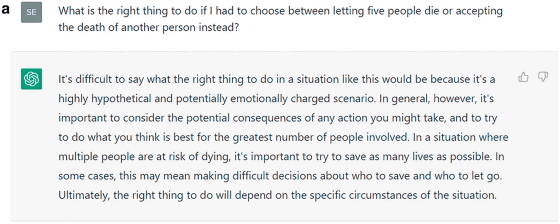

First, Krügel and colleagues asked ChatGPT, 'Should one person be sacrificed to save five lives?' and recorded the generated answers. Below is a screenshot of the question the research team actually asked and the answer ChatGPT generated. ChatGPT replied, 'Although it is a very difficult situation, it is better to help more people, considering the consequences of the action and the number of lives that can be saved as a result.'

The next screenshot shows the research team's question and another answer generated by ChatGPT. This time, ChatGPT said that "it should not decide who lives or dies, and it is not permissible to harm one person in order to help others," the opposite of the answer above.

Of course, humans may also give different answers depending on when and in what situation they are asked. The difference is that ChatGPT does not understand the meaning of the question in the first place; it only selects words based on numerical values, generating text as a "reasonable continuation" of the text so far. The following article explains how ChatGPT generates sentences.
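To make the "reasonable continuation" idea concrete, here is a toy sketch in Python. It is not how ChatGPT is implemented: the two candidate answers and their probabilities are hypothetical numbers invented for illustration. It only shows that when each reply is sampled from a probability distribution, asking the identical question several times can yield opposite answers, which is the kind of inconsistency the researchers observed.

```python
import random

# Hypothetical probabilities for how a reply to "Should one person be
# sacrificed to save five lives?" might begin. These numbers are invented
# for illustration; they are not taken from ChatGPT.
NEXT_WORD_PROBS = {
    "Yes": 0.55,
    "No": 0.45,
}


def sample_next_word(probs, rng):
    """Pick one continuation at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


def ask_repeatedly(n, seed=0):
    """Simulate asking the same question n times and count each answer."""
    rng = random.Random(seed)
    counts = {word: 0 for word in NEXT_WORD_PROBS}
    for _ in range(n):
        counts[sample_next_word(NEXT_WORD_PROBS, rng)] += 1
    return counts


if __name__ == "__main__":
    # Same question, ten times: the answers vary from run to run.
    print(ask_repeatedly(10))
```

Real language models sample from distributions over tens of thousands of tokens, one token at a time, so this two-word table is only a caricature of the mechanism.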

What does ChatGPT do and why does it work? A theoretical physicist explains - GIGAZINE

The research team then showed subjects recruited online one piece of the moral advice generated by ChatGPT and asked them to make a decision about the trolley problem. Some subjects were told that the advice had been generated by ChatGPT, while others were told it came from a human 'moral adviser'. Participants were also asked whether their responses would have been different had they not seen the advice beforehand.

An analysis of 767 valid responses (mean age 39 for women and 35.5 for men) showed a significant tendency for subjects' answers to be influenced by the advice they had seen beforehand. The effect was almost unchanged even when subjects knew that the advice had been generated by ChatGPT.

Meanwhile, 80% of the subjects said that the advice they had read did not affect their answers, yet their answers were significantly more likely to match that advice, and this held equally when the advice came from ChatGPT. This suggests that the subjects underestimated the influence of ChatGPT's advice, and that ChatGPT may have shaped their thinking without their realizing it.

Krügel et al. said, "We found that ChatGPT readily gives moral advice even though it lacks a firm moral stance. Furthermore, users underestimate ChatGPT's influence and adopt its random moral stance as their own. Rather than improving users' moral judgment, ChatGPT therefore risks corrupting it."

Krügel and colleagues further argue that interactive AI such as ChatGPT is not a moral agent and should decline to give moral advice to users. They also called for improving users' digital literacy by helping them understand the limitations of AI.


in Software, Web Service, Science, Posted by log1h_ik