Research results report that AI's creative thinking ability finally exceeds the human average

The emergence of generative AI such as ChatGPT and Midjourney

Best humans still outperform artificial intelligence in a creative divergent thinking task | Scientific Reports

https://www.nature.com/articles/s41598-023-40858-3

Are AI chatbots more creative than humans? New study reveals surprising results

https://www.news-medical.net/news/20230917/Are-AI-chatbots-more-creative-than-humans-New-study-reveals-surprising-results.aspx

New Study: AI Chatbots Surpass the Average Human in Creativity

https://scitechdaily.com/new-study-ai-chatbots-surpass-the-average-human-in-creativity/

In a study published in Scientific Reports on September 14, 2023, Mika Koivisto of the Department of Psychology at the University of Turku, Finland, and Simone Grassini of the Department of Psychosocial Sciences at the University of Bergen, Norway, ran an experiment in which they posed the same questions to 256 people and three types of AI and compared the answers.

Of the 256 subjects, 108 were female, 145 were male, and 3 were other or did not wish to reveal their gender identity. Their ages ranged from 19 to 40, with an average age of 30.4. All subjects were native English speakers recruited through the research platform 'Open Science Framework', and each was paid 2 pounds (approximately 366 yen) for approximately 13 minutes of cooperation.

The AI systems used were OpenAI's chatbots 'ChatGPT3.5' and 'ChatGPT4', and 'Copy.ai', an AI system based on ChatGPT3.5. Each of the three chatbots was tested 11 times, in separate sessions, on four prompts, for a total of 132 answers. The number of AI answers was kept to the minimum necessary because chatbots tend to repeat the same answers.
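The total of 132 AI answers follows directly from the counts given above. A minimal check, where the variable names are illustrative and not taken from the study:

```python
# Counts taken from the article; the variable names are illustrative.
chatbots = ["ChatGPT3.5", "ChatGPT4", "Copy.ai"]
sessions_per_chatbot = 11   # each chatbot was tested 11 times
prompts_per_session = 4     # four prompts per test

total_ai_answers = len(chatbots) * sessions_per_chatbot * prompts_per_session
print(total_ai_answers)  # 132
```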

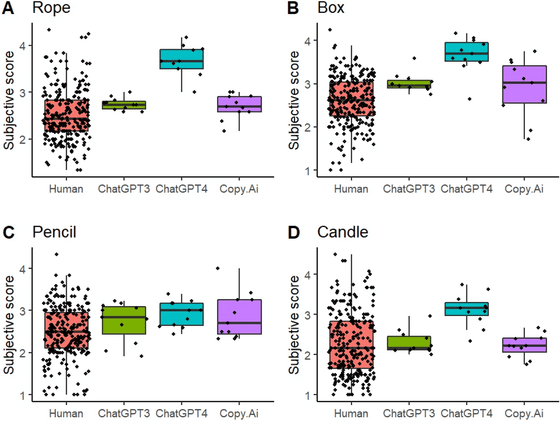

The task was to come up with as many alternative uses as possible for four everyday items: a rope, a box, a pencil, and a candle. The originality of the answers was assessed with the Alternative Uses Test (AUT), a method used to evaluate divergent thinking.

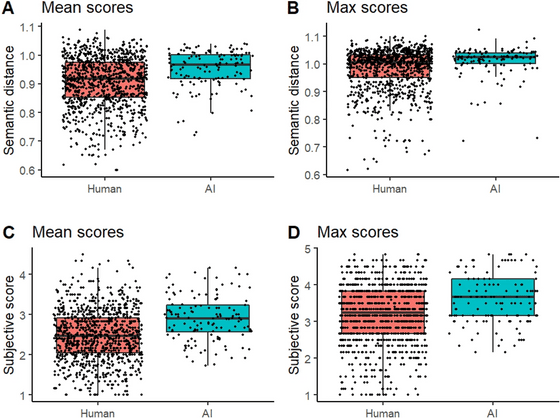

Aggregating the experimental results showed that the AI's average originality score was significantly higher than the human average on both 'semantic distance' and 'creativity', while the highest individual scores belonged to humans.
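The article does not spell out how 'semantic distance' is computed. One common approach, assumed here purely for illustration, is the cosine distance between vector representations of the object and the proposed use: the further apart the two vectors point, the more original the answer is taken to be. The sketch below uses made-up three-dimensional vectors, not real embeddings and not data from the study.

```python
import math

def cosine_distance(u, v):
    """Return 1 - cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)

# Hypothetical toy vectors, invented for this example only.
toy_vectors = {
    "candle": [0.9, 0.1, 0.0],
    "light a room": [0.8, 0.2, 0.1],    # an obvious use
    "seal a letter": [0.1, 0.9, 0.3],   # a less obvious use
}

obvious = cosine_distance(toy_vectors["candle"], toy_vectors["light a room"])
unusual = cosine_distance(toy_vectors["candle"], toy_vectors["seal a letter"])
print(f"obvious use: {obvious:.2f}, unusual use: {unusual:.2f}")
```

With these toy vectors the less obvious use lands further from the object than the obvious one, which is the property a semantic distance score is meant to capture.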

The graphs of the experimental results show the average score (A) and maximum score (B) for semantic distance, and the average score (C) and maximum score (D) for creativity. On average, AI outscored humans 0.95 to 0.91 in semantic distance and 2.91 to 2.47 in creativity. Human responses, on the other hand, varied more than the AI responses: the lowest human scores were much lower than the AI's, but the highest human score exceeded the AI's highest score in seven of the eight evaluation items.
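To put the reported averages side by side, the short sketch below computes the gap between the AI and human means for each measure. The four scores are the ones quoted above; the dictionary layout and names are illustrative. The two measures are on different scales, so the percentages are only meaningful within a measure, not across them.

```python
# Average scores quoted in the article; structure and names are illustrative.
mean_scores = {
    "semantic distance": {"ai": 0.95, "human": 0.91},
    "creativity": {"ai": 2.91, "human": 2.47},
}

gaps = {}
for measure, scores in mean_scores.items():
    # Round to two decimals to match the precision of the reported scores.
    gap = round(scores["ai"] - scores["human"], 2)
    gaps[measure] = gap
    relative = gap / scores["human"]
    print(f"{measure}: AI leads by {gap:.2f} ({relative:.1%} of the human mean)")
```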

ChatGPT4 performed especially well. Its average creativity score was somewhat weak for the pencil (C), but it achieved excellent results otherwise.

Commenting on the study, Koivisto et al. said, 'These results suggest that on the AUT, the most typical test of creative thinking, AI can match or exceed the average human's ability to generate ideas. Although chatbots generally outperformed humans, the best humans can still compete with chatbots. However, AI technology is developing rapidly, so the results may be different after six months.'
