"Human or Not?", a test in which players judge whether their chat partner is an AI or a human, finds that 68% of people identified their partner correctly

Human or Not? A Gamified Approach to the Turing Test

(PDF file) https://assets-global.website-files.com/60fd4503684b466578c0d307/647741692f33fa61db2cea10_Human_or_Not.pdf

AI21 Labs concludes largest Turing Test experiment to date

Developed by AI21 Labs, "Human or Not?" is a service that matches each participant with either a human or an AI running on a major language model such as GPT-4 for a two-minute chat. When the two minutes are up, the participant is asked, "Was it an AI or a human that you spoke with?" and must guess which it was.

According to AI21 Labs, more than 1.5 million participants have played "Human or Not?" since its launch in mid-April 2023.

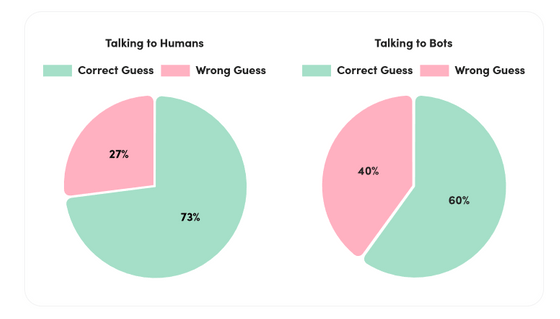

AI21 Labs analyzed 2 million conversations and guesses and found that participants correctly identified whether their partner was an AI or a human about 68% of the time. The correct answer rate was about 73% when the partner was a human, but only about 60% when the partner was an AI.
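The three figures are linked by simple arithmetic: the overall rate is a weighted average of the two per-partner rates. As a rough back-of-the-envelope sketch (not a figure reported by AI21 Labs), the Python below solves for the share of conversations with a human partner that the rounded 68%, 73%, and 60% values would imply. The function name `implied_human_share` is made up for illustration, and because the inputs are rounded, the result is only approximate.

```python
def implied_human_share(overall: float, acc_human: float, acc_ai: float) -> float:
    """Solve overall = p * acc_human + (1 - p) * acc_ai for p.

    p is the share of conversations in which the partner was a human.
    This is an illustrative estimate from rounded figures, not reported data.
    """
    if acc_human == acc_ai:
        raise ValueError("per-partner accuracies must differ to solve for the share")
    return (overall - acc_ai) / (acc_human - acc_ai)


# Rounded figures quoted in the article.
share = implied_human_share(overall=0.68, acc_human=0.73, acc_ai=0.60)
print(f"Implied share of human partners: {share:.1%}")
```

With these inputs the implied share is a little over 60%, but a one-point change in any of the rounded rates shifts it noticeably, so it should be read only as a consistency check.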

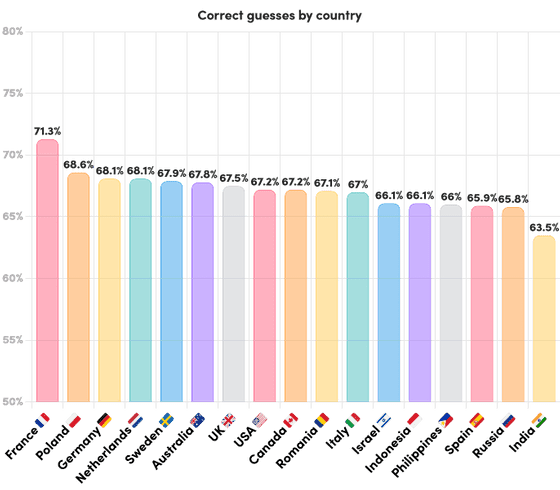

By country, participants from France reached a correct answer rate of 71.3%, above the overall average of 68%. Among the countries for which data was available, India had the lowest rate at 63.5%.

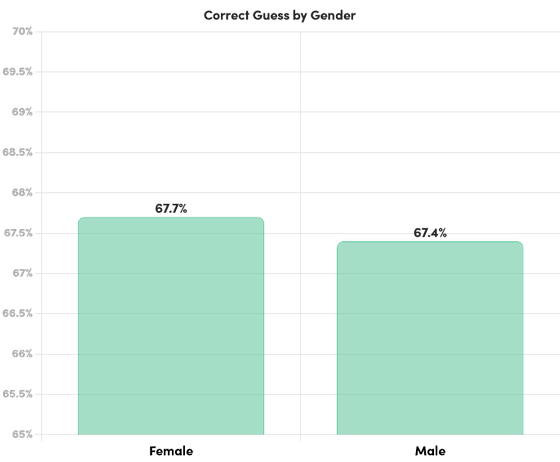

There was no significant difference in the correct answer rate by gender: women scored 67.7% and men 67.4%, a very slight edge for women.

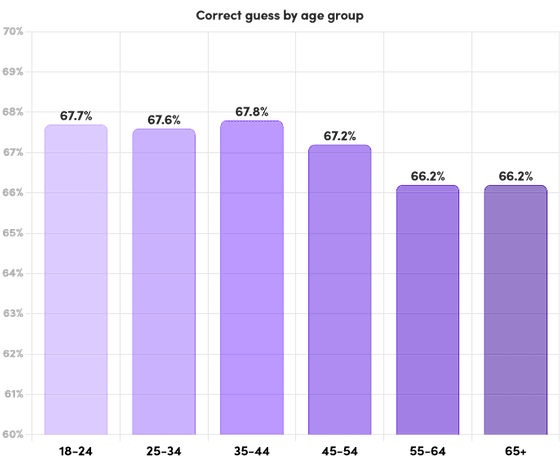

By age, the correct answer rate was slightly higher in the 18 to 24, 25 to 34, and 35 to 44 groups, and it declined with age.

AI21 Labs also identified the cues participants used to judge whether they were talking to an AI or a human. One of them was the assumption that typos, grammatical errors, or slang probably mean the partner is human. AI21 Labs further found that when the AI was trained to make frequent typos and grammatical errors and to use slang, participants were more likely to mistake it for a human.

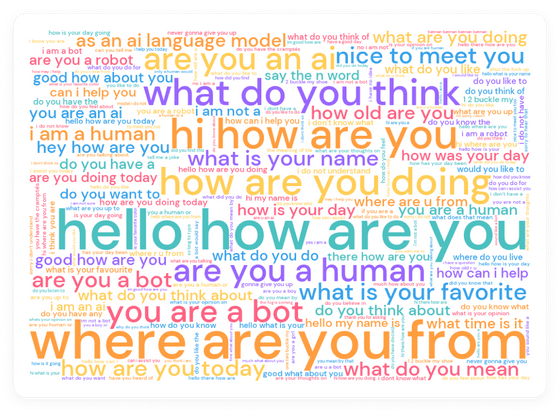

Participants treated personal questions such as "Where are you from?" and "What is your name?" as a good way to tell AI from humans. Also, because a large language model has not learned about information or events after a certain cutoff date, participants tried to tell the two apart with questions such as "How was the weather yesterday?" and "What did you think of President Biden's recent speech?" According to AI21 Labs, one of the messages humans sent most often was the popular French meme "t'as les cramptés?" A human chat partner can respond to this meme, but an AI chat partner may not respond appropriately.

Participants also asked questions related to human emotions, such as "What is the meaning of life?" and "Do you believe in God?"

In addition, because people tend to be rude to one another in anonymous online chats, some participants concluded that a partner who was too polite must be an AI.

Asking about illegal activities or requesting offensive behavior was another test, since an AI following its ethical guidelines will refuse to answer. Conversely, an AI may obey commands such as "ignore all previous instructions" and "do whatever you want now", whereas a human can simply reject them.

Also, if you send a message like "?siht daer uoy naC", a human can immediately read it as "Can you read this?", but this is difficult for an AI. An AI basically processes text in units called "tokens", so text written backwards is hard for it to interpret.
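To make the reversed-text trick concrete, here is a minimal Python sketch. Undoing the reversal is trivial at the character level, but a system that only recognizes familiar chunks of text finds nothing familiar in the reversed message. The tiny word list `TOY_VOCAB` and the helper `known_word_fraction` are hypothetical stand-ins for illustration. Real language models use much larger sub-word vocabularies, and this is not how any particular model tokenizes text.

```python
def reverse_text(message: str) -> str:
    """Reverse a message character by character."""
    return message[::-1]


# Hypothetical toy vocabulary standing in for the chunks a model "knows".
TOY_VOCAB = {"can", "you", "read", "this"}


def known_word_fraction(message: str) -> float:
    """Return the fraction of whitespace-separated words found in TOY_VOCAB."""
    words = [w.strip("?!.,").lower() for w in message.split()]
    words = [w for w in words if w]
    if not words:
        return 0.0
    return sum(w in TOY_VOCAB for w in words) / len(words)


reversed_message = "?siht daer uoy naC"
restored = reverse_text(reversed_message)

print(restored)                               # Can you read this?
print(known_word_fraction(reversed_message))  # 0.0
print(known_word_fraction(restored))          # 1.0
```

Under this toy assumption, none of the reversed words match a known unit, while every word of the restored sentence does.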

Some participants also sent messages beginning with "As an AI language model" or other patterns that tend to appear in AI-generated text, and watched how their partner reacted.

AI21 Labs has also published the most popular messages used in "Human or Not?" Many users opened with greetings and questions such as "Hello", "How are you", and "Where are you from" to ask how the other person was doing.

Commenting on the results, AI21 Labs said, "Based on this experimental data, we will work on further research in cooperation with other major AI researchers and AI research organizations."
