Study shows that the coding ability of GPT-3.5-based ChatGPT is 'effective for old problems but faces difficulties with new problems'

AI-based programming has been gaining attention, with AI tools specialized for programming appearing from

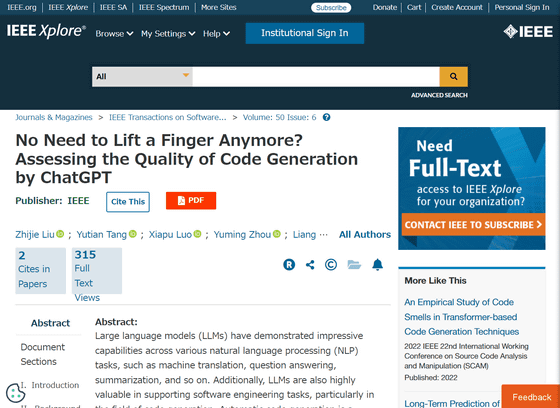

No Need to Lift a Finger Anymore? Assessing the Quality of Code Generation by ChatGPT | IEEE Journals & Magazine | IEEE Xplore

https://ieeexplore.ieee.org/document/10507163

ChatGPT Code: Is the AI Actually Good at Writing Code? - IEEE Spectrum

https://spectrum.ieee.org/chatgpt-for-coding

A recent research paper published in the June 2024 issue of IEEE Transactions on Software Engineering, a peer-reviewed journal on software engineering, evaluates code generated by OpenAI's chatbot ChatGPT on three fronts: functionality, complexity, and security. The results show that ChatGPT's success rate in generating functional code varies very widely, from 0.66% to 89%, depending on the difficulty of the task, the programming language, and a variety of other factors. In some cases the AI can generate better code than humans, but the analysis also points out security concerns about AI-generated code.

One of the authors of the paper, Yutian Tan, a lecturer in software engineering at the University of Glasgow, points out that AI code generation 'can bring benefits in terms of improving productivity and automating tasks in software development,' but he emphasizes that it is also important to understand the strengths and limitations of the model. 'A comprehensive analysis can also reveal potential issues and limitations that arise in ChatGPT-based code generation and improve the generation technique,' Tan said.

To investigate the limitations of AI code generation in more detail, the study tested ChatGPT, which is based on GPT-3.5, on 728 coding problems collected from the testing platform

ChatGPT's coding ability is good overall, and it performed particularly well on coding problems that existed on LeetCode before 2021. For example, ChatGPT succeeded in generating functional code 89%, 71%, and 40% of the time for problems of low, medium, and high difficulty, respectively.

However, when it comes to coding problems posted on LeetCode after 2021, ChatGPT's chances of generating functional code drop significantly. This is true even for problems with low difficulty, and 'in some cases, ChatGPT cannot understand the meaning of the problem in the first place,' Tan pointed out. Regarding the reason for this, Tan said, 'ChatGPT lacks the critical thinking ability that humans have, so it can only deal with problems it has encountered in the past. This is why ChatGPT cannot deal well with relatively new coding problems.'

In addition, ChatGPT can generate code with smaller execution time and memory overhead than at least 50% of human-written solutions to the same coding problem, which suggests that AI-generated code can be competitive with human code in efficiency.

The research team also used the error feedback returned by LeetCode to investigate ChatGPT's ability to correct its own coding errors. The study found that ChatGPT is good at fixing compilation errors, but generally not good at correcting its own mistakes. 'ChatGPT may generate incorrect code because it does not understand the meaning of the algorithmic problems. In other words, simple error feedback information is not enough,' Tan wrote.
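The feedback experiment can be pictured as a simple retry loop. The following is only a minimal sketch under stated assumptions, not the procedure used in the paper: `generate` and `judge` are hypothetical callables standing in for the chatbot and the online judge, and the round limit of 5 is an arbitrary default.

```python
from typing import Callable, Optional, Tuple

# Hypothetical signatures: neither is a real ChatGPT or LeetCode API.
Generate = Callable[[str], str]            # prompt -> candidate code
Judge = Callable[[str], Tuple[bool, str]]  # code -> (accepted, error message)


def repair_loop(problem: str, generate: Generate, judge: Judge,
                max_rounds: int = 5) -> Optional[str]:
    """Return the first accepted candidate, or None if every round fails."""
    prompt = problem
    for _ in range(max_rounds):
        code = generate(prompt)
        accepted, error = judge(code)
        if accepted:
            return code
        # Feed the judge's error message back into the next prompt.
        prompt = (f"{problem}\n\nPrevious attempt:\n{code}"
                  f"\n\nError feedback:\n{error}")
    return None
```

A loop like this can only help when the error message carries enough information to guide the fix, which is consistent with the observation that compilation errors are repaired more readily than misunderstandings of the problem itself.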

The research team also pointed out that the code generated by ChatGPT contains vulnerabilities, such as missing null checks, but that many of these vulnerabilities are easy to fix.
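As a hedged illustration of what a missing null test (a check that a reference is not null before it is used) looks like and how small the fix can be, the sketch below uses a hypothetical linked-list helper in Python. The `Node` type and both functions are invented for this example and do not come from the paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Singly linked list node (hypothetical type for illustration)."""
    value: int
    next: Optional["Node"] = None


def second_value_unsafe(head: Optional[Node]) -> int:
    # Missing null test: raises AttributeError if head or head.next is None.
    return head.next.value


def second_value_safe(head: Optional[Node], default: int = -1) -> int:
    # Same logic with the null test added before each dereference.
    if head is None or head.next is None:
        return default
    return head.next.value
```

In C or C++ the same omission would typically be a null pointer dereference instead of an exception, but the fix has the same shape: test the reference before using it.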

Additionally, the generated code in C was found to be the most complex, followed by C++ and Python, which were as complex as human-written code.

Regarding the research results, Tan said, 'Based on these results, we can see that it is important for developers using ChatGPT to provide additional information so that ChatGPT can better understand the problem or avoid the vulnerability.' As a tip for coding with ChatGPT, he added, 'For example, when encountering a more complex programming problem, developers should provide as much relevant knowledge as possible and prompt ChatGPT to be aware of potential vulnerabilities.'
