The key to distinguishing faces synthesized by deepfake is 'shining eyes'

Deepfake technology uses AI to synthesize fictional images and video.

EXPOSING GAN-GENERATED FACES USING INCONSISTENT CORNEAL SPECULAR HIGHLIGHTS

(PDF file) https://arxiv.org/pdf/2009.11924.pdf

New AI tool detects Deepfakes by analyzing light reflections in the eyes

https://thenextweb.com/neural/2021/03/11/ai-detects-deepfakes-analyzing-light-reflections-in-the-cornea-eyes-gans-thispersondoesnotexist/

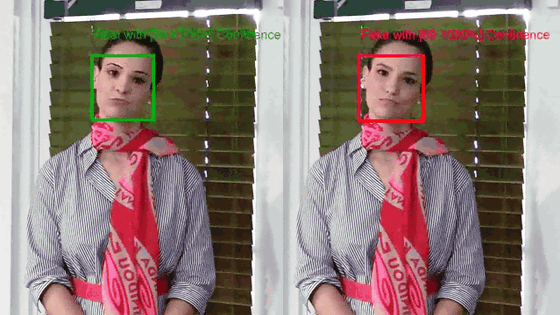

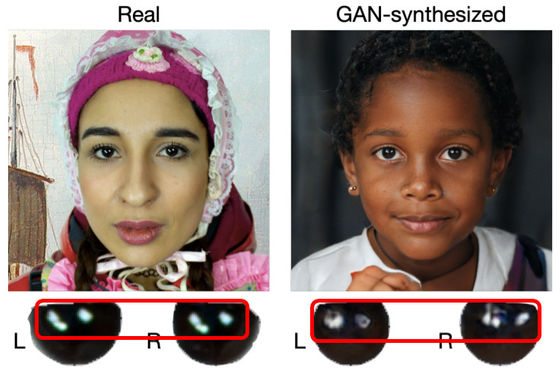

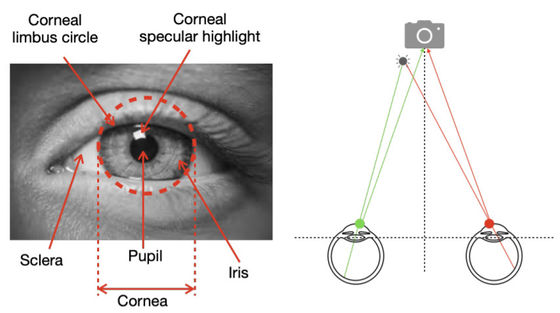

The comparison shows a real human face (left) beside a fictitious human face generated by a Generative Adversarial Network (GAN) (right). At first glance, both look like real photographs. In the enlarged view of the eyes, however, the light reflected on the two corneas of the real face has almost the same shape, whereas in the deepfake the reflections in the left and right eyes do not match.

Because both eyes are looking at the same scene, the reflections in the left and right eyes of a real face are usually nearly identical. GANs, however, often fail to reproduce this consistency. The researchers therefore explain that by locating the eyes and comparing the light reflected in each one, it is possible to distinguish a real face photo from a deepfake with high accuracy.
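As a rough illustration of the idea, the similarity of the two reflections can be scored as the intersection-over-union (IoU) of the bright highlight regions in each eye. The sketch below is not the research team's tool: the function names, the brightness threshold of 200, and the assumption that both eye crops are already aligned and the same size are all hypothetical choices made for this example.

```python
from typing import List, Optional, Set, Tuple

Gray = List[List[int]]  # grayscale eye crop, pixel values 0-255


def highlight_mask(eye: Gray, threshold: int = 200) -> List[List[bool]]:
    """Mark pixels bright enough to count as a specular highlight.

    The threshold value is an assumption chosen for illustration.
    """
    return [[px >= threshold for px in row] for row in eye]


def highlight_iou(left: Gray, right: Gray, threshold: int = 200) -> Optional[float]:
    """IoU of the highlight masks of two aligned, equally sized eye crops.

    Returns None when neither crop contains a highlight, since there is
    nothing to compare in that case.
    """
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("eye crops must have the same shape")
    left_mask = highlight_mask(left, threshold)
    right_mask = highlight_mask(right, threshold)
    inter = 0
    union = 0
    for left_row, right_row in zip(left_mask, right_mask):
        for a, b in zip(left_row, right_row):
            inter += int(a and b)
            union += int(a or b)
    return inter / union if union else None


def make_eye(bright: Set[Tuple[int, int]], size: int = 4) -> Gray:
    """Build a toy eye crop: dark everywhere except the given bright pixels."""
    return [[255 if (r, c) in bright else 30 for c in range(size)] for r in range(size)]


# Toy data: matching highlights (real-like) versus displaced highlights (fake-like).
real_left = make_eye({(1, 1), (1, 2)})
real_right = make_eye({(1, 1), (1, 2)})
shifted_right = make_eye({(1, 2), (1, 3)})
fake_right = make_eye({(2, 2), (2, 3)})

print(highlight_iou(real_left, real_right))     # identical highlights
print(highlight_iou(real_left, shifted_right))  # partially overlapping
print(highlight_iou(real_left, fake_right))     # no overlap
```

A score near 1 means the two reflections match, as expected for a real face, while a score near 0 means they disagree. Any cut-off used to label a photo as a deepfake would have to be tuned on real data.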

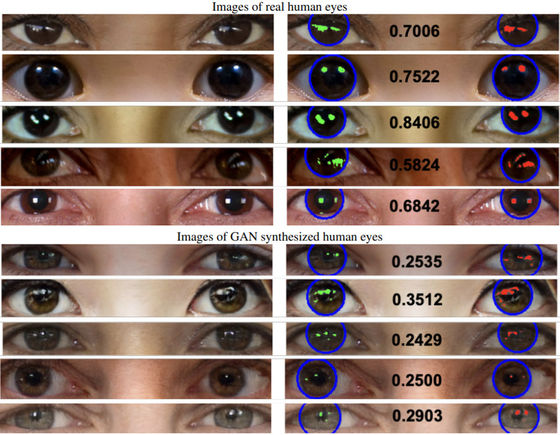

The following is the result of analyzing both real human eyes (upper half) and deepfake eyes (lower half) with a discrimination tool developed by the research team.

This detection method does not work when only one eye is visible in the photograph, and its accuracy drops significantly if the subject is not looking at the camera. In addition, manually retouching the light reflected in the eyes can make a deepfake harder to identify.

'At this stage, we can't detect very sophisticated deepfake photos, but we can identify many crude deepfake photos,' the research team said, adding that it plans to carry out further research.

in Software, Posted by log1l_ks