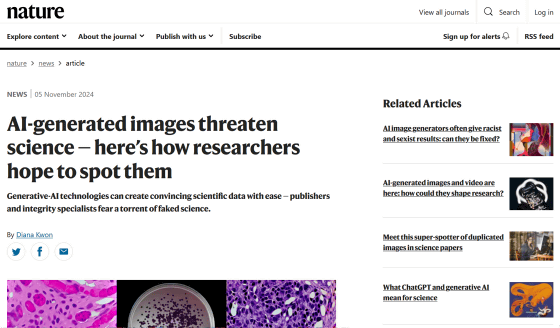

Generative AI tools make it easy to fabricate images and experimental data, threatening scientific research

Fraud in scientific papers, such as manipulated experimental results or images, has long plagued the scientific community. The rise of generative AI tools in recent years has made such misconduct even harder to detect, because convincing fake images are now easy to fabricate.

AI-generated images threaten science — here's how researchers hope to spot them

“Generative AI is evolving rapidly,” said Jana Christopher, an image-integrity analyst at a German academic publisher. “People in the fields where I work, such as image integrity and publishing ethics, are increasingly worried about the possibilities that generative AI brings.”

There are concerns that the increasing use of generative AI tools to create the text, images, and experimental data of papers could undermine trust in the scientific community as a whole. Integrity experts, academic publishers, and technology companies are already racing to develop tools to detect AI-generated elements in papers.

Researchers can benefit from having AI help write the text of their papers, and many academic journals already allow AI-generated text under certain circumstances. However, AI is unlikely to be allowed to generate images, experimental data, or other elements that are fundamental to the research. Elizabeth Bik, a research integrity specialist, said, 'AI-generated text may be acceptable in the near future, but data generation is a different story.'

Christopher and Bik suspect that papers containing images and experimental data fabricated with generative AI are already in circulation. They also suspect that 'paper mills,' which mass-produce and sell fake scientific papers, are using generative AI tools to do so. In fact, there have been reported cases in which AI-generated papers that used researchers' names without permission were published in online journals.

Horror! A paper I don't remember writing is published in a magazine I've never heard of (predatory?) under my name. The contents are so obvious they could have been written by an AI. For some reason, I'm affiliated with the University of Tokyo, and within that department, the Forestry and Forest Products Research Institute. My email address is a little different lol https://t.co/StyIPnk2rK

— Kazushi Fujii (soil researcher) (@VirtualSoil) November 9, 2024

Identifying AI-generated images is a major challenge in detecting AI-based paper fraud. In scientific papers, it is very difficult to distinguish between 'real images' and 'AI-generated images,' at least with the naked eye, and it is also difficult to obtain evidence that an image was generated by AI. 'We feel like we encounter AI-generated images every day,' Christopher said. 'But unless we can prove it, there's not much we can do.'

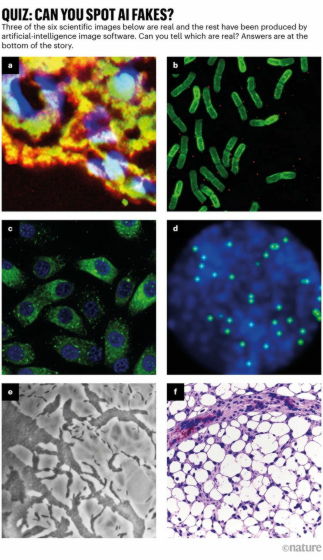

For example, the following six images are all of a type commonly seen in scientific papers, but three of them (b, c, and f) were generated by AI. At first glance, it is difficult to tell the real images from the generated ones.

Before the rise of generative AI, the fake images in research papers were created with tools like Adobe Photoshop. These manually altered images contained many telltale signs that the human eye could easily spot, such as an identical background to other images or an unusual lack of dirt or blemishes.

However, even with her experience of seeing through many manually edited images, Bik says she has difficulty accurately identifying images generated by AI. 'I've seen many papers where I think, 'This

There is no clear evidence that the number of papers using generative AI tools to fabricate images is on the rise. However, given the increase in papers whose text appears to be written using ChatGPT and other tools, and the sharp decline in human-edited images over the past few years, it is reasonable to assume that fraudsters have begun to use generative AI tools to fabricate images.

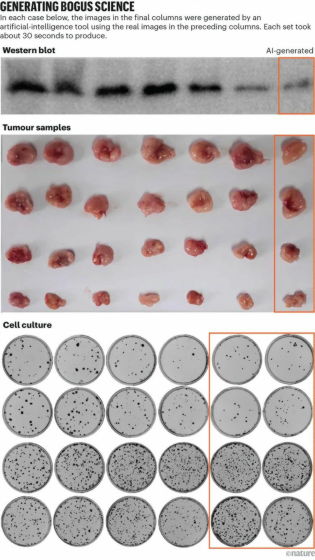

Kevin Patrick, a scientific image integrity researcher, reports that he was able to generate realistic images of tumors and cultured cells in less than a minute using Photoshop's generative AI tool, 'Generative Fill.' In the image below, the image in the orange box on the far right is an AI-generated image, and the rest of the images are real. You can see how difficult it is to distinguish between AI-generated and real images.

'If this (creating images using generative AI tools) were possible, then surely people who are being paid to generate fake data would do it,' Patrick said. 'I'm sure there's probably a lot more data that could be generated with these tools.'

Because it is difficult for the human eye to distinguish AI-generated images, efforts are underway to use AI to find them. Tools to distinguish AI-generated images, such as 'Imagetwin' and 'Proofig,' have already been developed, and several publishers and research institutions are using these tools to detect academic misconduct.

In recent years, there have been attempts to make AI-generated images and text identifiable by adding watermarks to them. However, in the field of scientific paper integrity, the opposite approach has been proposed: adding watermarks to raw data taken with a microscope to make it identifiable.

'I'm confident that technology will advance to the point where it can detect the fraud being committed today, because at some point today's methods will be considered 'relatively crude,'' Patrick said. 'This will keep fraudsters up at night. They may be able to fool the scientific research process today, but they can't keep fooling us forever.'

in Science, Posted by log1h_ik