What was revealed by comparing DeepSeek's reasoning model 'DeepSeek-R1' with OpenAI's o1 & o3?

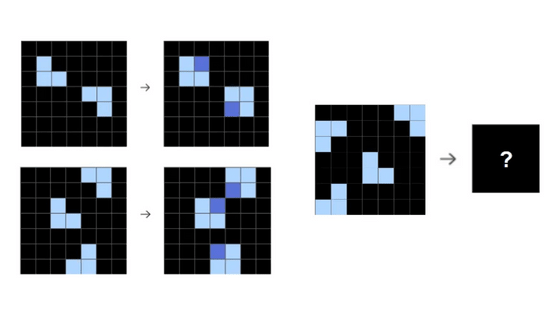

Most AI benchmarks measure how accurately an AI produces outputs (its skill), but skill is not the same as intelligence.

R1-Zero and R1 Results and Analysis

https://arcprize.org/blog/r1-zero-r1-results-analysis

The ARC Prize's goal is to define and evaluate new ideas for artificial general intelligence (AGI). To that end, the organization strives to build the strongest possible global innovation environment. According to the ARC Prize, 'At the time of writing, the prevailing view in the AI industry is that AGI does not exist and innovation remains constrained.'

Meanwhile, DeepSeek has announced the DeepSeek-R1 family, its own reasoning models, which perform on par with OpenAI's o1 reasoning model. DeepSeek-R1, DeepSeek-R1-Zero, and o1 in low-compute mode all score roughly 15-20% on ARC-AGI, while DeepSeek-R1 costs only 3.6% as much as o1 to run. Since conventional large language models (LLMs) scored at most 5% on ARC-AGI, the ARC Prize also regards DeepSeek-R1 as a highly capable model.

How did DeepSeek surpass OpenAI's O1 at 3% the cost? - GIGAZINE

However, OpenAI's reasoning model 'o3', announced in December 2024, achieved far higher ARC-AGI scores: 76% in low-compute mode and 88% in high-compute mode. The ARC Prize commented that 'o3's extremely high ARC-AGI score has received little attention or coverage in the mainstream media.'

The table below summarizes the ARC-AGI scores of R1-Zero, R1, o1 (low-, medium-, and high-compute modes), and o3 (low- and high-compute modes), along with each model's average token count and average cost.

| Model name | ARC-AGI score | Average tokens | Average cost |
|---|---|---|---|
| R1-Zero | 14% | 11K | $0.11 (approx. 17 yen) |
| R1 | 15.8% | 6K | $0.06 (approx. 9.3 yen) |
| o1 (low) | 20.5% | 7K | $0.43 (approx. 66 yen) |
| o1 (medium) | 31% | 13K | $0.79 (approx. 120 yen) |
| o1 (high) | 35% | 22K | $1.31 (approx. 200 yen) |
| o3 (low) | 75.7% | 335K | $20 (approx. 3,100 yen) |
| o3 (high) | 87.5% | 57M | $3,400 (approx. 530,000 yen) |
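To make the cost gap in the table concrete, the short Python sketch below takes the scores and average costs straight from the table and computes two derived figures: the average cost per percentage point of ARC-AGI score, and each model's cost as a multiple of R1's. Both metrics are our own illustration, not figures the ARC Prize publishes.

```python
# Figures copied from the table: model -> (ARC-AGI score in %, average cost in USD)
RESULTS = {
    "R1-Zero": (14.0, 0.11),
    "R1": (15.8, 0.06),
    "o1 (low)": (20.5, 0.43),
    "o1 (medium)": (31.0, 0.79),
    "o1 (high)": (35.0, 1.31),
    "o3 (low)": (75.7, 20.0),
    "o3 (high)": (87.5, 3400.0),
}


def cost_per_point(score: float, cost: float) -> float:
    """Average cost in USD per percentage point of ARC-AGI score (illustrative metric)."""
    return cost / score


def cost_relative_to(name: str, baseline: str = "R1") -> float:
    """How many times the baseline model's average cost a given model costs."""
    return RESULTS[name][1] / RESULTS[baseline][1]


if __name__ == "__main__":
    for name, (score, cost) in RESULTS.items():
        print(
            f"{name:12s} {score:5.1f}%  ${cost_per_point(score, cost):.4f} per point  "
            f"{cost_relative_to(name):,.1f}x R1's cost"
        )
```

By these averages, o1 (low) costs about 7.2 times as much as R1 (R1 at roughly 14% of o1's cost), and o3 (high) roughly 57,000 times as much, so the 3.6% figure cited earlier evidently rests on a different basis than this table.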

The ARC Prize speculates that OpenAI's o1 and o3 reasoning systems work as follows. It can only speculate because o1 and o3 are closed models, so the process that leads to their answers is not public.

1: Generate chains of thought (CoT) for a problem domain

2: Label the intermediate CoT steps using a combination of human experts (supervised fine-tuning, or SFT) and automated machines (e.g., reinforcement learning)

3: Train the base model using the labels from step 2

4: At test time, iteratively run inference from the process model
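To make the four speculated steps concrete, here is a deliberately tiny Python sketch of training and using a process model. Everything in it is a hypothetical stand-in: the "problem" is reaching a target number by adding 1, 2 or 3 per step, an automated verifier replaces the human and reinforcement-learning labellers of step 2, and the "process model" is just a lookup table of average step labels. It only mirrors the shape of the pipeline the ARC Prize describes; how o1 and o3 are actually built is not public.

```python
import random
from collections import defaultdict

# Hypothetical toy domain: reach `target` exactly by adding 1, 2 or 3 per reasoning step.
STEPS = (1, 2, 3)


def generate_cot(target: int, rng: random.Random) -> list[int]:
    """Step 1 (toy): sample a random chain of steps until the total reaches or passes target."""
    total, chain = 0, []
    while total < target:
        step = rng.choice(STEPS)
        chain.append(step)
        total += step
    return chain


def label_steps(target: int, chain: list[int]) -> list[tuple[int, int, bool]]:
    """Step 2 (toy): an automated verifier stands in for human and RL labellers.
    Each step becomes (remaining distance, step, good?); a step is good if it does not overshoot."""
    labels, total = [], 0
    for step in chain:
        remaining = target - total
        labels.append((remaining, step, step <= remaining))
        total += step
    return labels


def train_process_model(labels: list[tuple[int, int, bool]]) -> dict[tuple[int, int], float]:
    """Step 3 (toy): the 'process model' is the mean label for each (remaining, step) pair."""
    sums: dict[tuple[int, int], float] = defaultdict(float)
    counts: dict[tuple[int, int], int] = defaultdict(int)
    for remaining, step, good in labels:
        sums[(remaining, step)] += float(good)
        counts[(remaining, step)] += 1
    return {key: sums[key] / counts[key] for key in counts}


def infer(target: int, model: dict[tuple[int, int], float]) -> list[int]:
    """Step 4 (toy): at test time, repeatedly take the step the process model scores highest.
    Unseen pairs get a neutral 0.5; ties go to the larger step."""
    total, chain = 0, []
    while total < target:
        remaining = target - total
        step = max(STEPS, key=lambda s: (model.get((remaining, s), 0.5), s))
        chain.append(step)
        total += step
    return chain


if __name__ == "__main__":
    rng = random.Random(0)
    target = 10
    training_labels = [
        label for _ in range(500) for label in label_steps(target, generate_cot(target, rng))
    ]
    model = train_process_model(training_labels)
    print(infer(target, model))
```

The point of the toy is that the model learns from labels on individual steps rather than a single pass/fail on the final answer, and that step-level signal is what lets inference avoid overshooting at each step.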

In contrast, DeepSeek's R1 family is open source, so how its reasoning system works is public. According to the ARC Prize, the key insight from DeepSeek's reasoning system is that adaptability to novelty (and reliability) improves along three dimensions:

1: Adding human labels (SFT) to CoT process model training

2: Performing CoT search instead of linear inference (parallel CoT inference at each step)

3: Sampling entire CoTs (parallel trajectory inference)
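Dimension 3 trades extra compute for reliability. As a minimal sketch, assume each independently sampled CoT is correct with probability p, answers are simply right or wrong, and the final answer is chosen by majority vote. Majority voting is one common aggregation rule and an assumption here; the article does not say how DeepSeek or OpenAI combine trajectories. Under those assumptions, the chance that the vote is correct is a binomial tail:

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct,
    when each sample is correct with probability p (binary right/wrong answers)."""
    if n < 1 or n % 2 == 0:
        raise ValueError("use an odd number of samples so there are no ties")
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


if __name__ == "__main__":
    for n in (1, 3, 5, 11, 31):
        print(n, round(majority_vote_accuracy(0.6, n), 3))
```

With p = 0.6, going from 1 to 3 to 5 samples lifts accuracy from 0.6 to 0.648 to 0.68256. The same formula shows the limit of the approach: if p is below 0.5, more samples make the vote worse, so parallel sampling only amplifies reliability where the model is already right more often than not.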

The ARC Prize states, 'The most interesting thing DeepSeek did was to release R1 and R1-Zero separately. R1-Zero does not use the SFT described in item 1 and relies solely on reinforcement learning. R1-Zero and R1 scored a strong 14% and 15.8%, respectively, on ARC-AGI, and also posted excellent results on the benchmarks DeepSeek reported itself. For example, they scored 71% (R1-Zero) and 76% (R1) on MATH AIME 2024, a large jump from the roughly 40% achieved by DeepSeek-V3.'

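The R1 developers noted in their paper that 'DeepSeek-R1-Zero encounters challenges such as poor readability and language mixing.' In the ARC Prize's own testing, however, R1-Zero showed little to no evidence of incoherence on ARC-AGI, which is similar to the math and coding domains it was trained on with reinforcement learning.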

Based on these results, the ARC Prize concludes that they suggest three things:

1: SFT (e.g., human expert labeling) is not necessary for accurate and legible CoT reasoning in domains with strong verification.

2: R1-Zero's training process creates its own internal domain-specific language (DSL) within the token space via reinforcement learning optimization.

3: SFT is necessary to increase the domain generality of CoT reasoning.

The ARC Prize wrote, 'This makes intuitive sense, since language itself is effectively a reasoning DSL. Just like a program, the exact same "words" can be learned in one domain and applied to another. Pure reinforcement learning approaches cannot yet discover a broad shared vocabulary, but we expect this to be a focus of future research.'

The ARC Prize also states, 'DeepSeek is almost certainly aiming at OpenAI's o3. It will be important to see whether SFT is required to add CoT search and sampling, or whether an "R2-Zero" (a successor to R1-Zero) will emerge along the same logarithmic accuracy-versus-inference-compute scaling curve. Based on the R1-Zero results, we believe SFT will not be necessary for such a model to achieve a high score on ARC-AGI.'
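To see what a logarithmic accuracy-versus-inference scaling curve means in numbers, the Python sketch below fits score = a + b·log10(cost) to the three o1 rows of the table by ordinary least squares. It uses average cost per task as a stand-in for inference compute, which is an assumption; the ARC Prize's own curve is not reproduced here. The slope b is the gain in ARC-AGI percentage points for each tenfold increase in spending.

```python
import math

# o1 rows from the table: (average cost per task in USD, ARC-AGI score in %)
O1_POINTS = [(0.43, 20.5), (0.79, 31.0), (1.31, 35.0)]


def fit_log_linear(points: list[tuple[float, float]]) -> tuple[float, float]:
    """Ordinary least-squares fit of score = a + b * log10(cost).
    Returns (a, b); b is the score gain in percentage points per tenfold cost increase."""
    xs = [math.log10(cost) for cost, _ in points]
    ys = [score for _, score in points]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = y_mean - b * x_mean
    return a, b


if __name__ == "__main__":
    a, b = fit_log_linear(O1_POINTS)
    print(f"score ~ {a:.1f} + {b:.1f} * log10(cost)")
```

On these three points the fit gives a slope of roughly 30 points per tenfold cost increase. Three points are far too few to pin down a curve, and o3 is a different system, so extending this line to o3's scores would not be justified.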

Based on these insights, the ARC Prize states, 'Economically, two major shifts are under way in AI. One is that "you can spend more money to get higher accuracy and reliability," and the other is that "spending is shifting from training to inference." Both will create enormous demand for inference, and neither will reduce demand for compute; in fact, demand for compute will increase. AI reasoning systems promise far greater benefits than higher benchmark accuracy. The biggest obstacle to wider use of AI automation is reliability. I've spoken with hundreds of Zapier users who want to introduce AI agents into their businesses, and the feedback is consistent: "I don't trust them yet because they're not reliable."'

Praising DeepSeek, the ARC Prize added, 'Because R1 is open and reproducible, more people and teams will push CoT and search to their limits. This will show us sooner where the frontier actually lies and spark a wave of innovation that makes reaching AGI quickly more likely. Several people have already told me they plan to use R1-style systems for ARC Prize 2025, and I'm excited to see the results. R1 being open is great for the world. DeepSeek has advanced the frontier of science.'
