Hugging Face launches the 'Open-R1' project to fill in the undisclosed parts of 'DeepSeek-R1' so that anyone can develop similar low-cost, high-performance AI models

Open-R1: a fully open reproduction of DeepSeek-R1

https://huggingface.co/blog/open-r1

DeepSeek-R1 is an AI model developed by DeepSeek, a China-based AI company, and it has scored higher than OpenAI o1 on multiple benchmarks of mathematical and coding performance. Its development cost is reported to be $5.6 million (approximately 870 million yen), which is very low for a high-performance AI model. DeepSeek-R1 is also notable for its low operating costs. This raised concerns about future demand for the high-performance chips needed for AI training and inference, and the impact was felt across the industry, including a broad decline in tech stocks such as NVIDIA.

China's AI 'DeepSeek' shock causes panic selling of tech stocks, NVIDIA's market capitalization falls by 91 trillion yen, more than double the previous record drop - GIGAZINE

DeepSeek has made DeepSeek-R1 and its derivative models available for free, so anyone can run DeepSeek-R1 and develop fine-tuned models based on it. For example, CyberAgent, a major Japanese IT company, has released a Japanese-language model based on a lightweight version of DeepSeek-R1.

CyberAgent releases a model that has been further trained in Japanese based on a derivative model of 'DeepSeek-R1' - GIGAZINE

However, DeepSeek has not disclosed everything about DeepSeek-R1: the dataset and code used to train the model have not been released. As a result, AI developers can create models fine-tuned from DeepSeek-R1, but it is difficult to build a model from scratch using the same techniques. To solve this problem, Hugging Face has launched the 'Open-R1' project to analyze DeepSeek-R1 and reproduce its dataset and training code.

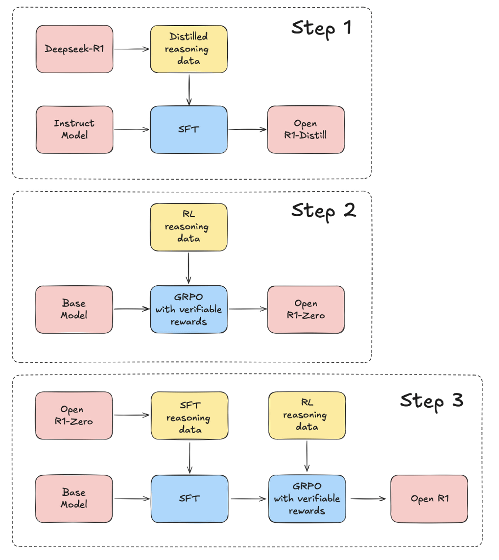

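According to DeepSeek's technical report, the DeepSeek-R1 family relies heavily on reinforcement learning (RL): the precursor model DeepSeek-R1-Zero was trained with RL alone, without supervised fine-tuning (SFT), while DeepSeek-R1 itself adds a small 'cold start' SFT stage before RL. The RL stage uses a technique called 'group relative policy optimization (GRPO)', which reduces training costs. Open-R1 aims to reproduce the techniques used in DeepSeek-R1 and make them open source, using DeepSeek-R1's technical report as a reference.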
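To give a rough idea of what 'group relative' means in GRPO, the sketch below follows the description in DeepSeek's published papers: several responses are sampled for the same prompt, each is scored, and every score is normalized against the mean and standard deviation of its own group, so no separate value (critic) model is needed as a baseline. The function name, the sample rewards, and the choice of population standard deviation are assumptions made for illustration; this is not code from DeepSeek or Open-R1.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the group it was sampled with.

    `rewards` holds one scalar score per response to the same prompt.
    `eps` (an illustrative choice) avoids division by zero when all
    responses in the group receive the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; an assumption of this sketch
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four sampled answers: 1.0 = correct, 0.0 = wrong.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Responses that score above the group average get a positive advantage and are reinforced, while those below the average get a negative one. The cost saving comes from the fact that the baseline is computed from the samples themselves rather than from an additional learned model.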

At the time of writing, Open-R1 is still in its early stages. If the project succeeds, it is expected to enable low-cost, high-performance AI models optimized for a wide range of fields, including medicine and science.

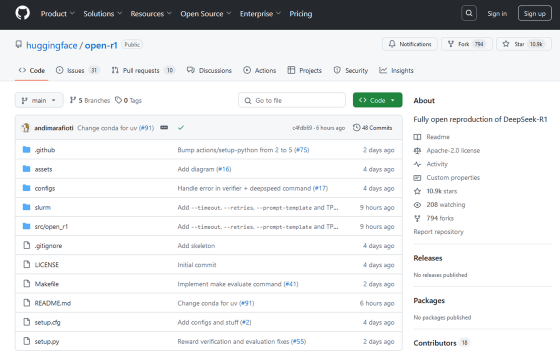

The results of Open-R1 will be published in the following GitHub repository.

GitHub - huggingface/open-r1: Fully open reproduction of DeepSeek-R1

https://github.com/huggingface/open-r1

in Software, Posted by log1o_hf