How did DeepSeek surpass OpenAI's o1 at 3% of the cost?

The open source model '

DeepSeek-R1's bold bet on reinforcement learning: How it outpaced OpenAI at 3% of the cost | VentureBeat

https://venturebeat.com/ai/deepseek-r1s-bold-bet-on-reinforcement-learning-how-it-outpaced-openai-at-3-of-the-cost/

One notable feature of R1 is its low operating cost. OpenAI's o1 costs $15 (about 2,330 yen) per million input tokens and $60 (about 9,330 yen) per million output tokens, while DeepSeek Reasoner, a model based on R1,

VentureBeat says one of the breakthroughs R1 has achieved is its 'move toward pure reinforcement learning.'

Current large language models (LLMs) are typically trained using a technique called supervised fine-tuning (SFT), in which the model is trained on a carefully selected dataset. SFT is commonly used to teach step-by-step reasoning known as chain-of-thought (CoT), and it has been considered essential to improving a model's reasoning capabilities.

However, DeepSeek trained R1 almost exclusively with reinforcement learning, making little use of SFT. This approach gave the model the unexpected ability to allocate additional processing time to complex problems and to prioritize tasks based on difficulty.

In a technical report, DeepSeek researchers call this phenomenon an 'Aha moment.'

Although SFT was used to a limited extent in the final stages of development, VentureBeat praised DeepSeek for achieving such a dramatic improvement in performance almost entirely through reinforcement learning.

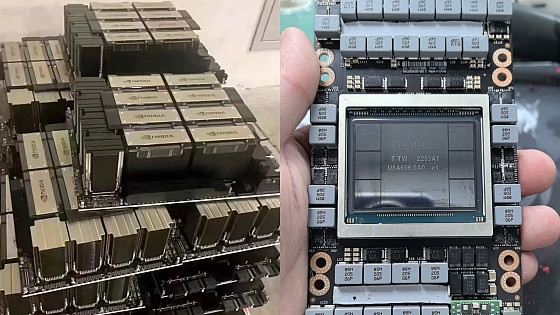

Another noteworthy point is that R1 was developed with relatively little computing power. DeepSeek was founded in 2023 as a spin-off of High-Flyer Quant, a Chinese hedge fund. High-Flyer Quant secured 10,000 NVIDIA GPUs before the US export restrictions were imposed, and expanded its stock to 50,000 GPUs through alternative routes after the restrictions were tightened.

The GPUs procured in this way are said to be NVIDIA H800s, whose chip-to-chip transfer speeds are reduced to comply with the regulations, but some reports have said that 'China is circumventing the regulations to bring advanced chips into the country.'

Even so, 50,000 is a very small number compared with the more than 500,000 GPUs used by major AI labs such as OpenAI, Google, and Anthropic to run their AI.

VentureBeat said, 'DeepSeek's ability to produce competitive results with limited resources highlights the extent to which ingenuity and resourcefulness can challenge the high-cost paradigm of training cutting-edge LLMs.'

Another reason R1 is attracting attention is that it is an open-weight model whose weights are publicly available. Even Meta's Llama, the leading open source model, does not display its CoT process unless actively prompted, whereas R1 transparently displays the CoT behind its answers by default.

However, because the research results are published in technical reports, competing models can quickly adopt them and catch up, so R1's innovations alone are not enough to establish an overwhelming lead. According to VentureBeat, open source model developers such as Meta and Mistral AI are expected to catch up within a few months.

R1 has also been criticized for ethical issues, such as refusing to answer questions about the Chinese government's brutal crackdown on the Tiananmen Square protests.

Many developers believe these biases are edge cases that can be mitigated with tweaks. Meta's Llama, for example, remains a popular open model despite its closed dataset, accusations of hidden bias, and copyright infringement lawsuits.

Meta CEO Mark Zuckerberg is being pursued in a lawsuit for allowing the AI 'Llama' development team to use copyrighted works without permission - GIGAZINE

VentureBeat contrasts the huge AI investment plan 'Stargate Project,' led by OpenAI, Oracle, SoftBank, and others, with DeepSeek, which has realized low-cost AI, saying, 'The Stargate Project is based on the belief that achieving artificial general intelligence (AGI) requires unprecedented computational resources, but DeepSeek's demonstration of a high-performance model at a fraction of the cost is a test of the sustainability of this approach and calls into question OpenAI's ability to produce results commensurate with its huge investment.'


in Software, Posted by log1l_ks