A method to build an AI model equivalent to OpenAI o1-preview with just 26 minutes of learning and a computational cost of less than 1,000 yen will be announced

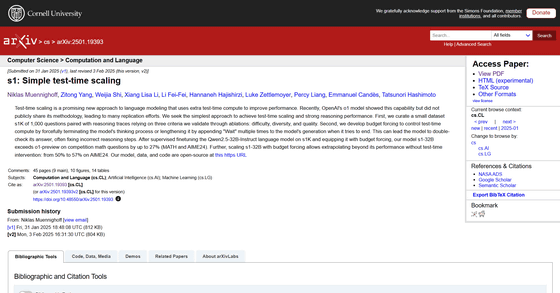

On January 31, 2025, a research team led by Niklas Muennihoff , a researcher at Stanford University studying large-scale language models, published a paper on arXiv, a repository of unpeer-reviewed research papers, that demonstrated a method for reproducing scaling and performance almost identical to OpenAI o1-preview using a small number of data samples and simple methods. Tim Kellogg, an AI architect and software engineer, provided a commentary on the paper.

[2501.19393] s1: Simple test-time scaling

https://arxiv.org/abs/2501.19393

S1: The $6 R1 Competitor? - Tim Kellogg

https://timkellogg.me/blog/2025/02/03/s1

The paper published by Muennihoff et al. summarizes 'Simple Test-Time Scaling,' which improves the inference performance of language models by increasing the computational resources during testing.

Traditionally, improvements in the performance of language models have been achieved through large-scale pre-training or by expanding datasets. However, recent research has shown that performance can be improved without additional training by simply increasing the model's computational resources during testing. OpenAI's o1 model is thought to employ this technique, but the specific methodology has not been disclosed.

Therefore, Munihoff et al. proposed a training method using 1,000 carefully selected datasets (s1K) from tens of thousands of datasets based on quality, difficulty, and diversity. In fact, Munihoff et al. reported that by using this s1K, they were able to create a model called s1-32B, which has performance almost equivalent to OpenAI's o1-preview, by performing supervised fine tuning (SFT) on the large-scale language model Qwen2.5 developed by Alibaba.

According to Munyhoff et al., the training cost of s1-32B is very low, and training was completed in just 26 minutes using 16 NVIDIA H100 GPUs , with an estimated cost of just $6 (approximately 910 yen). This shows that, unlike conventional approaches using large-scale computational resources, it is possible to build high-performance AI models even in inexpensive environments.

Kellogg also focuses on the 'Wait trick,' a simple technique for adjusting inference time.

The Wait Trick is a technique that, when a model determines that it has finished thinking, it would normally terminate, but by forcing the insertion of a 'Wait' token, it encourages reconsideration and improves accuracy. This method is extremely simple yet effective, and has been praised for its ability to improve inference performance at a lower cost than conventional methods.

Kellogg considers the impact that approaches like s1 will have on future AI research in terms of speeding up AI development and reducing costs.

Traditionally, AI development has required large amounts of funding and large-scale data centers, but the results of S1 overturn this assumption and pave the way for advanced research with fewer resources. As a result, Kellogg pointed out that this could further open up the door to AI development, making it easier for many researchers to get involved.

Kellogg also mentioned that OpenAI has criticized DeepSeek for developing models using o1 distillation , and argued that it will be difficult to detect and regulate such methods in the future. Now that it has been shown that a high-performance model can be built using just 1,000 pieces of data, Kellogg said that even an individual could potentially achieve the same feat, potentially changing the very nature of AI development.

Kellogg said that it's interesting that s1 doesn't completely replicate OpenAI o1 or DeepSeek-R1, but rather achieves similar results using different methods. He concluded that there are multiple approaches to the evolution of AI, and that further development of each method will lead to even greater technological innovation by 2025.

Related Posts: