Open-source replication of the ChatGPT training process runs with as little as 1.6GB of GPU memory and trains up to 7.73x faster

OpenAI's interactive AI '

Open-source replication of ChatGPT implementation process! You can try it out with 1.6GB GPU memory but with 7.73 times faster training speed, all available through a single line of code. #ChatGPT #opensource

— HPC-AI Tech (@HPCAITech) February 14, 2023

Blog: https://t.co/BccMx8p9PJ

Code: https://t.co/IFAwE9zhbC pic.twitter.com/KKHWL4Tchd

Open source solution replicates ChatGPT training process! Ready to go with only 1.6GB GPU memory and gives you 7.73 times faster training!

https://www.hpc-ai.tech/blog/colossal-ai-chatgpt

GitHub - hpcaitech/ColossalAI: Making big AI models cheaper, easier, and more scalable

https://github.com/hpcaitech/ColossalAI

ChatGPT is said to be the fastest-growing service in history. Microsoft founder Bill Gates has praised it as "as important as the invention of the Internet," and Microsoft CEO and chairman Satya Nadella has drawn attention by stating frankly that "AI will fundamentally change every software category." In February 2023, Microsoft announced new versions of its search engine Bing and its browser Edge that integrate an upgraded version of the AI behind ChatGPT, and Nadella is reported to have said that Microsoft can break Google's search with AI.

Microsoft announces new search engine Bing and browser Edge integrating ChatGPT's upgraded AI - GIGAZINE

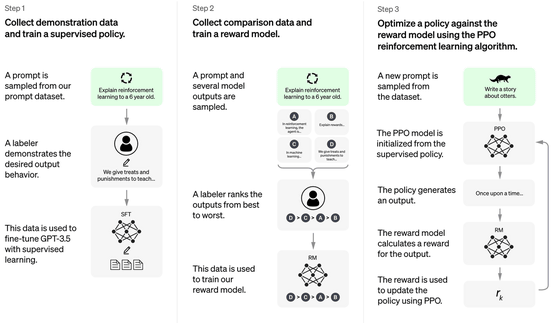

Google and Chinese companies are working on conversational AI to compete with ChatGPT. However, neither pre-trained weights nor a complete, low-cost training process for ChatGPT has been released as open source, and efficiently replicating the entire ChatGPT process on a model with 100 billion parameters is considered difficult. According to HPC-AI Tech, which aims to accelerate deep learning and reduce the cost of working with large-scale neural networks, the notable feature of ChatGPT is that it introduces reinforcement learning from human feedback (RLHF) into the training process: at the end of training, a further round of training steers the language model toward output that more closely matches human preferences.

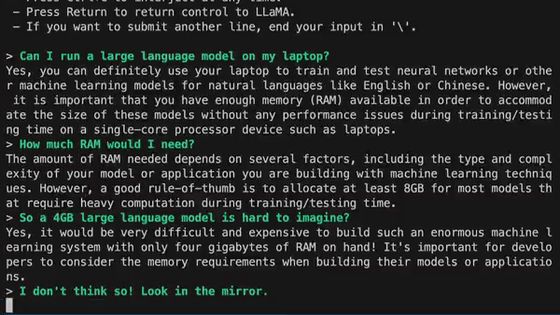

Since ChatGPT introduces reinforcement learning, it runs multiple inference models during training. In the InstructGPT paper on which ChatGPT is based, both

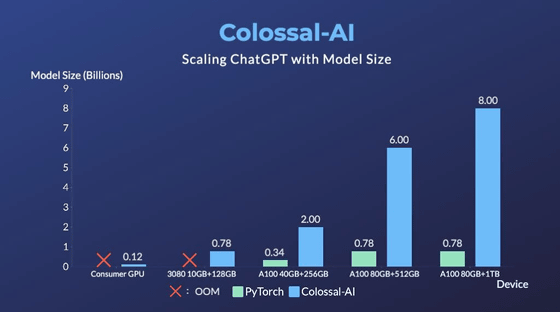

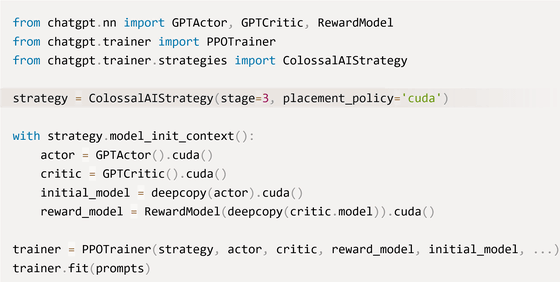

Colossal-AI, developed by HPC-AI Tech, is reported to replicate the ChatGPT training process as open source, including its three complex stages: Stage 1 pre-training, Stage 2 reward model training, and Stage 3 reinforcement learning training. Compared to PyTorch, an open-source machine learning library for Python, Colossal-AI is reported to be up to 7.73x faster for single-server training and 1.42x faster for single-GPU inference, and it continues to scale to massively parallel processing, which is said to significantly reduce the cost of replicating ChatGPT.
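To make Stage 2 more concrete: the InstructGPT paper describes training the reward model on pairs of responses ranked by humans, using a pairwise ranking loss that pushes the score of the preferred response above the score of the rejected one. The following is a minimal sketch of that loss in plain Python, assuming the reward model has already produced a scalar score for each response. The function name is illustrative, and this is not Colossal-AI's actual code.

```python
import math


def pairwise_ranking_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Return -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the human-preferred response scores higher
    than the rejected one, and large when the order is reversed.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)); branch on the sign of the
    # margin so that exp() never receives a large positive argument.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    # Hypothetical scores: the preferred answer is rated higher, so the loss is low.
    print(pairwise_ranking_loss(2.0, 0.0))
    # Reversed order: the reward model disagrees with the human ranking, so the loss is high.
    print(pairwise_ranking_loss(0.0, 2.0))
```

In an actual training run this loss would be averaged over many ranked pairs and minimized with gradient descent over the reward model's parameters.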

Colossal-AI also provides a ChatGPT training process that can be tried out on a single GPU, minimizing training costs. According to HPC-AI Tech, PyTorch can only launch models of up to 780 million parameters with 80 gigabytes of GPU memory, while Colossal-AI is said to be able to train a 120-million-parameter model with as little as 1.62 gigabytes of GPU memory on a single consumer-level GPU. In addition, when fine-tuning from a pre-trained large-scale model, it is said to reduce the cost of fine-tuning tasks and increase the model capacity that can be fine-tuned on a single GPU by up to 3.7x compared to PyTorch.
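As a rough illustration of what these memory figures imply, the short sketch below converts each quoted pair of numbers into GPU memory per model parameter. It assumes decimal gigabytes (10^9 bytes) and uses only the figures quoted above; the two cases describe different setups, so this is a back-of-the-envelope comparison rather than a like-for-like benchmark.

```python
GB = 10**9  # assumption: decimal gigabytes


def bytes_per_parameter(memory_gb: float, parameters: float) -> float:
    """GPU memory in bytes divided by the number of model parameters."""
    return memory_gb * GB / parameters


# Figures quoted in the article.
pytorch_bpp = bytes_per_parameter(80, 780e6)       # 780M parameters in 80 GB
colossal_bpp = bytes_per_parameter(1.62, 120e6)    # 120M parameters in 1.62 GB

if __name__ == "__main__":
    print(f"PyTorch:     {pytorch_bpp:.1f} bytes per parameter")
    print(f"Colossal-AI: {colossal_bpp:.1f} bytes per parameter")
```

On these figures, the PyTorch case works out to a little over 100 bytes per parameter and the Colossal-AI case to roughly 13.5 bytes per parameter.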

HPC-AI Tech has published ready-to-use ChatGPT training code for Colossal-AI, open-sourcing the complete algorithm and software design needed to replicate the ChatGPT implementation process. The company says it wants to bring large pre-trained models, previously held only by a few large technology companies with large-scale computing power, to the open source community, build an ecosystem based on Colossal-AI, and work toward the era of big AI models, starting with the replication of ChatGPT.


in AI, Web Service, Posted by log1e_dh