Microsoft releases 'DeepSpeed-Chat', which can train large language models like those behind ChatGPT 15 times faster and at lower cost than conventional systems

It is reported that chat AI such as “

DeepSpeed/blogs/deepspeed-chat/japanese at master microsoft/DeepSpeed GitHub

https://github.com/microsoft/DeepSpeed/tree/master/blogs/deepspeed-chat/japanese

Until now, there has reportedly been no pipeline that could run RLHF, which is necessary for training a model like ChatGPT, easily and efficiently. Training such a model also requires multiple expensive GPUs, which makes this type of AI model difficult for ordinary developers to build. In addition, even with GPUs available, conventional software could reportedly extract less than 5% of the hardware's performance, so models with hundreds of billions of parameters could not be trained easily, quickly, and at low cost.

Microsoft has therefore announced 'DeepSpeed-Chat', a framework that aims to let developers build chat AI more affordably.

DeepSpeed-Chat is now available for training ChatGPT-style models! A single GPU can train models with over 10 billion parameters, and multiple GPUs can train models with over 100 billion parameters. Training 15 times faster than SoTA can be run with a single script, easily and at low cost! A Japanese article has also been published!

https://t.co/3OtxlLCA5t https://t.co/AYlITILqIT pic.twitter.com/UwaEMomaMm — Microsoft DeepSpeed (@MSFTDeepSpeedJP) April 12, 2023

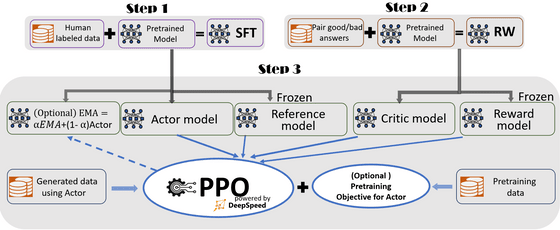

DeepSpeed-Chat provides a script that produces your own ChatGPT-like model by running the three steps used in InstructGPT, the basis of ChatGPT: 'supervised fine-tuning', 'reward model fine-tuning', and 'RLHF training'. It also provides an inference API for testing the model in conversational form after training.

Furthermore, the 'DeepSpeed-RLHF pipeline' built into DeepSpeed-Chat carries out 'supervised fine-tuning', 'reward model fine-tuning', and 'RLHF training', and it offers 'data abstraction' and 'blending' features to help researchers and developers train their own RLHF models from multiple data resources. 'Data abstraction' creates an abstracted dataset that unifies the formats of different datasets, while the 'blending' feature merges multiple datasets appropriately and then splits the result across the three training steps, beginning with 'supervised fine-tuning'.
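The idea behind data abstraction and blending can be illustrated with a small sketch. This is not DeepSpeed-Chat's actual code: the dataset contents, the field names, the adapter functions, and the 20/40/40 split are all hypothetical and chosen only to show the pattern of unifying formats first and then dividing the blended data among the three training steps.

```python
import random
from typing import Callable

# Two hypothetical raw datasets with different field names (illustrative only).
raw_qa = [{"question": f"q{i}", "good": f"a{i}", "bad": f"b{i}"} for i in range(6)]
raw_chat = [{"input": f"u{i}", "preferred": f"p{i}", "other": f"o{i}"} for i in range(4)]


def from_qa(row: dict) -> dict:
    """Adapter: map the first dataset onto one shared schema."""
    return {"prompt": row["question"], "chosen": row["good"], "rejected": row["bad"]}


def from_chat(row: dict) -> dict:
    """Adapter: map the second dataset onto the same shared schema."""
    return {"prompt": row["input"], "chosen": row["preferred"], "rejected": row["other"]}


def abstract(rows: list, adapter: Callable[[dict], dict]) -> list:
    """'Data abstraction': convert every row to the unified format."""
    return [adapter(row) for row in rows]


def blend_and_split(datasets: list, fractions: tuple, seed: int = 0) -> dict:
    """'Blending': merge the unified datasets, shuffle, and split into three parts."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    blended = [sample for dataset in datasets for sample in dataset]
    random.Random(seed).shuffle(blended)  # seeded so the split is reproducible
    cut1 = round(len(blended) * fractions[0])
    cut2 = cut1 + round(len(blended) * fractions[1])
    return {
        "supervised_fine_tuning": blended[:cut1],
        "reward_model_fine_tuning": blended[cut1:cut2],
        "rlhf_training": blended[cut2:],
    }


unified = [abstract(raw_qa, from_qa), abstract(raw_chat, from_chat)]
splits = blend_and_split(unified, fractions=(0.2, 0.4, 0.4))
for step, samples in splits.items():
    print(step, len(samples))
```

Keeping the adapters separate from the blending step means a new dataset only needs one small conversion function, and the split logic never has to know where a sample came from.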

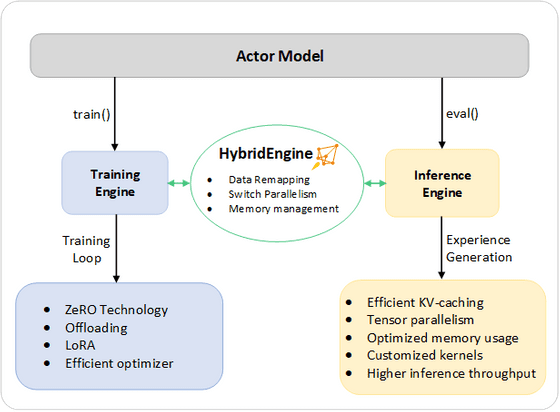

In addition, in order to execute learning by the 'DeepSpeed-RLHF pipeline' on a wide range of hardware at high speed and at low cost, the 'DeepSpeed hybrid engine' that fuses all systems for inference and learning such as

With DeepSpeed-Chat and its DeepSpeed hybrid engine, training on 64 NVIDIA A100 data center GPUs on Microsoft Azure is said to complete for the 'OPT-13B' model in about 7.5 hours, at a cost of $1,920 (about 250,000 yen). For the 'BLOOM' model, training is said to complete in about 20 hours at a cost of $5,120 (about 680,000 yen). These figures show that training can be done much faster and at a lower cost than with existing RLHF systems.
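As a quick sanity check on these figures, the implied price per GPU-hour can be worked out from the quoted totals. The sketch below assumes that both runs used the same 64 GPUs (the article states the GPU count only once) and that the quoted cost covers GPU time alone; the function name is made up for illustration.

```python
def cost_per_gpu_hour(total_cost_usd: float, hours: float, num_gpus: int) -> float:
    """Implied hourly price of one GPU, given total cost, wall-clock hours, and GPU count."""
    return total_cost_usd / (hours * num_gpus)


NUM_GPUS = 64  # assumption: the same 64-GPU setup applies to both quoted runs

# (total cost in USD, training time in hours) as quoted in the article
runs = {
    "OPT-13B": (1920, 7.5),
    "BLOOM": (5120, 20.0),
}

rates = {name: cost_per_gpu_hour(cost, hours, NUM_GPUS) for name, (cost, hours) in runs.items()}
for name, rate in rates.items():
    print(f"{name}: ${rate:.2f} per GPU-hour")
```

Both runs work out to $4.00 per GPU-hour, so under these assumptions the two quoted costs are consistent with a single hourly rate, and the difference in total cost comes entirely from the longer training time.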

DeepSpeed-Chat can also handle training and inference for large-scale models with billions to trillions of parameters, and it is said to be able to do so even in environments with limited GPU resources.

A comment on Hacker News states, 'DeepSpeed-Chat doesn't make it easy to reproduce GPT-4, but it can definitely overcome some major hurdles to reproduction.' It is also stated there that Microsoft has invested $10 billion (about 1.3 trillion yen) free of charge in DeepSpeed, which develops DeepSpeed-Chat, to support research that brings ChatGPT-like functions into Microsoft products.

The DeepSpeed-Chat source code and related materials are published on GitHub.

GitHub - microsoft/DeepSpeed: DeepSpeed is a deep learning optimization library that makes distributed training and inference easy, efficient, and effective.

https://github.com/microsoft/DeepSpeed/
