OpenAI releases 'Triton', an open-source programming language for neural networks

Triton is an open-source programming language for neural networks that aims to make it possible to write fast code productively.

Introducing Triton: Open-Source GPU Programming for Neural Networks

https://www.openai.com/blog/triton/

OpenAI debuts Python-based Triton for GPU-powered machine learning | InfoWorld

https://www.infoworld.com/article/3627243/openai-debuts-python-based-triton-for-gpu-powered-machine-learning.html

OpenAI proposes open-source Triton language as an alternative to Nvidia's CUDA | ZDNet

https://www.zdnet.com/article/openai-proposes-triton-language-as-an-alternative-to-nvidias-cuda/

OpenAI, a non-profit organization that researches artificial intelligence (AI), has released version 1.0 of Triton, its Python-based open-source programming language for neural networks.

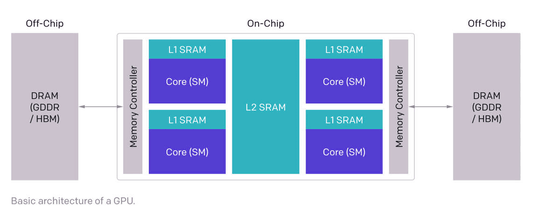

OpenAI's reason for developing Triton as an alternative to CUDA is simple: programming NVIDIA's graphics processing units (GPUs) through CUDA is too difficult. Specifically, writing native kernels and functions for GPUs requires moving data and instructions across the memory hierarchy of a multi-core GPU, which complicates programming.

According to OpenAI, Triton lets researchers who have never worked with CUDA write highly efficient GPU code, on par with what experienced GPU programmers produce. For example, less than 25 lines of code

When the technology news site ZDNet contacted OpenAI, Philippe Tillet said, 'Our goal is to create a viable alternative to CUDA for deep learning.' Tillet added that Triton is aimed at 'machine learning engineers and researchers who have good software engineering skills but aren't used to GPU programming.'

OpenAI researchers have already used Triton to write kernels that are up to twice as efficient as equivalent PyTorch implementations.

With Triton, developers write code in Python using a dedicated library, and that code is JIT-compiled to run on the GPU. This allows integration with the rest of the Python ecosystem for building machine learning solutions.
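To make the Python-plus-JIT workflow concrete, here is a minimal sketch of an element-wise vector addition kernel. It follows the style of the current Triton tutorials, so it may differ from the 1.0 release described in this article. The names `add_kernel`, `add`, and the block size of 1024 are illustrative choices, and the code assumes PyTorch, Triton, and a CUDA-capable GPU are available.

```python
import torch
import triton
import triton.language as tl


@triton.jit  # compiled for the GPU the first time the kernel is launched
def add_kernel(x_ptr, y_ptr, out_ptr, n_elements, BLOCK_SIZE: tl.constexpr):
    # Each program instance handles one contiguous block of elements.
    pid = tl.program_id(axis=0)
    offsets = pid * BLOCK_SIZE + tl.arange(0, BLOCK_SIZE)
    # Mask out offsets past the end of the vectors.
    mask = offsets < n_elements
    x = tl.load(x_ptr + offsets, mask=mask)
    y = tl.load(y_ptr + offsets, mask=mask)
    tl.store(out_ptr + offsets, x + y, mask=mask)


def add(x: torch.Tensor, y: torch.Tensor) -> torch.Tensor:
    # Hypothetical helper: x and y are assumed to be CUDA tensors of equal shape.
    out = torch.empty_like(x)
    n_elements = out.numel()
    # One program instance per block of BLOCK_SIZE elements.
    grid = lambda meta: (triton.cdiv(n_elements, meta["BLOCK_SIZE"]),)
    add_kernel[grid](x, y, out, n_elements, BLOCK_SIZE=1024)
    return out
```

Calling `add(x, y)` on two CUDA tensors should give the same result as `x + y` in PyTorch; the kernel is ordinary Python source, which is why it can sit alongside the rest of a PyTorch program.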

The technology news site InfoWorld wrote that 'Triton's library provides a set of primitives reminiscent of NumPy.' Specifically, it noted that the library provides functions that perform matrix operations and reduce arrays according to some criterion. InfoWorld also noted that Triton is 'similar to Numba' in that these primitives are combined with your own code and compiled to run on the GPU.
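For readers unfamiliar with the comparison, the following short sketch shows the kind of NumPy operations InfoWorld is referring to: a matrix operation and reductions along an axis. This is plain NumPy running on the CPU, not Triton code, and the arrays are made-up example values.

```python
import numpy as np

# Two small example matrices (illustrative values only).
a = np.arange(6, dtype=np.float32).reshape(2, 3)  # [[0, 1, 2], [3, 4, 5]]
b = np.ones((3, 2), dtype=np.float32)

# Matrix operation: (2, 3) @ (3, 2) gives a (2, 2) result.
product = a @ b

# Reductions: collapse each row to a single value by some criterion.
row_sums = a.sum(axis=1)  # sum of each row
row_max = a.max(axis=1)   # largest element of each row

print(product)
print(row_sums, row_max)
```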

Triton is based on a paper published in 2019, but the project is still in its early stages. At the time of writing, it is only available on Linux and the documentation is minimal, so InfoWorld warned that early adopters may need to study the source code and examples closely.

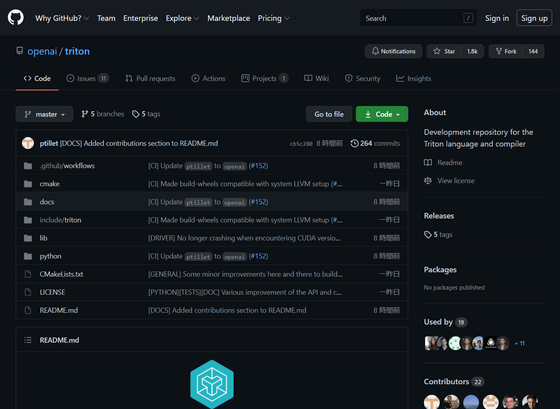

You can access the Triton development repository from:

GitHub - openai/triton: Development repository for the Triton language and compiler

https://github.com/openai/triton

in Software, Posted by logu_ii