Meta releases Meta Large Language Model Compiler, a large language model available for commercial use that can compile and optimize code

Meta has released the Meta Large Language Model Compiler (LLM Compiler), a family of large language models that can emulate a compiler and optimize code. The models are available for commercial use and are hosted on Hugging Face.

Meta Large Language Model Compiler: Foundation Models of Compiler Optimization | Research - AI at Meta

https://ai.meta.com/research/publications/meta-large-language-model-compiler-foundation-models-of-compiler-optimization/

Today we're announcing Meta LLM Compiler, a family of models built on Meta Code Llama with additional code optimization and compiler capabilities. These models can emulate the compiler, predict optimal passes for code size, and disassemble code. They can be fine-tuned for new… pic.twitter.com/GFDZDbZ1VF

— AI at Meta (@AIatMeta) June 27, 2024

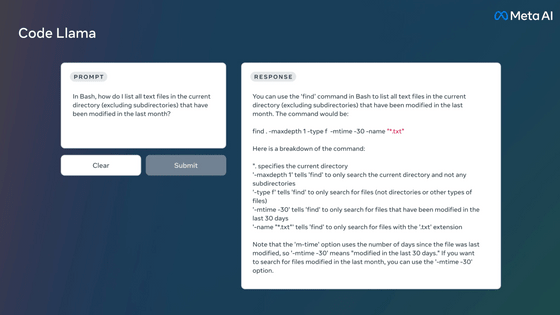

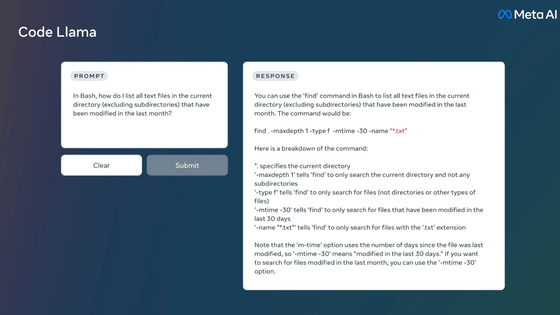

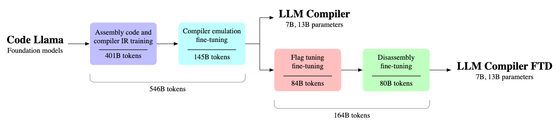

Meta's research team started from the Code Llama model and trained it further on a 546-billion-token corpus of compiler intermediate representations and assembly code, producing a model that can handle compiler intermediate representations, assembly language, and optimization techniques.

This release includes two pre-trained models, 'LLM Compiler 7B' and 'LLM Compiler 13B', along with 7B and 13B versions of 'LLM Compiler FTD'. The FTD models are fine-tuned to optimize assembly code for smaller code size and to disassemble assembly code into a compiler intermediate representation.

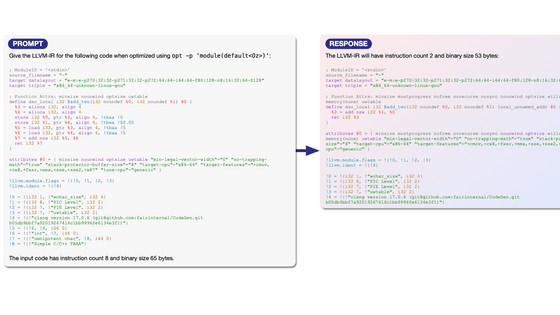

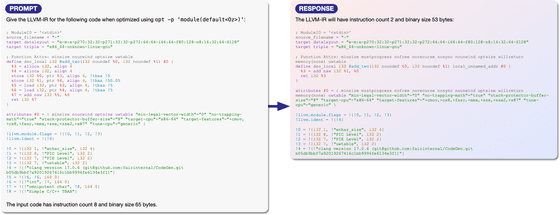

You can pass a compiler intermediate representation to the model as a prompt, specify the optimization to apply, and have the model output the code that results from that optimization. The LLM Compiler FTD 13B model generated smaller object files than the compiler's code size reduction option '-Oz' in 61% of cases.
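As a rough illustration of how a figure like "smaller in 61% of cases" can be computed, the following minimal sketch counts how often a model-optimized object file is strictly smaller than the '-Oz' baseline. The function name and the sample sizes are hypothetical and are not taken from Meta's evaluation.

```python
def fraction_improved(model_sizes, baseline_sizes):
    """Return the share of cases where the model's object file is
    strictly smaller than the baseline (for example, -Oz) object file.

    Both arguments are lists of object file sizes in bytes, paired by index.
    """
    if len(model_sizes) != len(baseline_sizes):
        raise ValueError("size lists must have the same length")
    if not model_sizes:
        return 0.0
    wins = sum(1 for m, b in zip(model_sizes, baseline_sizes) if m < b)
    return wins / len(model_sizes)


# Hypothetical sizes in bytes, for illustration only.
model_sizes = [90, 100, 80, 120]
baseline_sizes = [100, 100, 100, 100]
rate = fraction_improved(model_sizes, baseline_sizes)
```

Ties and regressions both count as "not improved" here. A real evaluation would probably report them separately.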

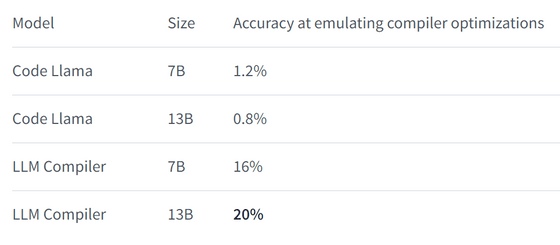

The comparison of compiler optimization emulation capabilities is shown in the figure below. The Code Llama model was almost never correct because it has no knowledge of the compiler's intermediate representation, whereas LLM Compiler matched the actual compiler output character for character in 16% of cases for the 7B model and 20% of cases for the 13B model.

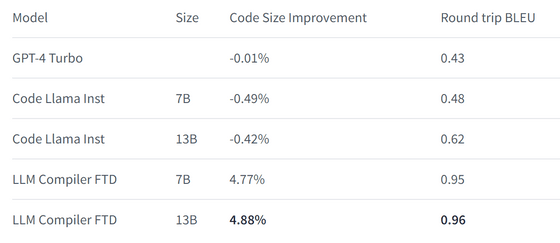
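A character-level match rate like the one described above can be computed with a simple string comparison. The sketch below is only an illustration: the function name and the sample strings are hypothetical, and Meta's evaluation may normalize the output differently.

```python
def exact_match_rate(predictions, references):
    """Return the share of predictions that are character-for-character
    identical to the corresponding reference compiler output."""
    if len(predictions) != len(references):
        raise ValueError("inputs must have the same length")
    if not references:
        return 0.0
    matches = sum(1 for p, r in zip(predictions, references) if p == r)
    return matches / len(references)


# Hypothetical model outputs and reference compiler outputs.
references = ["ret i32 0", "ret i32 %a", "ret void", "ret i32 1"]
predictions = ["ret i32 0", "ret i32 %b", "ret void", "ret  i32 1"]
match_rate = exact_match_rate(predictions, references)
```

Because the comparison is exact, even a single extra space (as in the last pair) counts as a mismatch, which is why this is a strict metric.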

In the disassembly task, which generates a compiler intermediate representation from x86 assembly code, the LLM Compiler FTD models not only improved code size but also beat other models by a wide margin on the BLEU score, a metric commonly used to evaluate translation tasks, indicating that they can disassemble code with high accuracy.
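For readers unfamiliar with BLEU, here is a minimal sketch of an unsmoothed, single-reference BLEU score over whitespace-separated tokens. It is a simplified illustration, not the exact scoring code used in Meta's evaluation, and the sample strings are hypothetical.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Unsmoothed BLEU of a candidate string against one reference string."""
    cand = candidate.split()
    ref = reference.split()
    if not cand:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        ref_counts = ngram_counts(ref, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        if total == 0 or clipped == 0:
            return 0.0  # without smoothing, any zero precision gives a zero score
        log_precision_sum += math.log(clipped / total)
    # Brevity penalty: penalize candidates shorter than the reference.
    if len(cand) > len(ref):
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1 - len(ref) / len(cand))
    return brevity_penalty * math.exp(log_precision_sum / max_n)


# Hypothetical disassembly output compared with a reference.
reference_ir = "define i32 @f ( i32 %x ) { ret i32 %x }"
candidate_ir = "define i32 @f ( i32 %a ) { ret i32 %a }"
score = bleu(candidate_ir, reference_ir)
```

The score is 1.0 only when every n-gram up to length four matches, and it falls toward 0 as the overlap shrinks. This makes BLEU a more forgiving measure than an exact match.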

All four models are released under the Meta Large Language Model Compiler (LLM Compiler) license, which permits commercial use, and are available for download from Hugging Face.
